Connect to Azure Data Lake Storage Data in Airbyte ELT Pipelines

Use CData Connect Cloud to build ELT pipelines for Azure Data Lake Storage data in Airbyte.

Airbyte lets you load data into any data warehouse, data lake, or database. Combined with CData Connect Cloud, Airbyte can run Extract, Load, Transform (ELT) pipelines directly from live Azure Data Lake Storage data. This article shows how to connect to Azure Data Lake Storage through Connect Cloud and build ELT pipelines for Azure Data Lake Storage data in Airbyte.

CData Connect Cloud provides a dedicated SQL Server interface for Azure Data Lake Storage, so you can query the data without first replicating it to a native database. With built-in optimized data processing, CData Connect Cloud pushes all supported SQL operations, including filters and JOINs, directly to Azure Data Lake Storage, using server-side processing to return the requested data quickly.

Configure Azure Data Lake Storage Connectivity for Airbyte

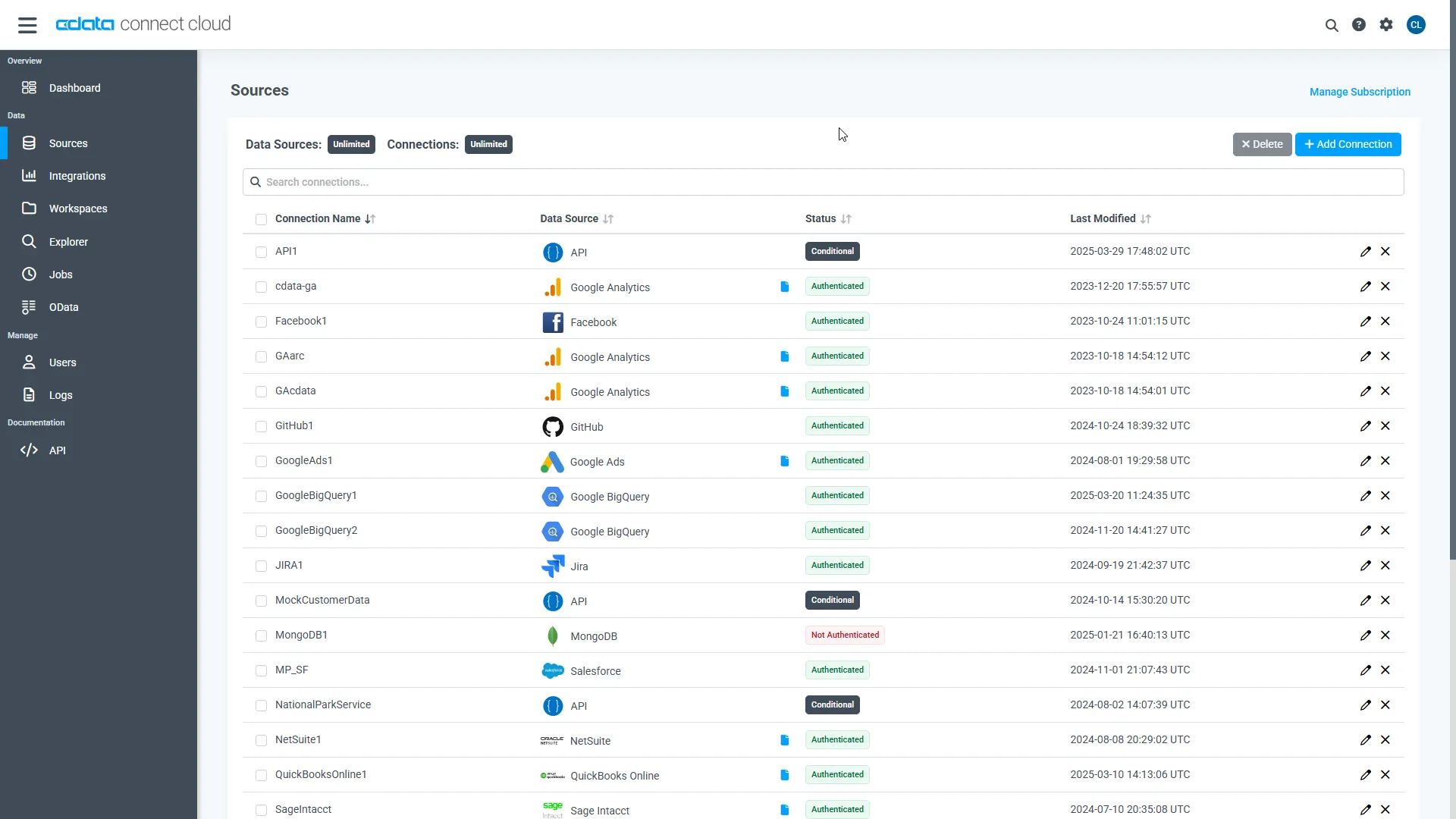

Connectivity to Azure Data Lake Storage from Airbyte is made possible through CData Connect Cloud. To work with Azure Data Lake Storage data from Airbyte, start by creating and configuring an Azure Data Lake Storage connection.

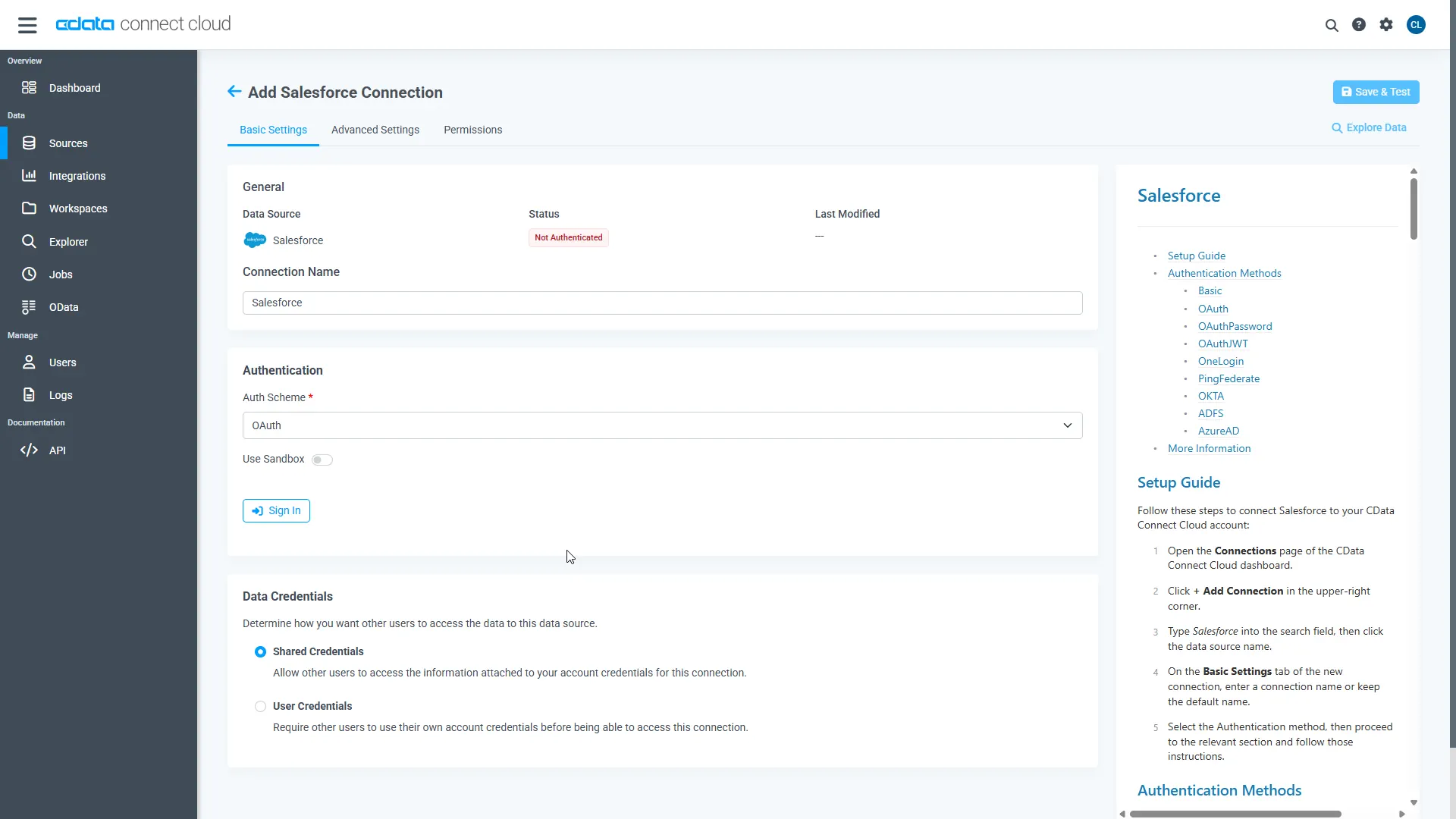

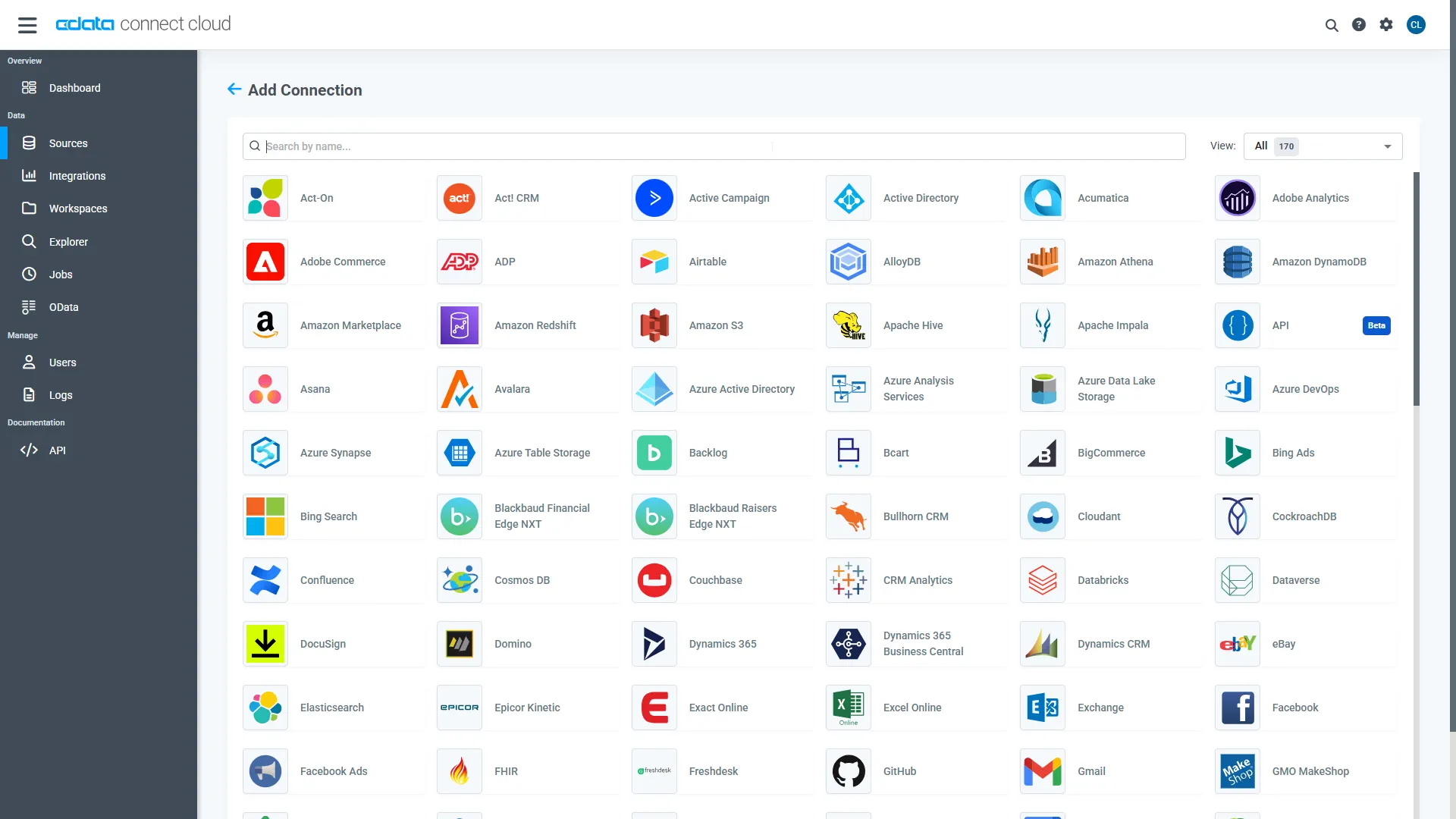

- Log in to Connect Cloud, click Sources, and then click Add Connection

- Select "Azure Data Lake Storage" from the Add Connection panel

- Enter the necessary authentication properties to connect to Azure Data Lake Storage.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Entra ID (formerly Azure AD) for authentication.

For this, an Active Directory (Entra ID) web application is required.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key used to authenticate calls to the API. See the AccessKey property documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

- Click Create & Test

-

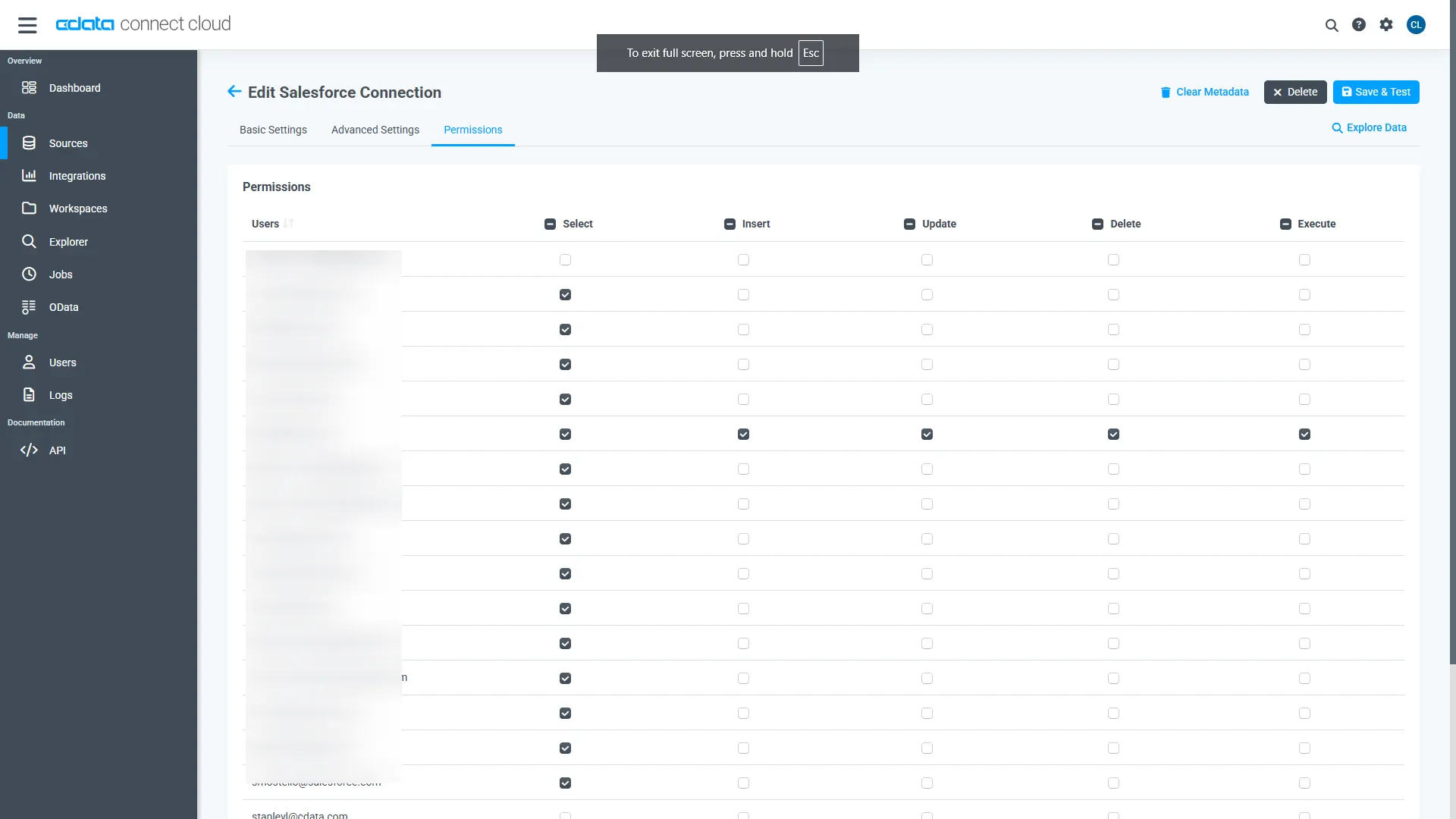

Navigate to the Permissions tab in the Add Azure Data Lake Storage Connection page and update the User-based permissions.
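As a recap of the authentication settings above, the required properties for each schema can be checked programmatically. The Python sketch below is a hypothetical helper, not part of Connect Cloud: it encodes the Gen 1 and Gen 2 required-property lists from this article and, for reference, joins the values into the semicolon-separated Name=Value form used by CData driver connection strings. In Connect Cloud itself you enter the same values in the connection form.

```python
# Required properties per Schema, taken from the Gen 1 / Gen 2 lists above.
# Directory is optional for both schemas (the root directory is used if omitted).
REQUIRED_PROPERTIES = {
    "ADLSGen1": ["Account", "OAuthClientId", "OAuthClientSecret", "TenantId"],
    "ADLSGen2": ["Account", "FileSystem", "AccessKey"],
}


def missing_properties(props: dict) -> list:
    """Return the required properties that are absent or empty for props["Schema"]."""
    schema = props.get("Schema")
    if schema not in REQUIRED_PROPERTIES:
        raise ValueError(f"Unknown Schema: {schema!r} (expected ADLSGen1 or ADLSGen2)")
    return [name for name in REQUIRED_PROPERTIES[schema] if not props.get(name)]


def to_connection_string(props: dict) -> str:
    """Join non-empty properties as Name=Value pairs separated by semicolons.

    Note: this sketch does not quote values that themselves contain ';'.
    """
    return ";".join(f"{key}={value}" for key, value in props.items() if value)


# Hypothetical Gen 2 settings with the access key not yet filled in.
gen2 = {
    "Schema": "ADLSGen2",
    "Account": "myaccount",
    "FileSystem": "myfilesystem",
    "AccessKey": "",
}
print(missing_properties(gen2))  # ['AccessKey']
```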

Add a Personal Access Token

When connecting to Connect Cloud through the REST API, the OData API, or the Virtual SQL Server, a Personal Access Token (PAT) is used to authenticate the connection to Connect Cloud. It is best practice to create a separate PAT for each service to maintain granularity of access.

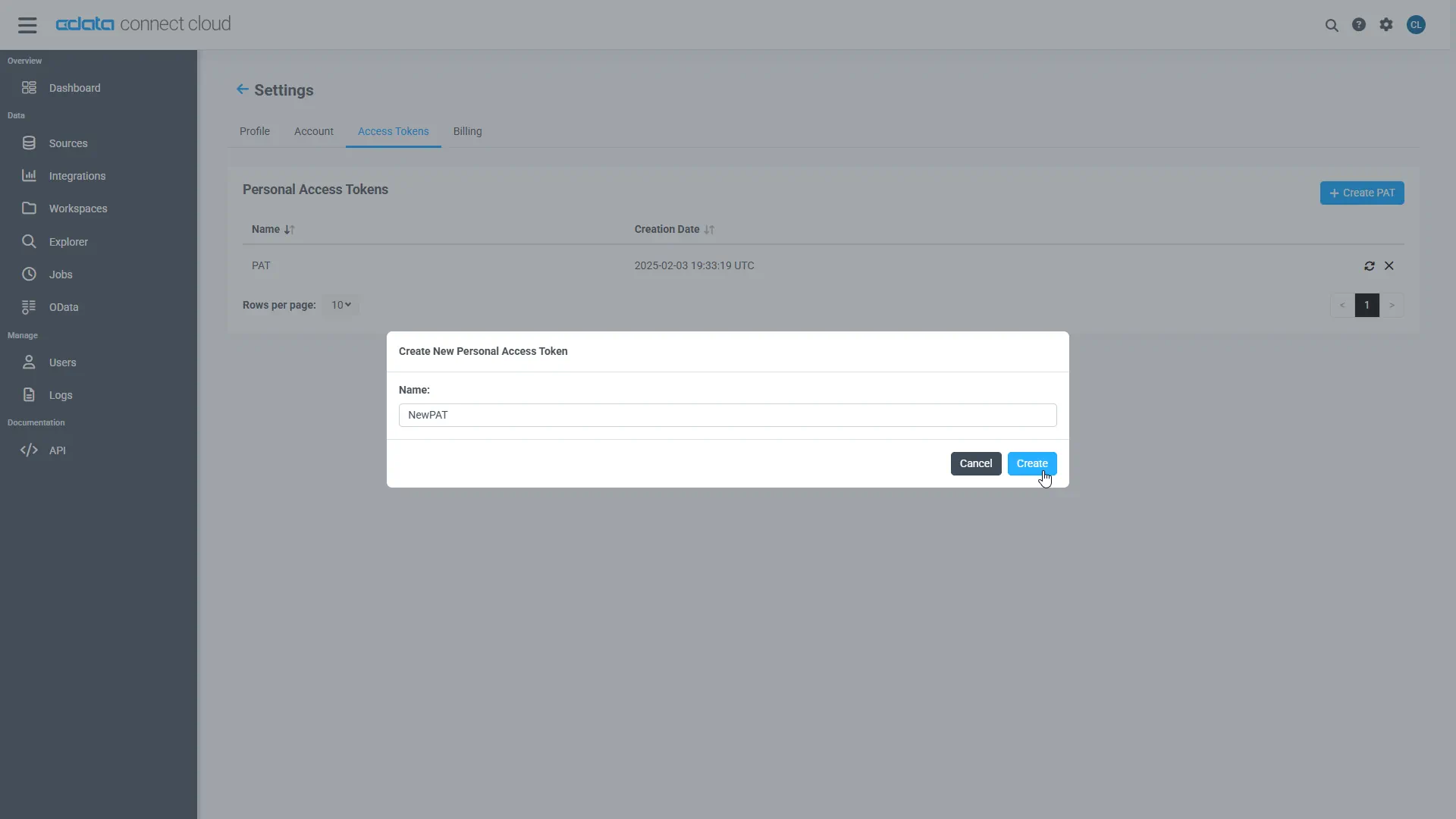

- Click the Gear icon at the top right of the Connect Cloud app to open the settings page.

- On the Settings page, go to the Access Tokens section and click Create PAT.

- Give the PAT a name and click Create.

- The personal access token is only visible at creation, so be sure to copy it and store it securely for future use.
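Because the PAT is shown only once, any script that reuses it should read it from a secure location rather than embed it in code. A minimal Python sketch, assuming the token is kept in an environment variable; the name CONNECT_CLOUD_PAT is hypothetical, and a secrets manager works just as well:

```python
import os

# Hypothetical variable name: set it outside source control, e.g. in your
# shell profile, CI secret store, or a secrets manager.
PAT_ENV_VAR = "CONNECT_CLOUD_PAT"


def load_pat(env_var: str = PAT_ENV_VAR) -> str:
    """Read the Connect Cloud PAT from the environment instead of hard-coding it."""
    pat = os.environ.get(env_var, "").strip()
    if not pat:
        raise RuntimeError(f"Set {env_var} to your Connect Cloud personal access token")
    return pat


def mask(secret: str) -> str:
    """Hide all but the last 4 characters so logs never contain the full token."""
    if len(secret) <= 4:
        return "*" * len(secret)
    return "*" * (len(secret) - 4) + secret[-4:]
```

Masking lets a script log which token it is using without ever writing the full value to disk.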

With the connection configured and a PAT generated, you are ready to connect to Azure Data Lake Storage data from Airbyte.

Connect to Azure Data Lake Storage from Airbyte

To establish a connection from Airbyte to CData Connect Cloud, follow these steps.

- Log in to your Airbyte account

- On the left panel, click Sources, then Add New Source

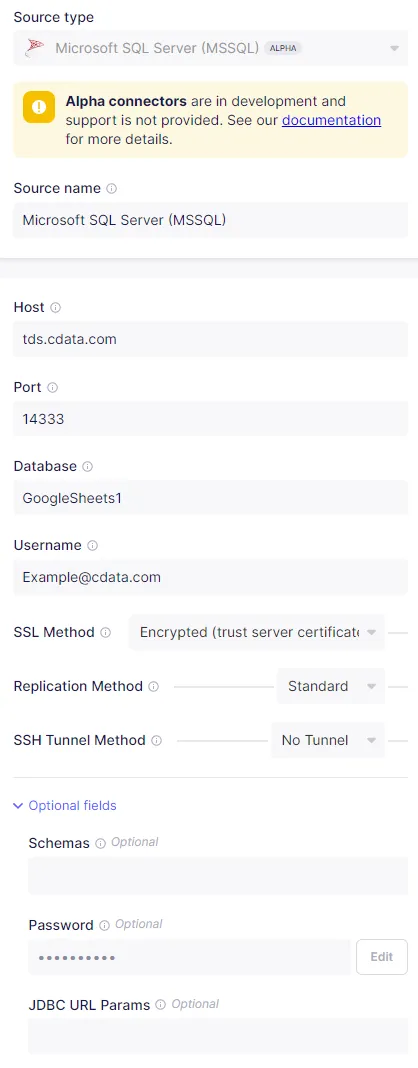

- Set Source Type to MSSQL Server to connect to the TDS endpoint

- Set Source Name to a name of your choice

- Set Host URL to tds.cdata.com

- Set Port to 14333

- Set Database to the name of the connection you previously configured, e.g. ADLS1.

- Set Username to your Connect Cloud username

- Set SSL Method to Encrypted (trust server certificate), leave the Replication Method as standard, and set SSH Tunnel Method to No Tunnel

- (Optional) Set Schema to limit the source to the schema(s) you want to sync

- Set Password to your Connect Cloud PAT

- (Optional) Enter any needed JDBC URL Params

- Click Test and Save to create the data source.

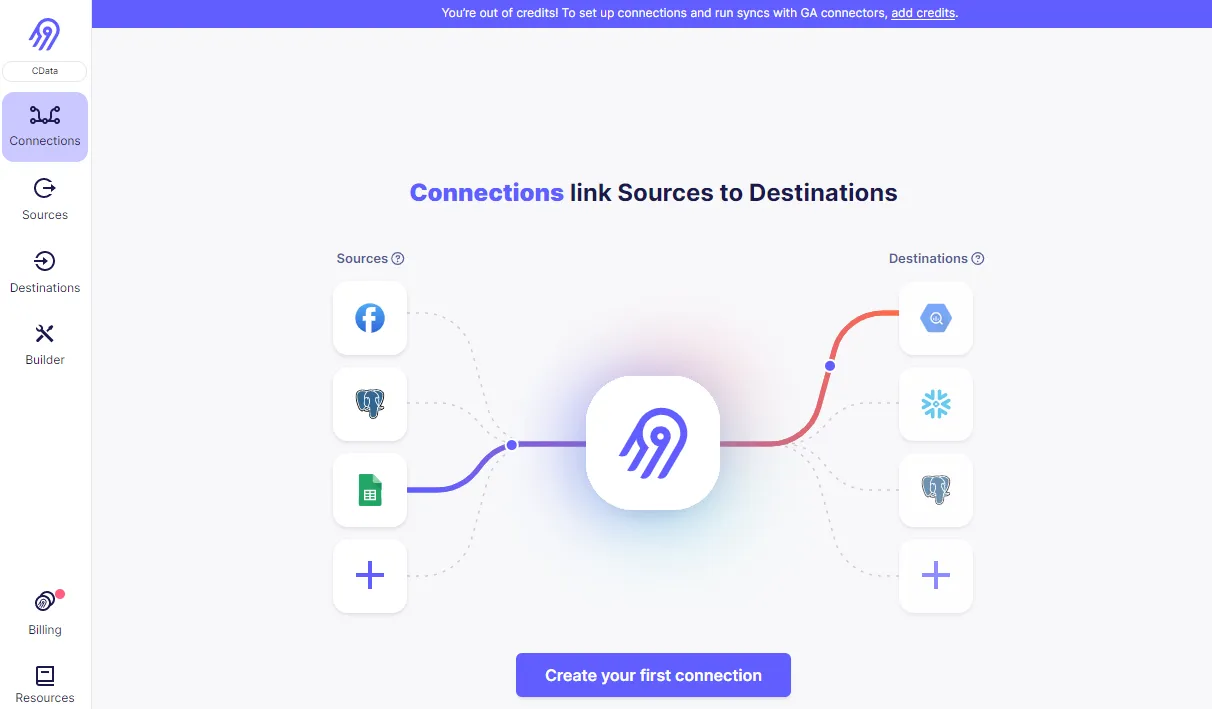
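Airbyte's MSSQL source connects over JDBC, so the settings above roughly translate into a Microsoft SQL Server JDBC URL. The Python sketch below illustrates that mapping under stated assumptions; it is not Airbyte's actual code. It assumes the "Encrypted (trust server certificate)" SSL method corresponds to encrypt=true;trustServerCertificate=true, and that JDBC URL Params are key=value pairs joined by &, as Airbyte's form describes them. The username and PAT are passed separately as the user and password, so they do not appear in the URL.

```python
from dataclasses import dataclass


@dataclass
class TdsSourceSettings:
    """Values entered in the Airbyte MSSQL source form in the steps above."""
    database: str           # name of the Connect Cloud connection, e.g. "ADLS1"
    username: str           # Connect Cloud username (sent as the user, not in the URL)
    password: str           # Connect Cloud PAT (sent as the password, not in the URL)
    host: str = "tds.cdata.com"
    port: int = 14333
    extra_params: str = ""  # optional JDBC URL Params, e.g. "key1=value1&key2=value2"


def jdbc_url(s: TdsSourceSettings) -> str:
    """Build a SQL Server JDBC URL for the Connect Cloud TDS endpoint.

    Assumption: "Encrypted (trust server certificate)" maps to
    encrypt=true;trustServerCertificate=true.
    """
    props = [f"databaseName={s.database}", "encrypt=true", "trustServerCertificate=true"]
    if s.extra_params:
        # Airbyte joins extra params with '&'; the JDBC URL separates them with ';'.
        props.extend(p for p in s.extra_params.split("&") if p)
    return f"jdbc:sqlserver://{s.host}:{s.port};" + ";".join(props)
```

The same URL, together with your username and PAT, can help when checking the endpoint from another JDBC-based SQL Server client before troubleshooting the Airbyte source itself.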

Create ELT Pipelines for Azure Data Lake Storage Data

To move Azure Data Lake Storage data into a destination, click Connections and then Set Up Connection. Select the source created above and your desired destination, then let Airbyte finish setting up the connection. When it is done, the connection is ready to use.

Get CData Connect Cloud

To get live data access to 100+ SaaS, Big Data, and NoSQL sources directly from Airbyte, try CData Connect Cloud today!