
Search Azure Data Lake Storage External Objects in Salesforce Connect (API Server)

Use the API Server to securely provide OData feeds of Azure Data Lake Storage data to smart devices and cloud-based applications. Use the API Server and Salesforce Connect to create Azure Data Lake Storage objects that you can access from apps and the dashboard.

The CData API Server enables you to access Azure Data Lake Storage data from cloud-based applications like the Salesforce console and mobile applications like the Salesforce1 Mobile App. In this article, you will use the API Server and Salesforce Connect to access Azure Data Lake Storage external objects alongside standard Salesforce objects.

Set Up the API Server

If you have not already done so, download the CData API Server. Once you have installed the API Server, follow the steps below to begin producing secure Azure Data Lake Storage OData services:

Connect to Azure Data Lake Storage

To work with Azure Data Lake Storage data from Salesforce Connect, start by creating and configuring an Azure Data Lake Storage connection. Follow the steps below to configure the API Server to connect to Azure Data Lake Storage data:

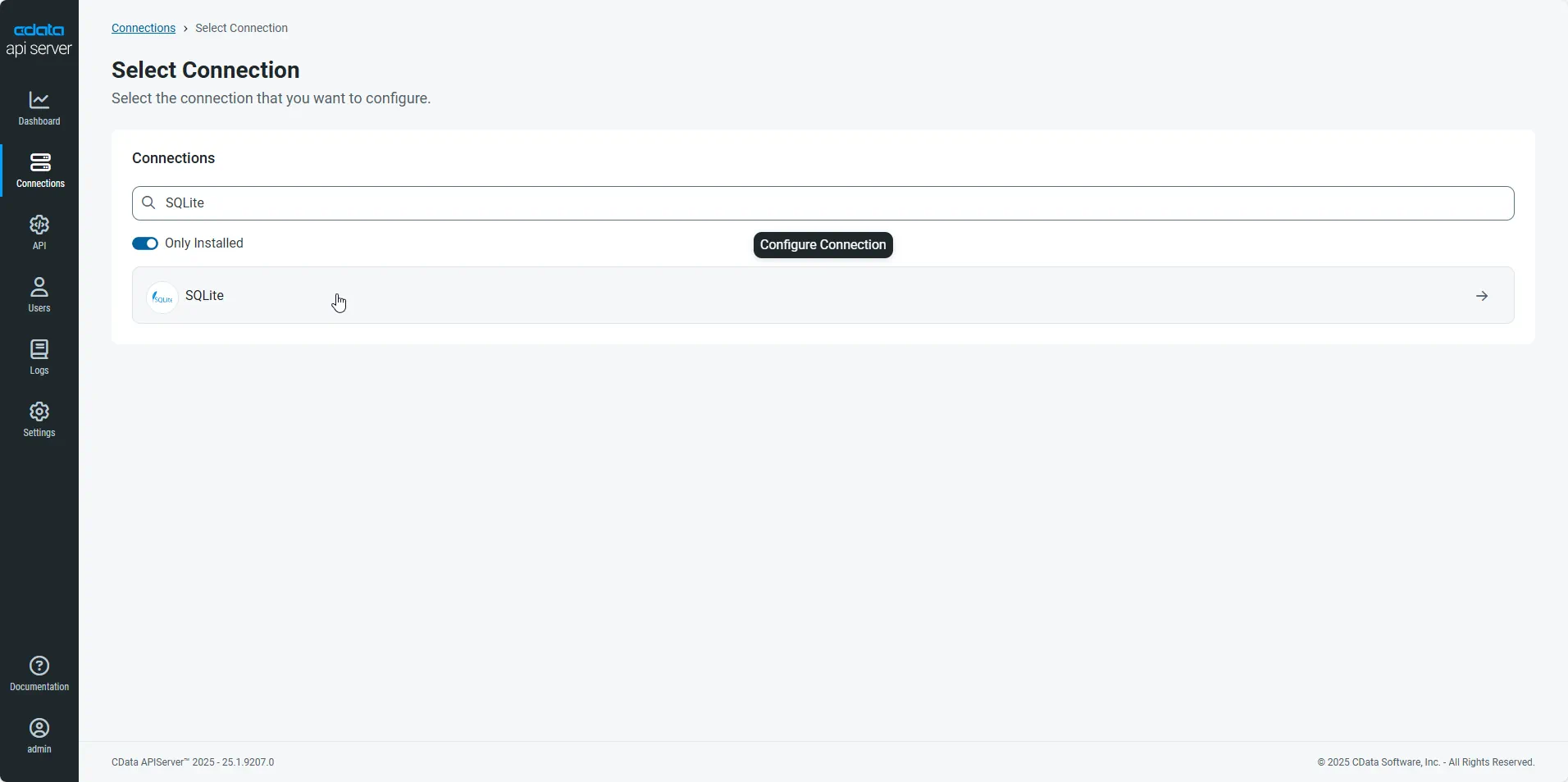

- First, navigate to the Connections page.

-

Click Add Connection and then search for and select the Azure Data Lake Storage connection.

-

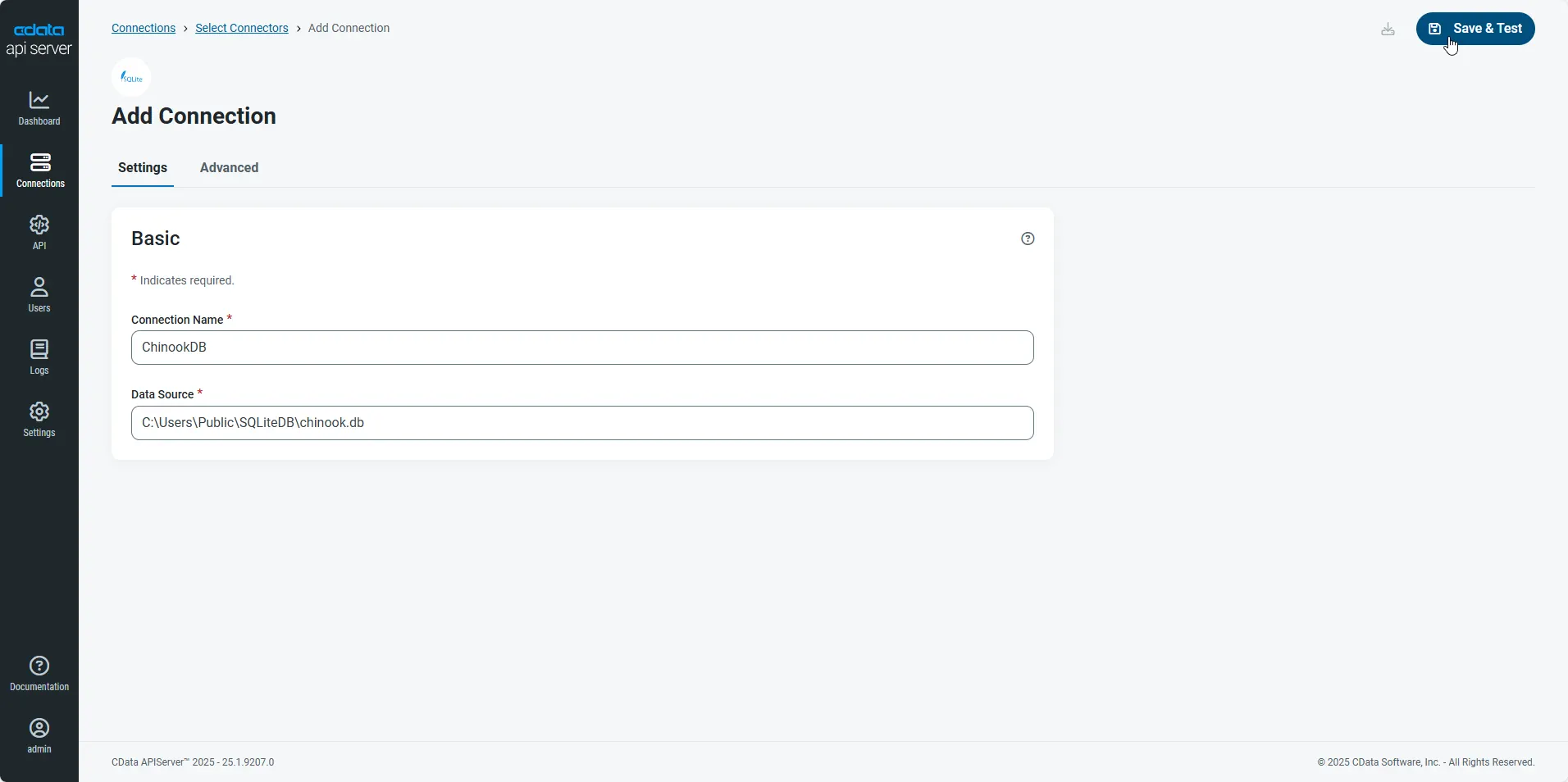

Enter the necessary authentication properties to connect to Azure Data Lake Storage.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Entra ID (formerly Azure AD) for authentication.

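For this, an Entra ID (Active Directory) web application is required. The application Id of that app and a key (client secret) generated for it supply the OAuthClientId and OAuthClientSecret properties below.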

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the connector documentation for more information on how to acquire it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key that will be used to authenticate calls to the API. See the AccessKey property in the connector documentation for more information on how to acquire it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

- After configuring the connection, click Save & Test to confirm a successful connection.
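The required properties differ by Schema value. The sketch below is a hypothetical helper and is not part of the API Server. It checks that the required properties for the chosen schema are present. It then joins them into a semicolon-separated Name=Value string, loosely modeled on the connection strings CData drivers accept.

In the API Server itself, you enter the same values in the connection form. Directory is treated as optional because the root directory is used when it is omitted. All values are placeholders.

```python
# Hypothetical helper (not part of the API Server): checks the properties
# listed above for each Schema value and joins them into a "Name=Value;"
# string. It does not contact Azure, and values containing ';' would need
# quoting, which this sketch does not handle.

REQUIRED = {
    "ADLSGen1": ["Account", "OAuthClientId", "OAuthClientSecret", "TenantId"],
    "ADLSGen2": ["Account", "FileSystem", "AccessKey"],
}
OPTIONAL = ["Directory"]  # the root directory is used when Directory is omitted


def build_connection_string(props: dict[str, str]) -> str:
    schema = props.get("Schema")
    if schema not in REQUIRED:
        raise ValueError(f"Schema must be one of {sorted(REQUIRED)}, got {schema!r}")
    missing = [name for name in REQUIRED[schema] if not props.get(name)]
    if missing:
        raise ValueError(f"{schema} is missing required properties: {missing}")
    # Required properties first, then any optional ones that were supplied.
    names = ["Schema"] + REQUIRED[schema] + [n for n in OPTIONAL if props.get(n)]
    return "".join(f"{name}={props[name]};" for name in names)


if __name__ == "__main__":
    gen2 = {
        "Schema": "ADLSGen2",
        "Account": "myaccount",        # placeholder values
        "FileSystem": "myfilesystem",
        "AccessKey": "<access-key>",
    }
    print(build_connection_string(gen2))
```

Failing early on a missing property mirrors what Save & Test would report, without a round trip to Azure.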

Configure API Server Users

Next, create a user to access your Azure Data Lake Storage data through the API Server. You can add and configure users on the Users page. Follow the steps below to configure and create a user:

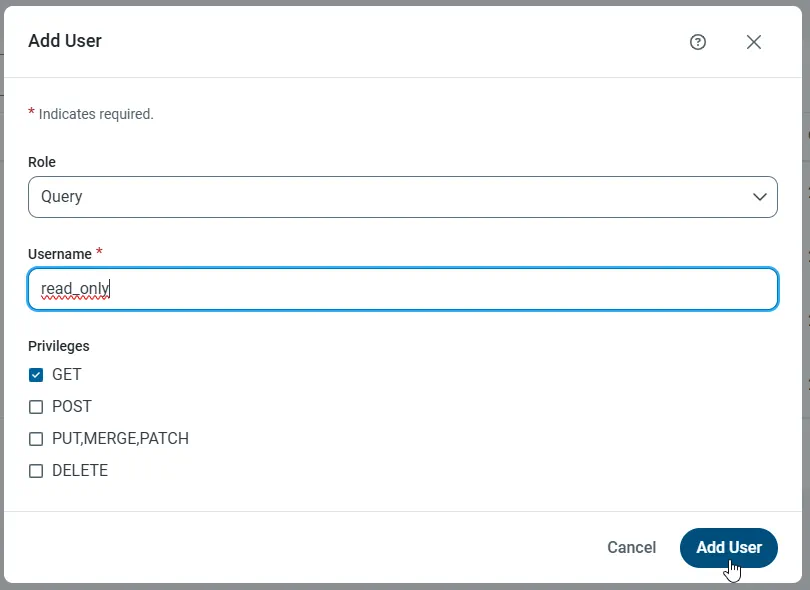

- On the Users page, click Add User to open the Add User dialog.

-

Next, set the Role, Username, and Privileges properties and then click Add User.

-

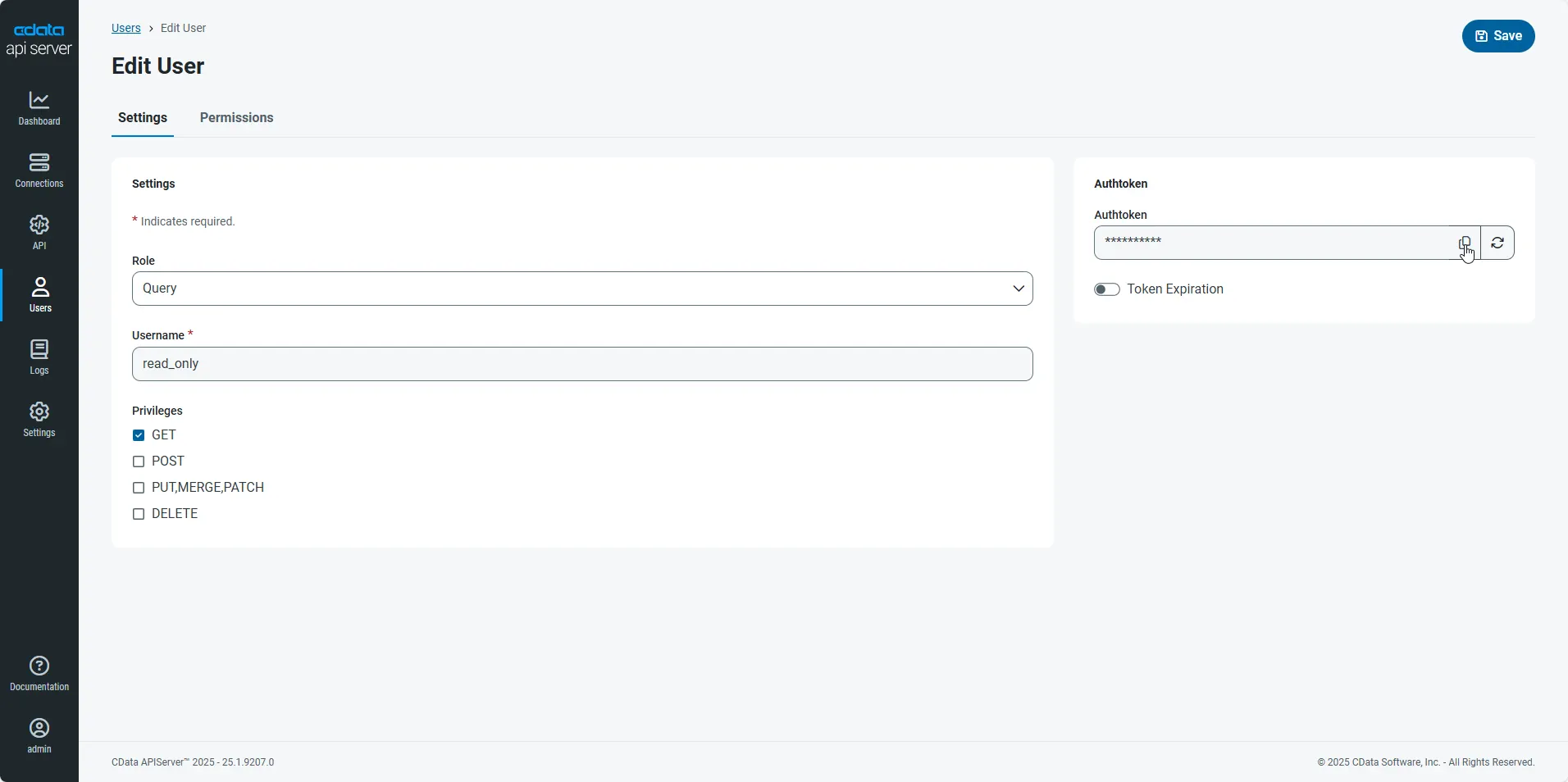

An Authtoken is then generated for the user. You can find the Authtoken and other information for each user on the Users page:
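The Salesforce setup later in this article signs in with basic authentication: the API Server username as the username and the user's Authtoken as the password. Any HTTP client can present the same credentials.

The sketch below builds the standard Basic `Authorization` header (RFC 7617) from those two values. The username and token shown are placeholders.

```python
import base64


def basic_auth_header(username: str, authtoken: str) -> dict[str, str]:
    """Return an HTTP Basic Authorization header for an API Server user.

    The Authtoken generated on the Users page takes the place of a password.
    """
    if ":" in username:
        # RFC 7617 does not allow a colon in the user-id part.
        raise ValueError("username must not contain ':'")
    credentials = f"{username}:{authtoken}".encode("utf-8")
    return {"Authorization": "Basic " + base64.b64encode(credentials).decode("ascii")}


if __name__ == "__main__":
    print(basic_auth_header("salesforce_user", "<authtoken>"))
```

Basic credentials are only base64-encoded, not encrypted, so send them over TLS outside of testing.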

Creating API Endpoints for Azure Data Lake Storage

Having created a user, you are ready to create API endpoints for the Azure Data Lake Storage tables:

-

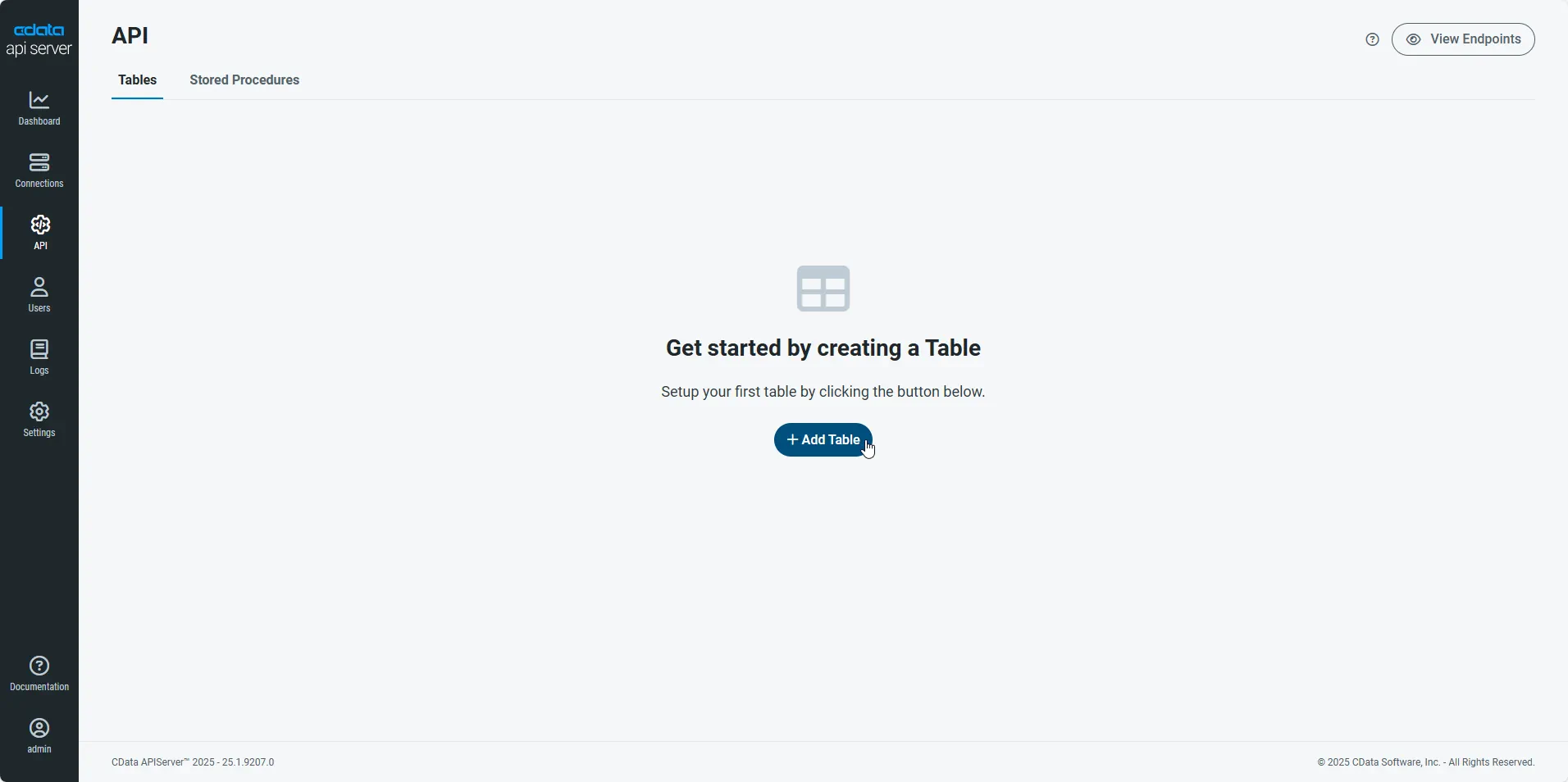

First, navigate to the API page and then click

Add Table

.

-

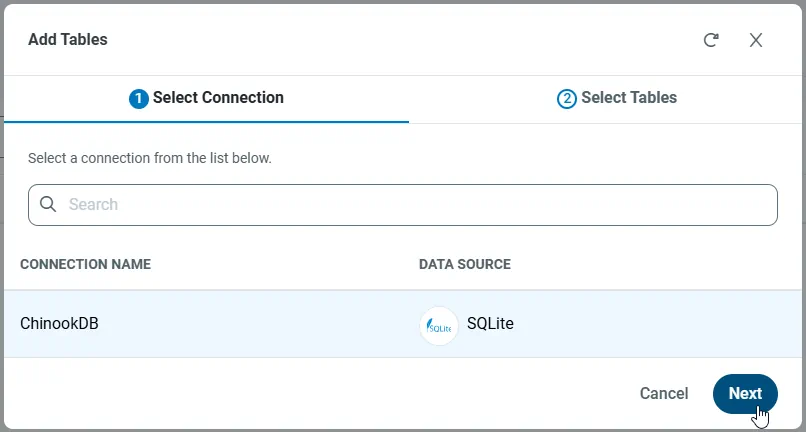

Select the connection you wish to access and click Next.

-

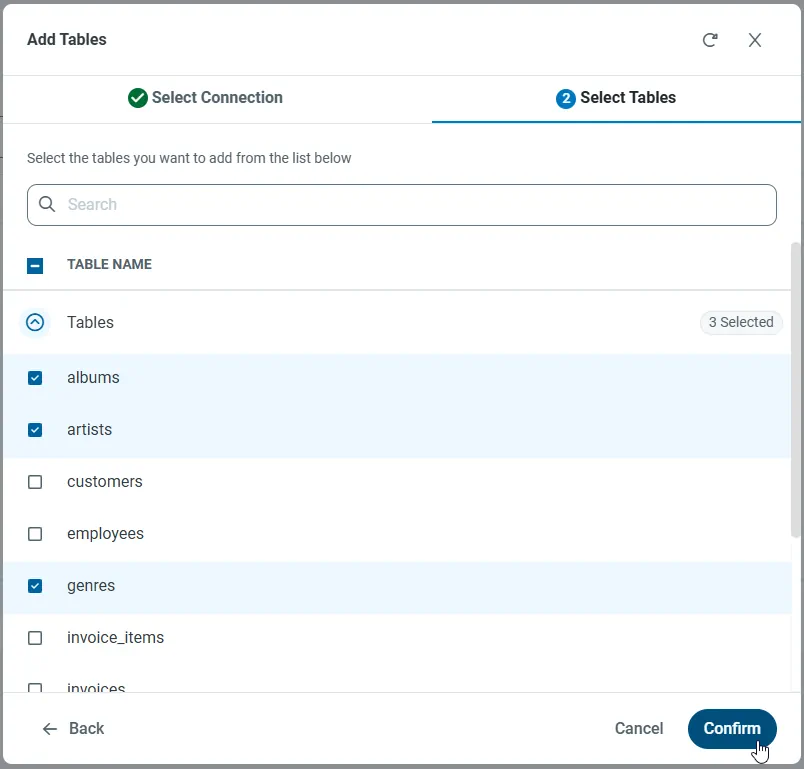

With the connection selected, create endpoints by selecting each table and then clicking Confirm.

Gather the OData URL

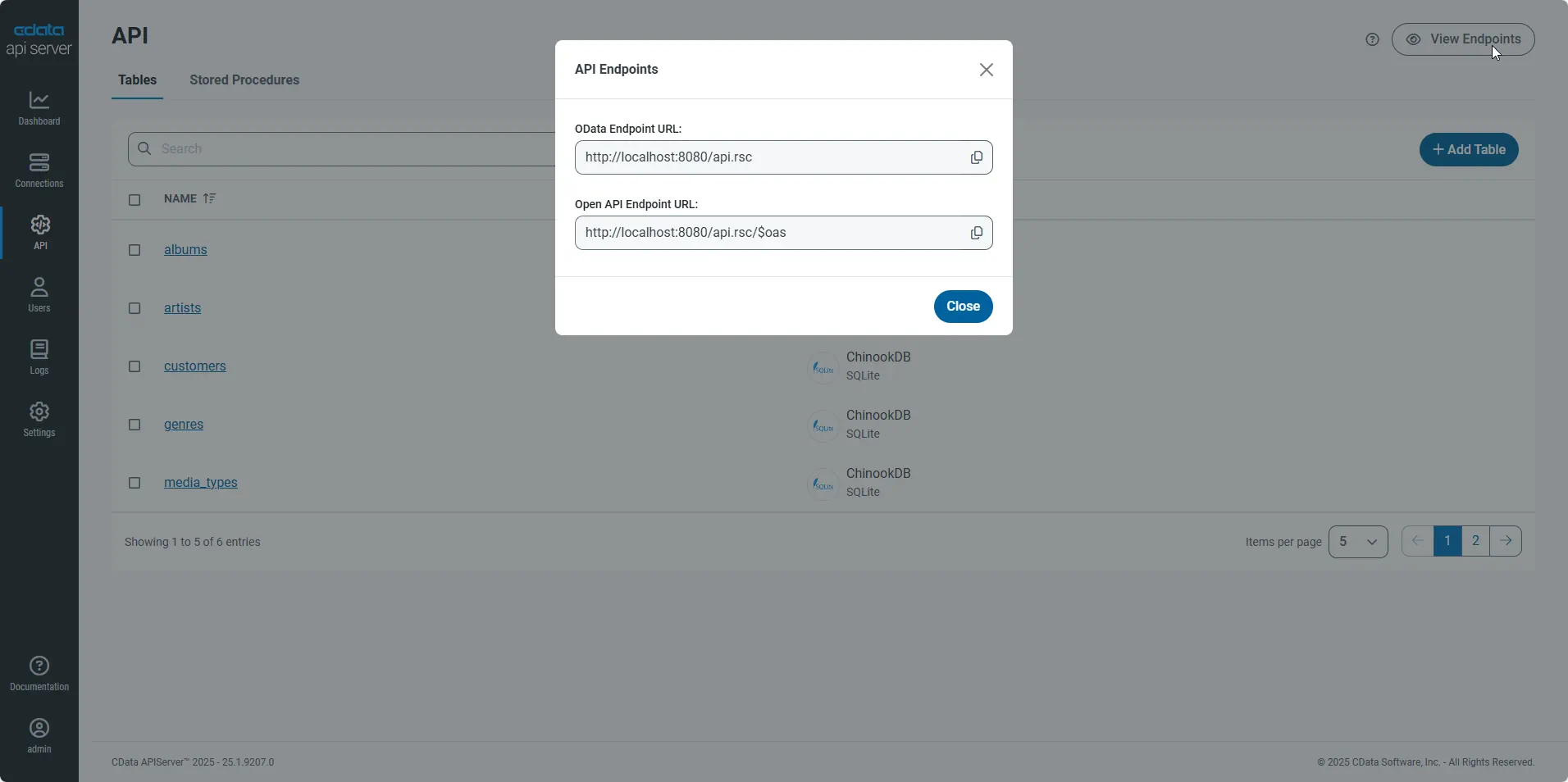

Having configured a connection to Azure Data Lake Storage data, created a user, and added resources to the API Server, you now have an easily accessible REST API, based on the OData protocol, for those resources. From the API page in the API Server, you can view and copy the API endpoints.
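Each endpoint behaves as an OData entity set under the service root. Standard OData 4.0 system query options such as `$select`, `$filter`, and `$top` can therefore be appended to its URL, and a JSON collection response carries its rows in a `value` array.

The sketch below assumes the service root format given in the Salesforce steps (https://your-server:your-port/api.rsc). It also assumes each table's endpoint sits directly under that root. The table name `Resources` and the columns `FullPath` and `Type` are hypothetical; substitute the endpoints you copied from the API page. Sending the request is left to your HTTP client.

```python
import json
from urllib.parse import quote, urlencode


def odata_query_url(service_root, entity_set, *, select=None, filter_expr=None, top=None):
    """Build an OData 4.0 query URL for one API Server endpoint (entity set)."""
    params = {}
    if select:
        params["$select"] = ",".join(select)
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    url = f"{service_root.rstrip('/')}/{quote(entity_set)}"
    if not params:
        return url
    # quote_via=quote encodes spaces as %20 instead of '+'; keeping '$' and ','
    # unescaped leaves the option names and the $select list readable.
    return url + "?" + urlencode(params, safe="$,", quote_via=quote)


def rows_from_response(body):
    """Return the entities from an OData JSON collection response.

    Collection responses carry their rows in a "value" array; a body without
    one (for example, a single entity) yields an empty list here.
    """
    return json.loads(body).get("value", [])


if __name__ == "__main__":
    root = "https://your-server:your-port/api.rsc"  # format from this article
    # "Resources", "FullPath", and "Type" are hypothetical table/column names.
    print(odata_query_url(root, "Resources", select=["FullPath", "Type"], top=10))
```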

Connect to Azure Data Lake Storage Data as an External Data Source

Follow the steps below to connect to the feed produced by the API Server.

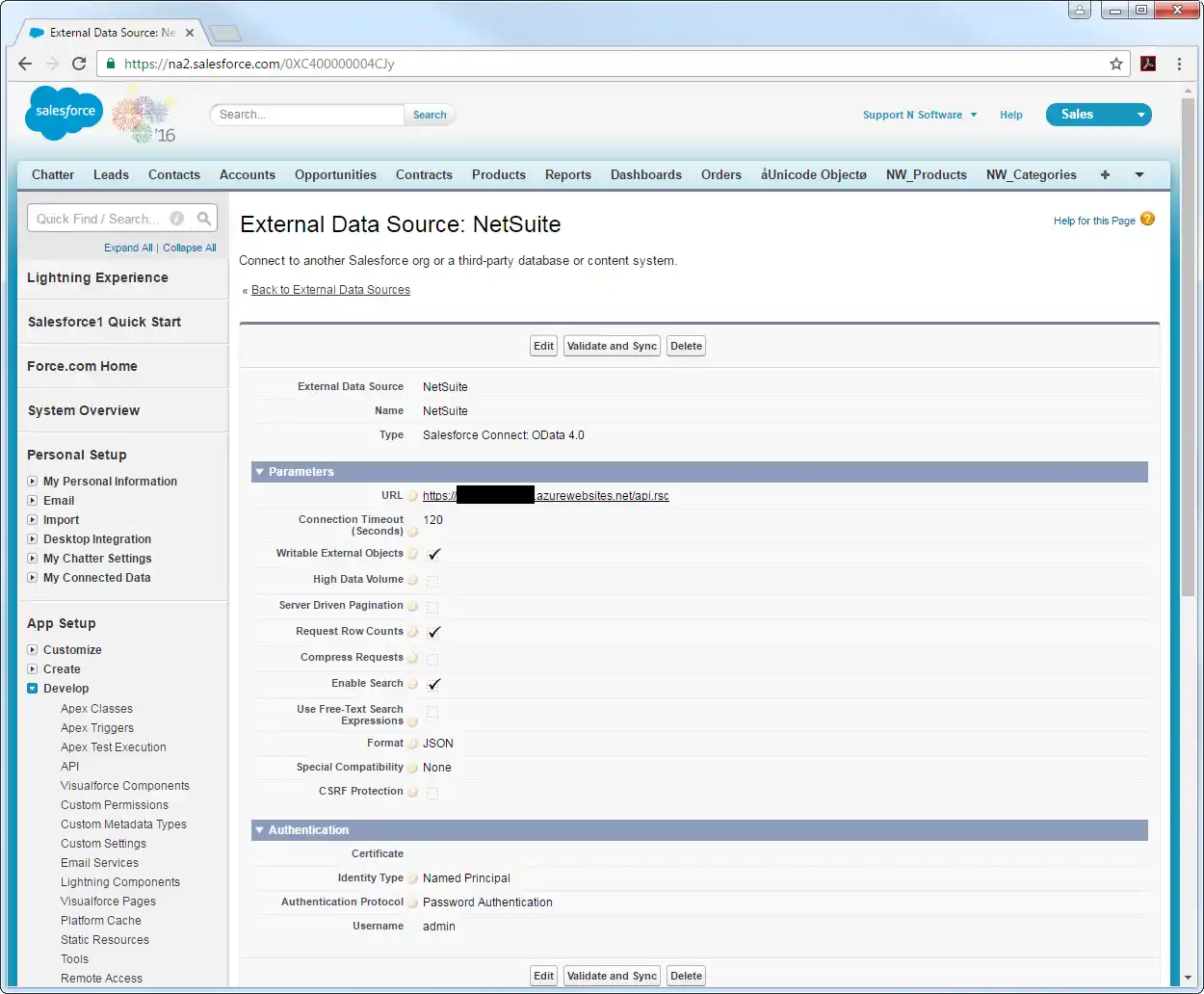

- Log in to Salesforce and click Setup -> Develop -> External Data Sources.

- Click New External Data Source.

- Enter values for the following properties:

- External Data Source: Enter a label to be used in list views and reports.

- Name: Enter a unique identifier.

- Type: Select the option "Salesforce Connect: OData 4.0".

- URL: Enter the URL of the API Server's OData endpoint. The format of the OData URL is https://your-server:your-port/api.rsc. Note that plain-text HTTP is suitable only for testing; for production, use TLS.

- Format: Select JSON.

- In the Authentication section, set the following properties:

- Identity Type: If all members of your organization will use the same credentials to access the API Server, select "Named Principal". If the members of your organization will connect with their own credentials, select "Per User".

- Authentication Protocol: Select Password Authentication to use basic authentication.

- Certificate: Enter or browse to the certificate to be used to encrypt and authenticate communications from Salesforce to your server.

- Username: Enter the username for a user known to the API Server.

- Password: Enter the user's authtoken.
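Before saving the data source, you can sanity-check the URL against the guidance above. In production, Salesforce should reach the API Server over TLS, and the path should end at the api.rsc service root.

The sketch below is a hypothetical pre-flight check, not a Salesforce or API Server feature. It only inspects the string and never contacts the server.

```python
from urllib.parse import urlsplit


def check_odata_url(url, production=True):
    """Return problems found in an External Data Source URL (empty list if none).

    Hypothetical pre-flight check based on the guidance above; it does not
    contact the server.
    """
    problems = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append(f"unsupported scheme {parts.scheme!r}")
    elif parts.scheme == "http" and production:
        problems.append("plain-text HTTP is suitable only for testing; use https")
    if not parts.hostname:
        problems.append("missing host name")
    try:
        _ = parts.port  # raises ValueError for a non-numeric or out-of-range port
    except ValueError:
        problems.append("port must be a number")
    if not parts.path.rstrip("/").endswith("/api.rsc"):
        problems.append("path should end with /api.rsc")
    return problems


if __name__ == "__main__":
    for candidate in ("https://api.example.com:8443/api.rsc",
                      "http://api.example.com:8080/api.rsc"):
        print(candidate, check_odata_url(candidate) or "OK")
```

One common slip this catches: pasting the template URL with the literal "your-port" left in place.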

Synchronize Azure Data Lake Storage Objects

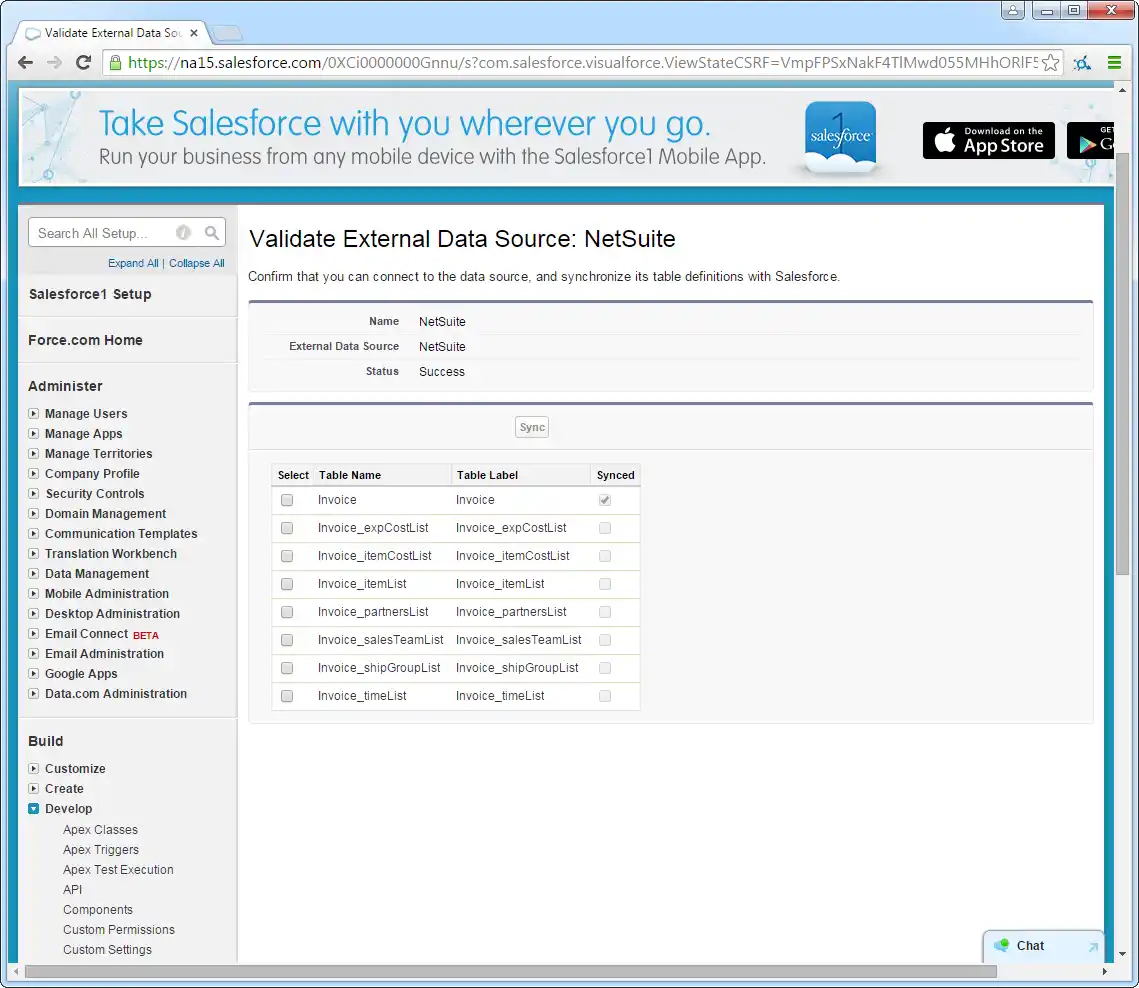

After you have created the external data source, follow the steps below to create Azure Data Lake Storage external objects that reflect any changes in the data source. You will synchronize the definitions for the Azure Data Lake Storage external objects with the definitions for Azure Data Lake Storage tables.

- Click the link for the external data source you created.

- Click Validate and Sync.

- Select the Azure Data Lake Storage tables you want to work with as external objects.
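Salesforce Connect's OData adapter builds its list of syncable tables from the service metadata. In OData 4.0, that metadata is the CSDL document served at the service root plus `/$metadata`, and each `EntitySet` in it corresponds to a table. Fetching that document yourself, with the same Basic credentials, is a quick way to see which tables Validate and Sync should offer.

The sketch below lists entity set names using only the standard library. The sample XML is an abbreviated, made-up document rather than actual API Server output, and its table names are hypothetical.

```python
import xml.etree.ElementTree as ET

# OData 4.0 CSDL XML namespaces (OASIS).
NS = {
    "edmx": "http://docs.oasis-open.org/odata/ns/edmx",
    "edm": "http://docs.oasis-open.org/odata/ns/edm",
}


def entity_sets(metadata_xml):
    """Return EntitySet names from an OData 4.0 $metadata document."""
    root = ET.fromstring(metadata_xml)
    path = "edmx:DataServices/edm:Schema/edm:EntityContainer/edm:EntitySet"
    return [es.get("Name") for es in root.findall(path, NS)]


# Made-up sample; a real document also declares entity types and properties.
SAMPLE = """<edmx:Edmx Version="4.0" xmlns:edmx="http://docs.oasis-open.org/odata/ns/edmx">
  <edmx:DataServices>
    <Schema Namespace="Example" xmlns="http://docs.oasis-open.org/odata/ns/edm">
      <EntityContainer Name="Container">
        <EntitySet Name="Resources" EntityType="Example.Resources"/>
        <EntitySet Name="Permissions" EntityType="Example.Permissions"/>
      </EntityContainer>
    </Schema>
  </edmx:DataServices>
</edmx:Edmx>"""

if __name__ == "__main__":
    print(entity_sets(SAMPLE))
```

If a table you added on the API page is missing from this list, it will not appear in Validate and Sync either.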

Access Azure Data Lake Storage Data as Salesforce Objects

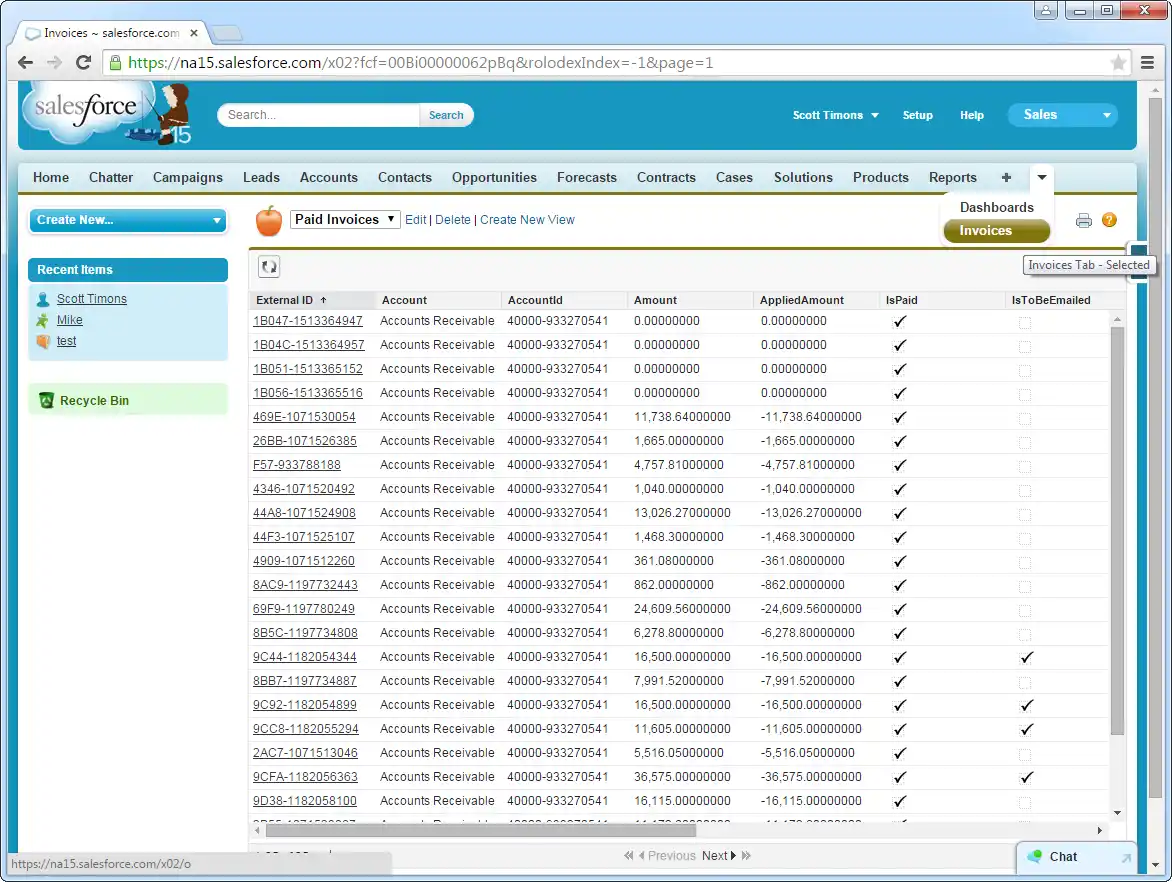

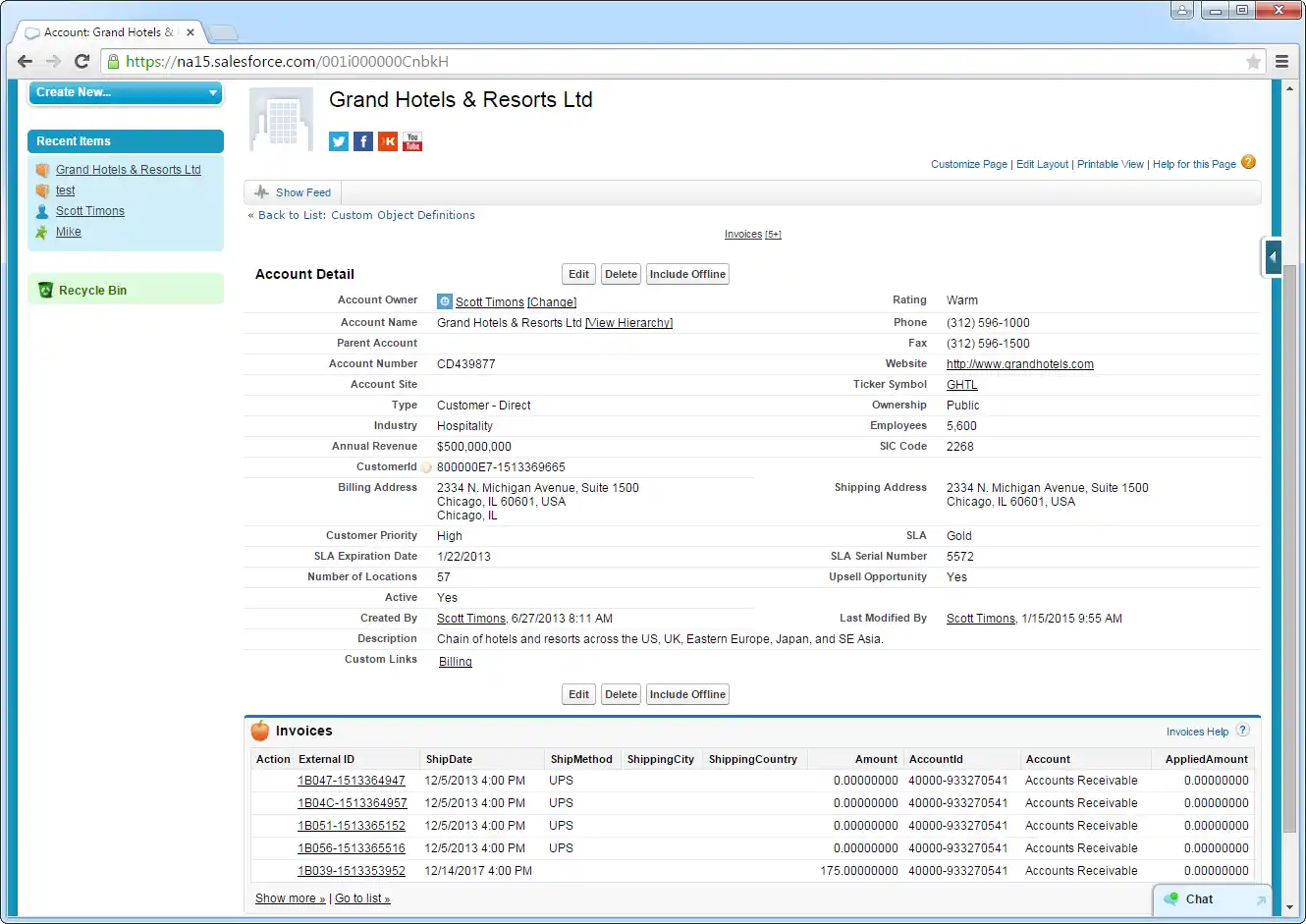

After adding Azure Data Lake Storage data as an external data source and syncing Azure Data Lake Storage tables with Azure Data Lake Storage external objects, you can use the external objects just as you would standard Salesforce objects.

- Create a new tab with a filter list view.

- Display related lists of Azure Data Lake Storage external objects alongside standard Salesforce objects.

Troubleshooting

You can use the following checklist to avoid typical connection problems:

- Ensure that your server has a publicly accessible IP address. One layer up, at the operating system layer, ensure that your firewall has an opening for the port the API Server is running on. At the application layer, ensure that you have added trusted IP addresses on the Settings -> Security tab of the administration console.

- Ensure that you are using a connection secured by an SSL certificate from a commercial, trusted CA. Salesforce does not currently accept self-signed certificates or internal CAs.

- Ensure that the server hosting the API Server is using TLS 1.1 or above. If you are using the .NET API Server, you can accomplish this by using the .NET API Server's embedded server.

If you are using IIS, TLS 1.1 and 1.2 are supported but not enabled by default. To enable these protocols, refer to the how-to on MSDN and the Microsoft technical reference.

If you are using the Java edition, note that TLS 1.2 is enabled by default in Java 8 but not in Java 6 or 7. If you are using these earlier versions, refer to the Oracle how-to.
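Some checklist items can be tested from a machine outside your network with the Python standard library. The sketch below checks whether an address is publicly routable; run it against your server's public IP. It also reports the TLS version the server negotiates.

The connection verifies the certificate against the system's trusted CAs, so a self-signed or internal-CA certificate fails the handshake, much as it would for Salesforce. It is a diagnostic aid under these assumptions, not a reproduction of Salesforce's own checks. The script name and host in the usage comment are placeholders.

```python
import ipaddress
import socket
import ssl
import sys

# Protocol names as returned by SSLSocket.version(), oldest first.
TLS_ORDER = ["SSLv3", "TLSv1", "TLSv1.1", "TLSv1.2", "TLSv1.3"]


def is_publicly_routable(address):
    """True if the IP address is globally routable (not private, loopback, etc.)."""
    return ipaddress.ip_address(address).is_global


def meets_minimum(negotiated, minimum="TLSv1.1"):
    """Compare protocol names such as 'TLSv1.2' against a minimum version."""
    return TLS_ORDER.index(negotiated) >= TLS_ORDER.index(minimum)


def negotiated_tls_version(host, port, timeout=10.0):
    """Open a TLS connection to host:port and return the negotiated version.

    create_default_context() verifies the certificate chain and host name
    against the system's trusted CAs, so a self-signed or internal-CA
    certificate raises ssl.SSLCertVerificationError. A blocked firewall port
    shows up as a timeout or connection error instead. Depending on the
    Python and OpenSSL versions, a server limited to TLS 1.1 may fail the
    handshake here rather than report "TLSv1.1".
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python check_server.py your-server.example.com 8443
    host, port = sys.argv[1], int(sys.argv[2])
    version = negotiated_tls_version(host, port)
    print(version, "OK" if meets_minimum(version) else "older than TLS 1.1")
```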