Automated Continuous Azure Data Lake Storage Replication to Apache Kafka

Use CData Sync for automated, continuous, customizable Azure Data Lake Storage replication to Apache Kafka.

Always-on applications rely on automatic failover capabilities and real-time data access. CData Sync integrates live Azure Data Lake Storage data into your Apache Kafka instance, allowing you to consolidate all of your data into a single location for archiving, reporting, analytics, machine learning, artificial intelligence and more.

Configure Apache Kafka as a Replication Destination

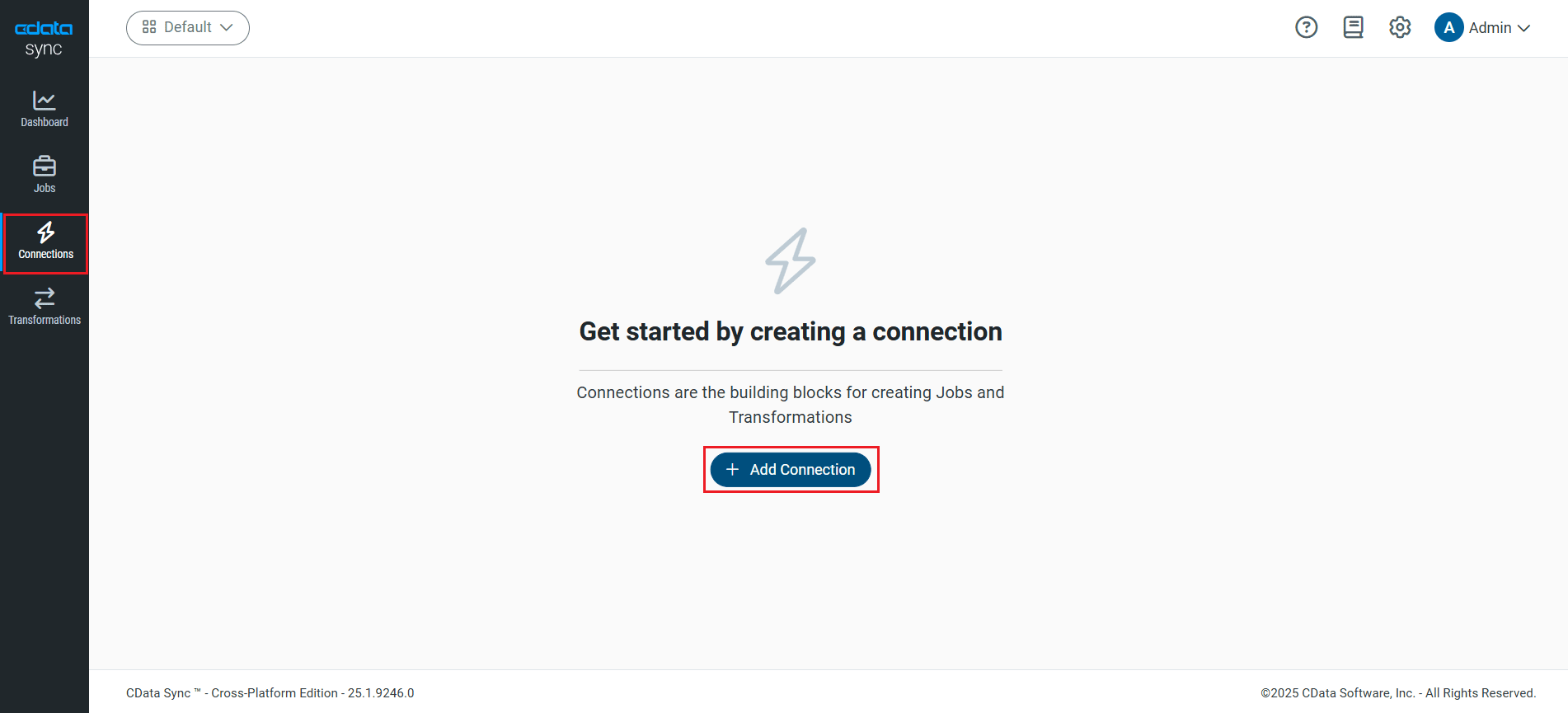

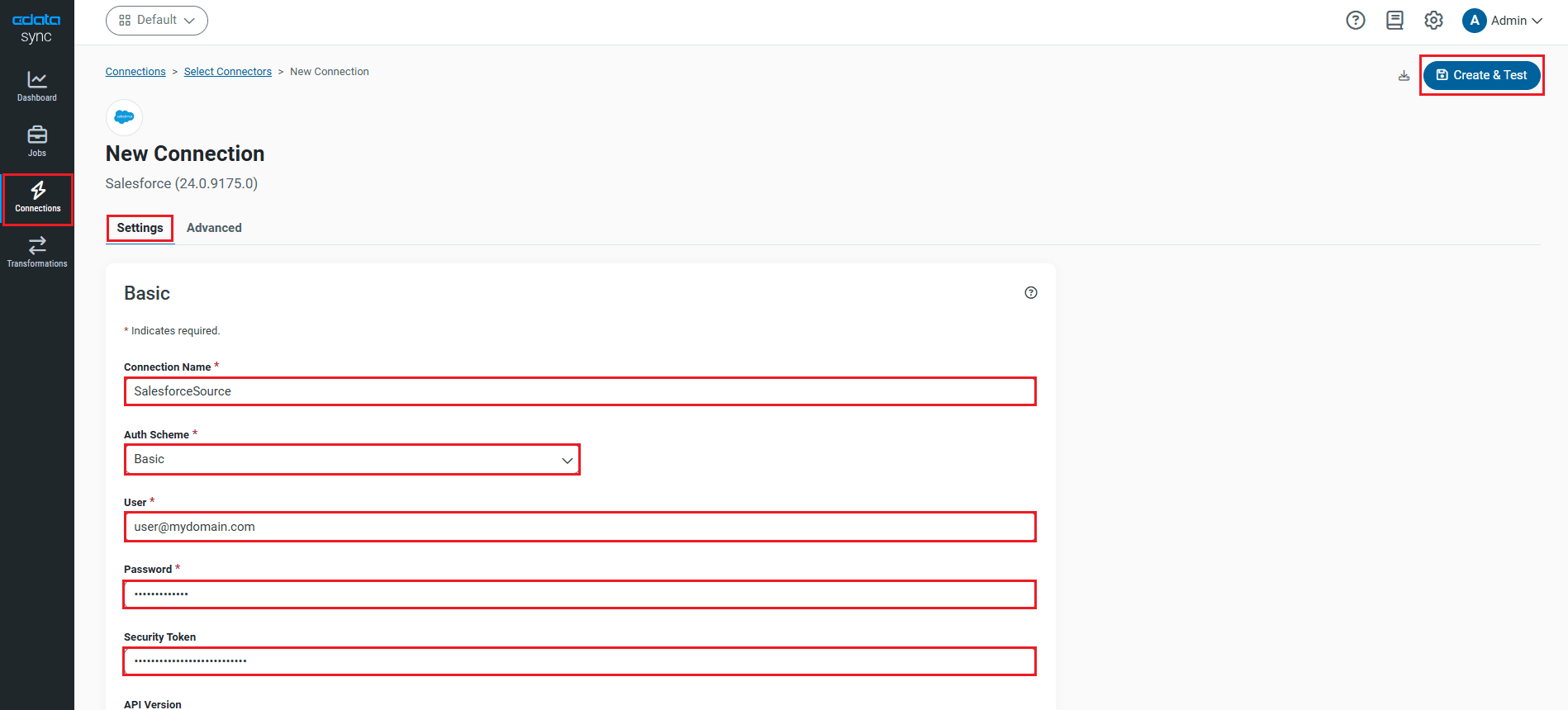

Using CData Sync, you can replicate Azure Data Lake Storage data to Apache Kafka. To add a replication destination, navigate to the Connections tab.

- Click Add Connection.

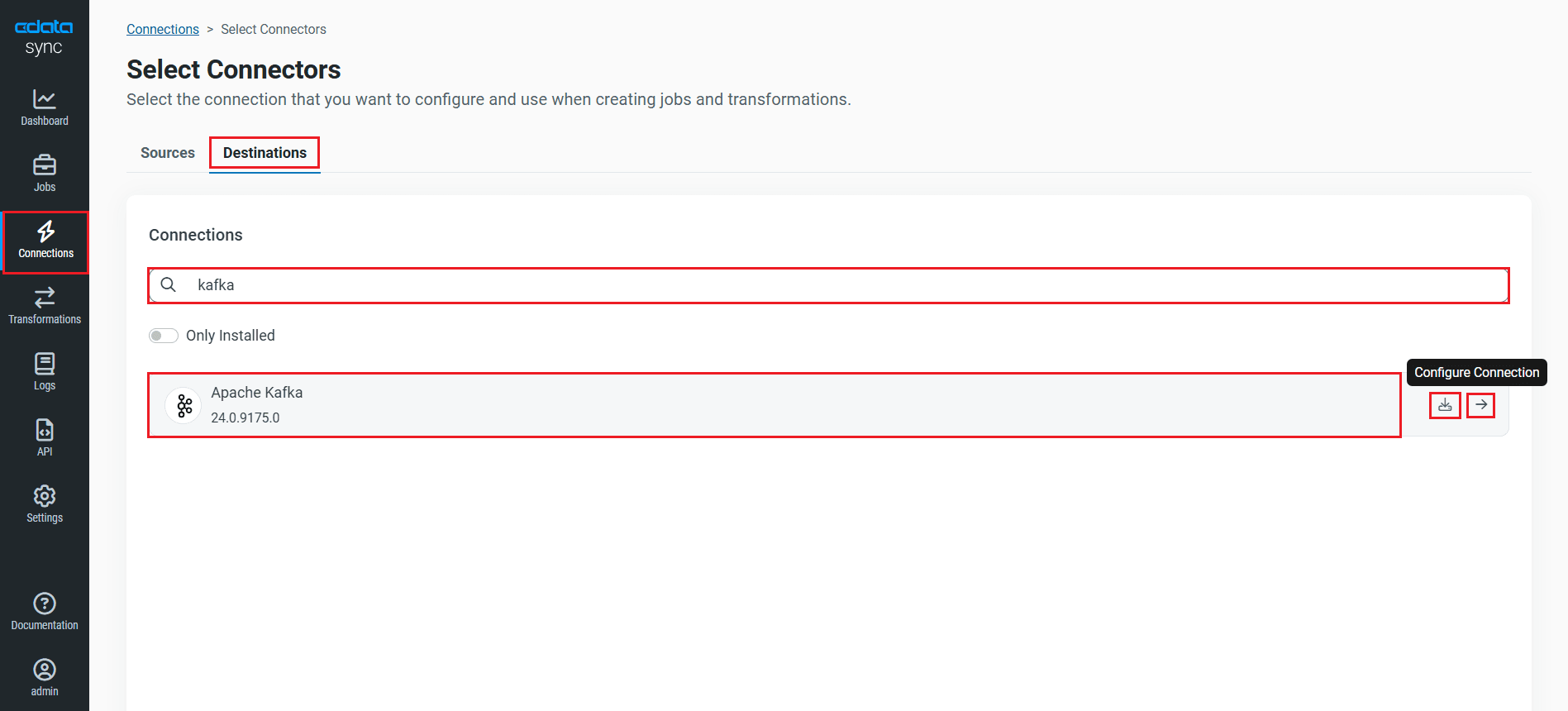

- Select the Destinations tab and locate the Apache Kafka connector.

- Click the Configure Connection icon at the end of that row to open the New Connection page. If the Configure Connection icon is not available, click the Download Connector icon to install the Apache Kafka connector. For more information about installing new connectors, see Connections in the Help documentation.

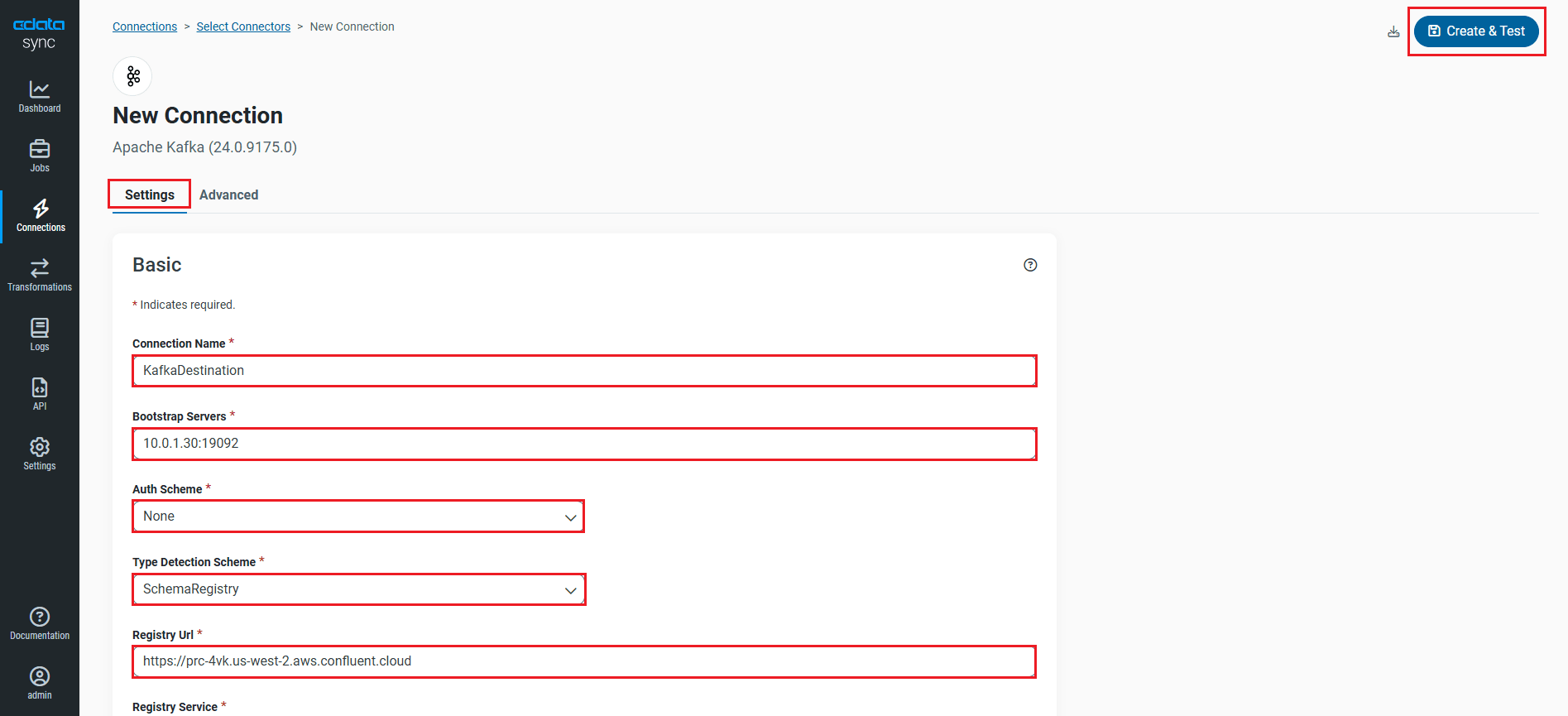

- To connect to Apache Kafka, set the following connection properties:

    - Connection Name: Enter a name of your choice for the Apache Kafka connection.

    - Bootstrap Servers: Enter the address of the Apache Kafka bootstrap servers to which you want to connect.

    - Auth Scheme: Select the authentication scheme. Plain is the default. For this scheme, specify your login credentials:

        - User: Enter the username that you use to authenticate to Apache Kafka.

        - Password: Enter the password that you use to authenticate to Apache Kafka.

    - Type Detection Scheme: Specify how fields and their data types are detected (None, RowScan, SchemaRegistry, or MessageOnly). The default is None.

    - Registry Url: Enter the URL of the schema registry server.

    - Registry Service: Select the Schema Registry service that you want to use for working with topic schemas.

    - Registry Auth Scheme: Select the scheme that you want to use to authenticate to the schema registry.

    - Use SSL: Specify whether you want to use the Secure Sockets Layer (SSL) protocol. The default value is False.

- After you set these properties, click Create & Test to create, test, and save the connection.
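The properties above are entered through the UI, but it can help to see them together as a single connection string. The following is a minimal Python sketch, assuming the semicolon-separated `Property=Value;` format used by CData connection strings and property names that mirror the UI labels without spaces (for example, `BootstrapServers`). The `build_kafka_connection_string` helper is hypothetical, the rule that SchemaRegistry detection needs a Registry Url is an assumption, and none of this is a CData Sync API.

```python
# Hypothetical helper: CData Sync collects these values through its UI.
# Property names and the Property=Value; format are assumptions modeled on
# CData connection strings.
VALID_TYPE_DETECTION = {"None", "RowScan", "SchemaRegistry", "MessageOnly"}


def build_kafka_connection_string(props: dict[str, str]) -> str:
    """Apply the defaults described above, check a few rules, and join the pairs."""
    props = dict(props)  # copy so the caller's dict is not modified

    if not props.get("BootstrapServers"):
        raise ValueError("BootstrapServers is required")

    # Plain is the default auth scheme and needs a username and password.
    if props.setdefault("AuthScheme", "Plain") == "Plain":
        if not (props.get("User") and props.get("Password")):
            raise ValueError("Plain authentication requires User and Password")

    scheme = props.setdefault("TypeDetectionScheme", "None")
    if scheme not in VALID_TYPE_DETECTION:
        raise ValueError(f"unknown TypeDetectionScheme: {scheme}")
    # Assumption: schema-registry detection is useless without a registry URL.
    if scheme == "SchemaRegistry" and not props.get("RegistryUrl"):
        raise ValueError("SchemaRegistry detection needs a RegistryUrl")

    props.setdefault("UseSSL", "False")  # SSL is off by default
    # Values containing ';' would need quoting; that is not handled here.
    return "".join(f"{key}={value};" for key, value in props.items())


print(build_kafka_connection_string({
    "BootstrapServers": "broker1:9092",
    "User": "sync_user",
    "Password": "secret",
}))
# BootstrapServers=broker1:9092;User=sync_user;Password=secret;AuthScheme=Plain;TypeDetectionScheme=None;UseSSL=False;
```

Defaults are appended after the values you supply, which is why AuthScheme, TypeDetectionScheme, and UseSSL appear at the end of the output.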

You are now connected to Apache Kafka and can use it as both a source and a destination.

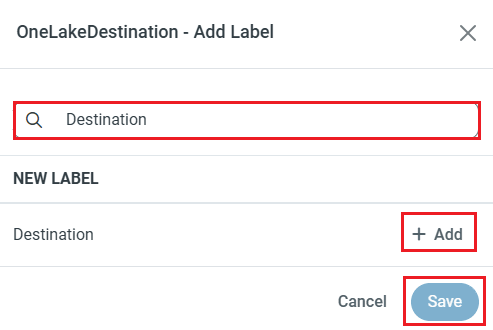

NOTE: You can use the Label feature to add a label for a source or a destination.

In this article, we demonstrate how to load Azure Data Lake Storage data into Apache Kafka, using Kafka as the replication destination.

Configure the Azure Data Lake Storage Connection

To add a connection to your Azure Data Lake Storage account, navigate to the Connections tab.

- Click Add Connection.

- Select Azure Data Lake Storage as the source.

- Configure the connection properties.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Entra ID (formerly Azure AD) for authentication.

For this, an Entra ID web application (app registration) is required.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application (client) ID of the app you created.

- OAuthClientSecret: Set this to the client secret (key) generated for the app you created.

- TenantId: Set this to the tenant ID. See the TenantId property documentation for details on how to obtain it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
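CData Sync performs the OAuth 2.0 exchange for you, but the sketch below shows where OAuthClientId, OAuthClientSecret, and TenantId end up in a standard Entra ID client-credentials token request. It assumes the v1.0 token endpoint (`https://login.microsoftonline.com/{tenant}/oauth2/token`) and the Data Lake Storage Gen1 resource URI `https://datalake.azure.net/`; the `build_token_request` helper is hypothetical, the request is built but never sent, and the tenant, client ID, and secret values are placeholders.

```python
# Illustration only: CData Sync handles this token exchange internally.
# Assumption: the Entra ID v1.0 endpoint and the Gen1 resource URI are used.
from urllib.parse import parse_qs, urlencode
from urllib.request import Request

GEN1_RESOURCE = "https://datalake.azure.net/"


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Return an unsent POST request for an OAuth 2.0 client-credentials token."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/token"
    # Form-encode the standard client-credentials fields.
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,          # OAuthClientId
        "client_secret": client_secret,  # OAuthClientSecret
        "resource": GEN1_RESOURCE,
    }).encode("ascii")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


# Placeholder values; urllib.request.urlopen(request) would actually send it.
request = build_token_request("my-tenant-id", "my-client-id", "my-client-secret")
print(request.full_url)
print(parse_qs(request.data.decode("ascii"))["resource"])
```

If sent, the endpoint returns a JSON body whose `access_token` field is then presented as a Bearer token on Data Lake Storage calls.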

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key used to authenticate calls to the API. See the AccessKey property documentation for details on how to obtain it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

- Click Connect to Azure Data Lake Storage to ensure that the connection is configured properly.

- Click Save & Test to save the changes.
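For Gen 2, the AccessKey is an Azure Storage account key. Assuming the connector uses the Azure Storage Shared Key scheme, each request is signed roughly as sketched below: the Base64-encoded key is decoded, used as an HMAC-SHA256 key over a canonical string-to-sign, and the Base64 result is placed in the `Authorization` header. Building the canonical string-to-sign (HTTP verb, selected headers, canonicalized resource) is out of scope, so `string_to_sign` is a caller-supplied placeholder; the `shared_key_header` helper and the key in the usage lines are made up.

```python
# Illustration only: CData Sync signs requests internally.
# Assumption: Gen 2 access-key authentication uses Azure Storage Shared Key.
import base64
import hashlib
import hmac


def shared_key_header(account: str, access_key_b64: str, string_to_sign: str) -> str:
    """Return an Authorization header value for one request."""
    key = base64.b64decode(access_key_b64)  # account keys are Base64 strings
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"SharedKey {account}:{signature}"


# Made-up key; real account keys come from the storage account's settings.
fake_key = base64.b64encode(b"not-a-real-account-key").decode("ascii")
print(shared_key_header("myaccount", fake_key, "placeholder string-to-sign"))
```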

Configure Replication Queries

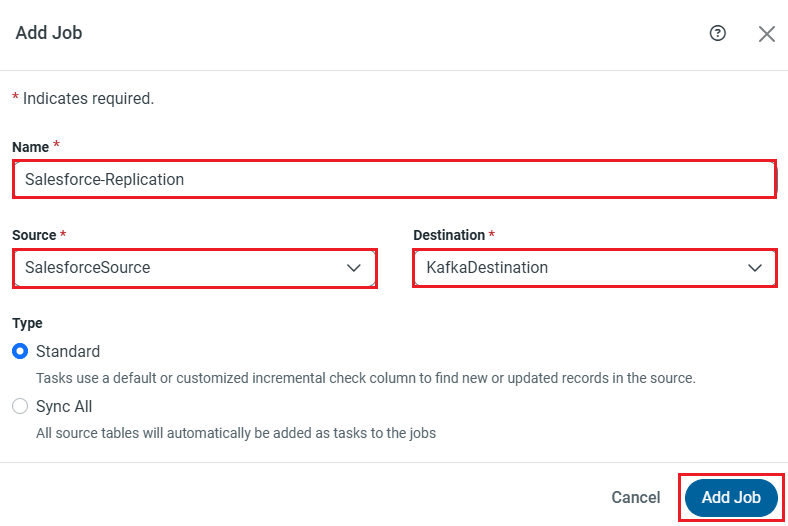

CData Sync enables you to control replication with a point-and-click interface and with SQL queries. For each replication you wish to configure, navigate to the Jobs tab and click Add Job. Select the Source and Destination for your replication.

Replicate Entire Tables

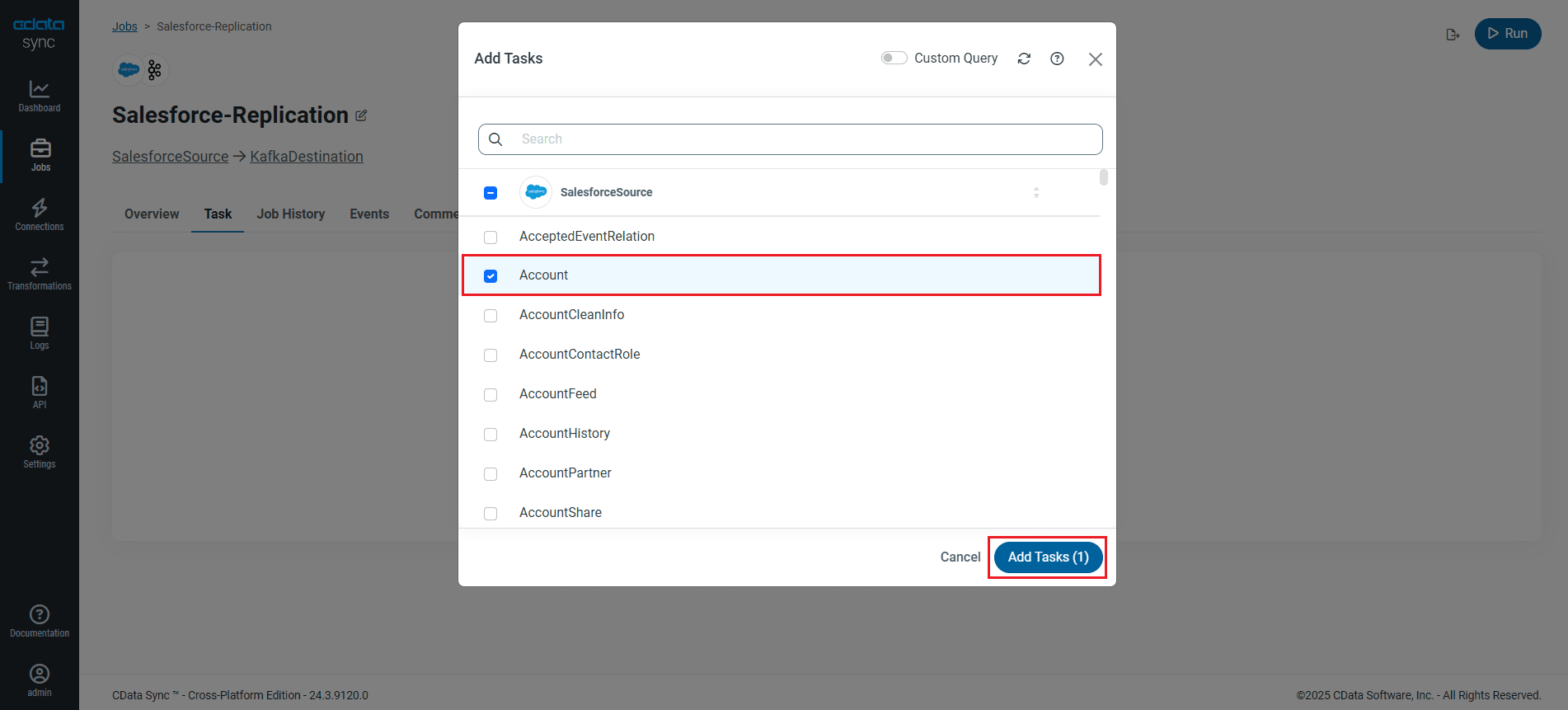

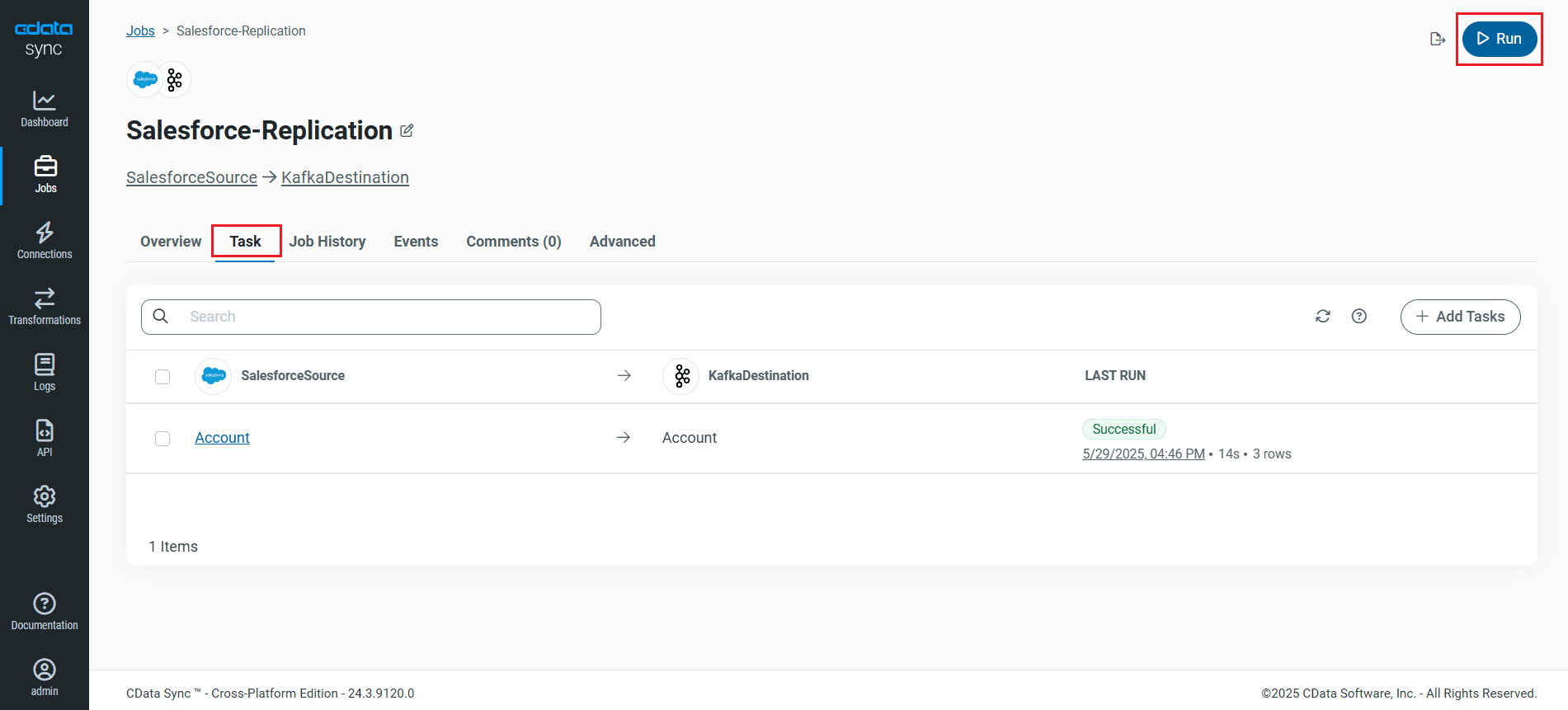

To replicate an entire table, navigate to the Tasks tab in the Job, click Add Tasks, choose the Azure Data Lake Storage table(s) you wish to replicate into Apache Kafka, and click Add Tasks again.

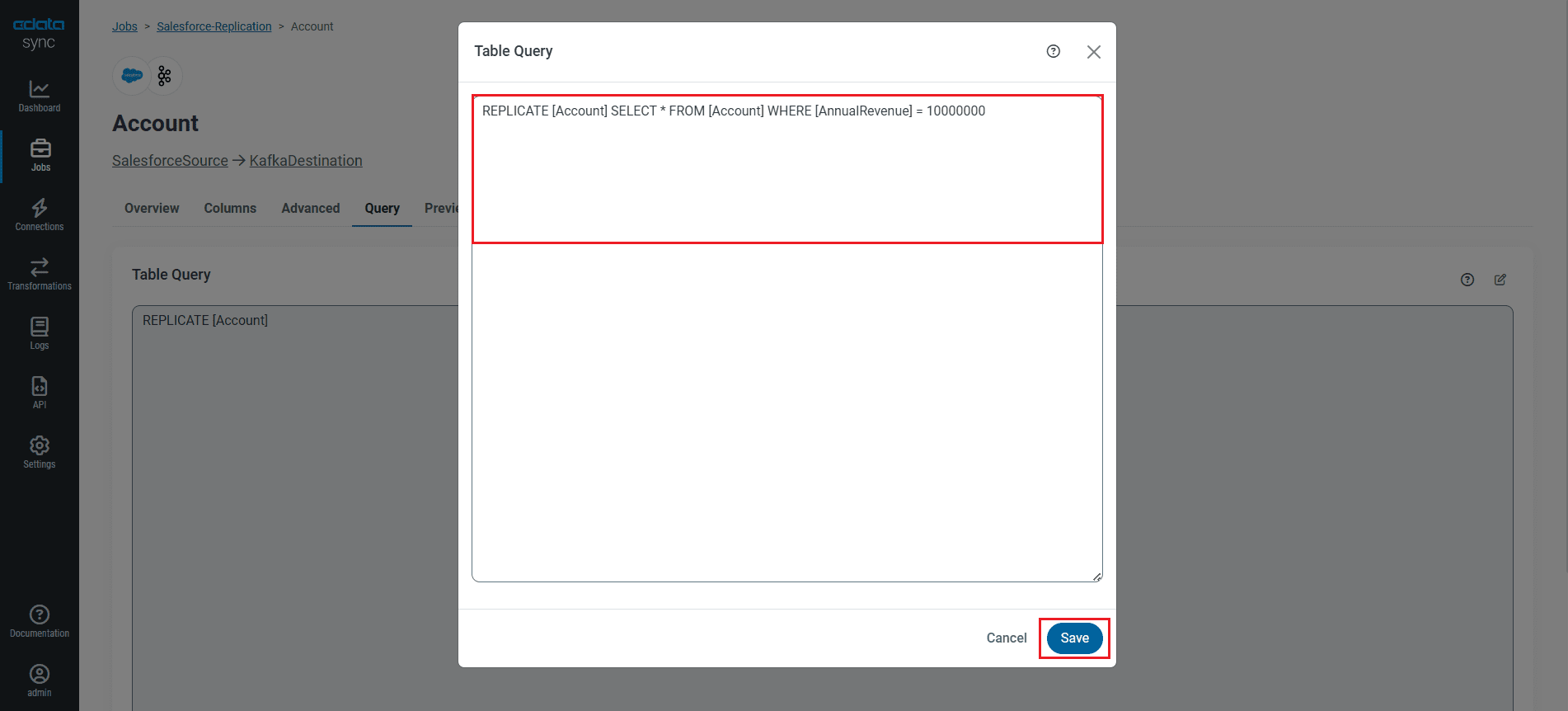

Customize Your Replication

You can use the Columns and Query tabs of a task to customize your replication. The Columns tab allows you to specify which columns to replicate, rename the columns at the destination, and even perform operations on the source data before replicating. The Query tab allows you to add filters, grouping, and sorting to the replication with the help of SQL queries.
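Conceptually, the Columns and Query tabs reshape each source row before it is written to Apache Kafka, much like `SELECT ... WHERE ... ORDER BY` in SQL. The Python sketch below only illustrates that idea and is not how CData Sync is implemented; the `customize` function, the sample rows, and the column names are hypothetical, and grouping is left out for brevity.

```python
# Conceptual model of task customization; all names here are hypothetical.
from typing import Callable, Dict, Iterable, List, Optional

Row = Dict[str, object]


def customize(
    rows: Iterable[Row],
    columns: Dict[str, str],  # source column -> destination column
    transforms: Optional[Dict[str, Callable[[object], object]]] = None,
    where: Optional[Callable[[Row], bool]] = None,
    order_by: Optional[str] = None,  # destination column name
) -> List[Row]:
    transforms = transforms or {}
    result: List[Row] = []
    for row in rows:
        # Query tab: filter on the source row (like a WHERE clause).
        if where is not None and not where(row):
            continue
        out: Row = {}
        # Columns tab: keep selected columns, transform, and rename them.
        for src, dest in columns.items():
            value = row[src]
            if src in transforms:
                value = transforms[src](value)
            out[dest] = value
        result.append(out)
    # Query tab: sort the output (like ORDER BY).
    if order_by is not None:
        result.sort(key=lambda r: r[order_by])
    return result


files = [
    {"Name": "b.csv", "Size": 2048, "Type": "FILE"},
    {"Name": "logs", "Size": 0, "Type": "DIRECTORY"},
    {"Name": "a.csv", "Size": 1024, "Type": "FILE"},
]
print(customize(
    files,
    columns={"Name": "file_name", "Size": "size_kb"},
    transforms={"Size": lambda b: b / 1024},
    where=lambda r: r["Type"] == "FILE",
    order_by="file_name",
))
# [{'file_name': 'a.csv', 'size_kb': 1.0}, {'file_name': 'b.csv', 'size_kb': 2.0}]
```

The filter runs against source column names and the sort against destination names, mirroring the order in which a SQL engine evaluates WHERE before ORDER BY on aliased output.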

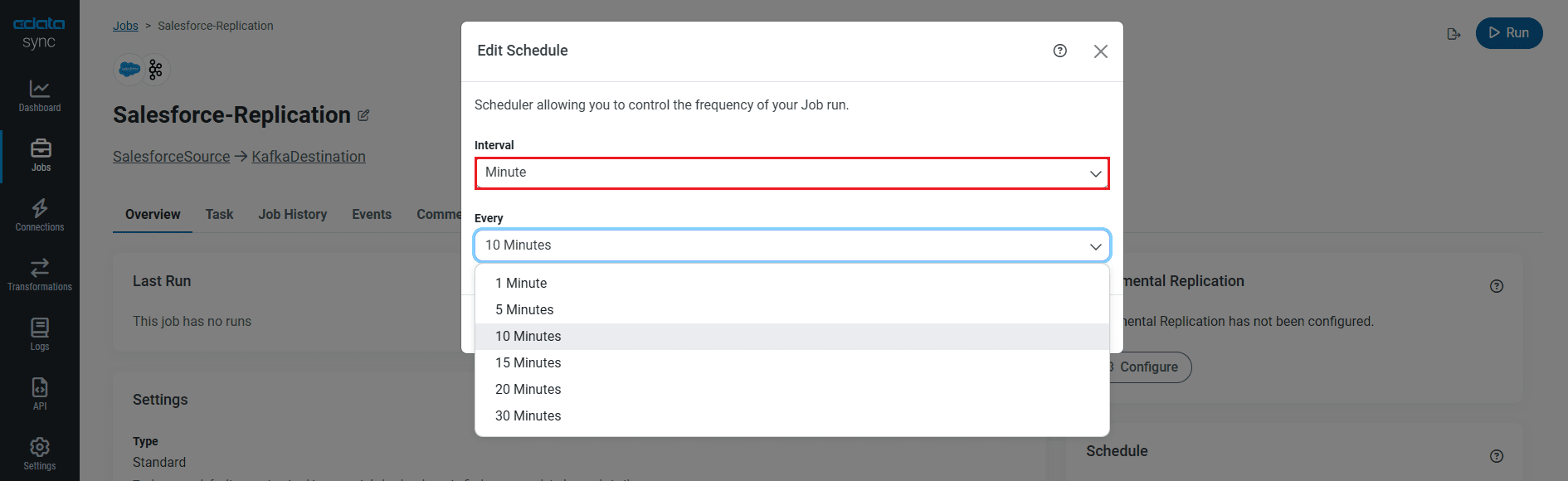

Schedule Your Replication

Select the Overview tab in the Job, and click Configure under Schedule. You can schedule a job to run automatically by configuring it to run at specified intervals, ranging from once every 10 minutes to once every month.
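An interval schedule amounts to adding a fixed step to a start time. The sketch below lists upcoming run times and rejects steps shorter than the 10-minute minimum described above; the `next_runs` and `add_months` helpers, the unit names, and the rule of clamping monthly runs to the last day of shorter months are illustrative assumptions, not documented CData Sync behavior.

```python
# Hypothetical scheduler model; not CData Sync's implementation.
import calendar
from datetime import datetime, timedelta

MIN_INTERVAL = timedelta(minutes=10)
UNITS = {
    "minutes": timedelta(minutes=1),
    "hours": timedelta(hours=1),
    "days": timedelta(days=1),
}


def add_months(moment: datetime, months: int) -> datetime:
    """Advance by calendar months, clamping the day (e.g. Jan 31 -> Feb 29 in 2024)."""
    index = moment.month - 1 + months
    year, month = moment.year + index // 12, index % 12 + 1
    day = min(moment.day, calendar.monthrange(year, month)[1])
    return moment.replace(year=year, month=month, day=day)


def next_runs(start: datetime, every: int, unit: str, count: int = 3) -> list[datetime]:
    """Return the next `count` run times after `start`."""
    if unit == "months":
        if every < 1:
            raise ValueError("interval must be at least one month")
        # Offset from `start` each time so short months do not cause drift.
        return [add_months(start, every * n) for n in range(1, count + 1)]
    if unit not in UNITS:
        raise ValueError(f"unsupported unit: {unit}")
    step = UNITS[unit] * every
    if step < MIN_INTERVAL:
        raise ValueError("the shortest supported interval is 10 minutes")
    return [start + step * n for n in range(1, count + 1)]


jan31 = datetime(2024, 1, 31, 2, 0)
print([run.isoformat() for run in next_runs(jan31, 1, "months")])
# ['2024-02-29T02:00:00', '2024-03-31T02:00:00', '2024-04-30T02:00:00']
```

Computing each monthly run from the original start date, rather than from the previous run, is what lets the March run return to the 31st instead of staying on the 29th.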

Once you have configured the replication job, click Save Changes. You can configure any number of jobs to manage the replication of your Azure Data Lake Storage data to Apache Kafka.

Run the Replication Job

Once the job is fully configured, select the Azure Data Lake Storage table you wish to replicate and click Run. When the replication completes successfully, a notification appears showing the time taken to run the job and the number of rows replicated.

Free Trial & More Information

Now that you have seen how to replicate Azure Data Lake Storage data into Apache Kafka, visit our CData Sync page to explore more about CData Sync and download a free 30-day trial. Start consolidating your enterprise data today!

As always, our world-class Support Team is ready to answer any questions you may have.