Consume Databricks OData Feeds in SAP Lumira

Use the API Server to build SAP Lumira visualizations on Databricks feeds that reflect changes to the underlying data.

You can use the CData API Server to create data visualizations in SAP Lumira based on Databricks data. The API Server provides connectivity to live data, so dashboards and reports can be refreshed on demand. This article shows how to create a chart that shows the current Databricks data each time it is refreshed.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks, including Runtime versions 9.1 through 13.x and both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Authenticate securely in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, several customers use our live connectivity solutions to federate connectivity between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMSs.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Set Up the API Server

If you have not already done so, download the CData API Server. Once you have installed the API Server, follow the steps below to begin producing secure Databricks OData services:

Connect to Databricks

To work with Databricks data from SAP Lumira, we start by creating and configuring a Databricks connection. Follow the steps below to configure the API Server to connect to Databricks data:

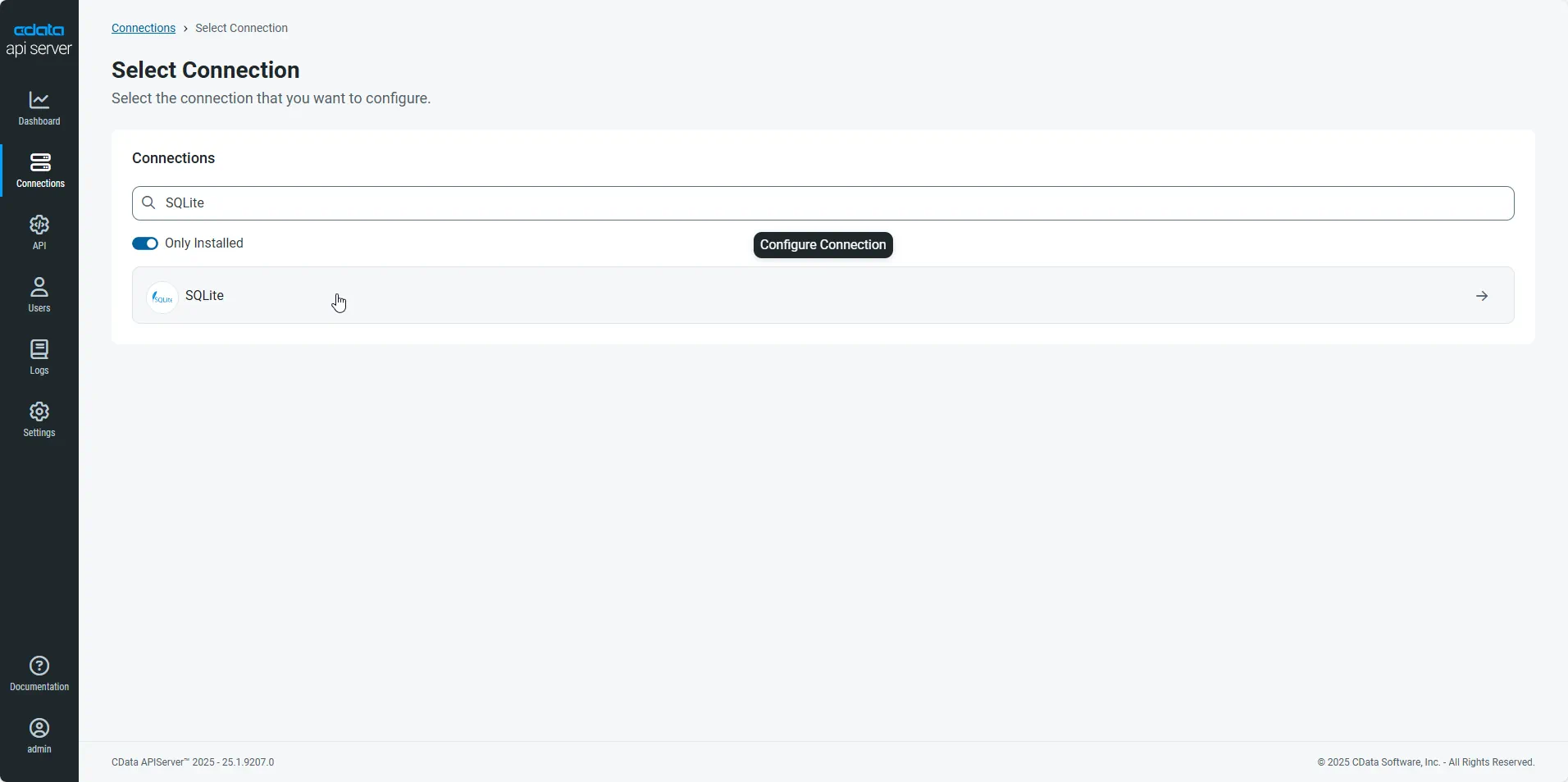

- First, navigate to the Connections page.

-

Click Add Connection and then search for and select the Databricks connection.

-

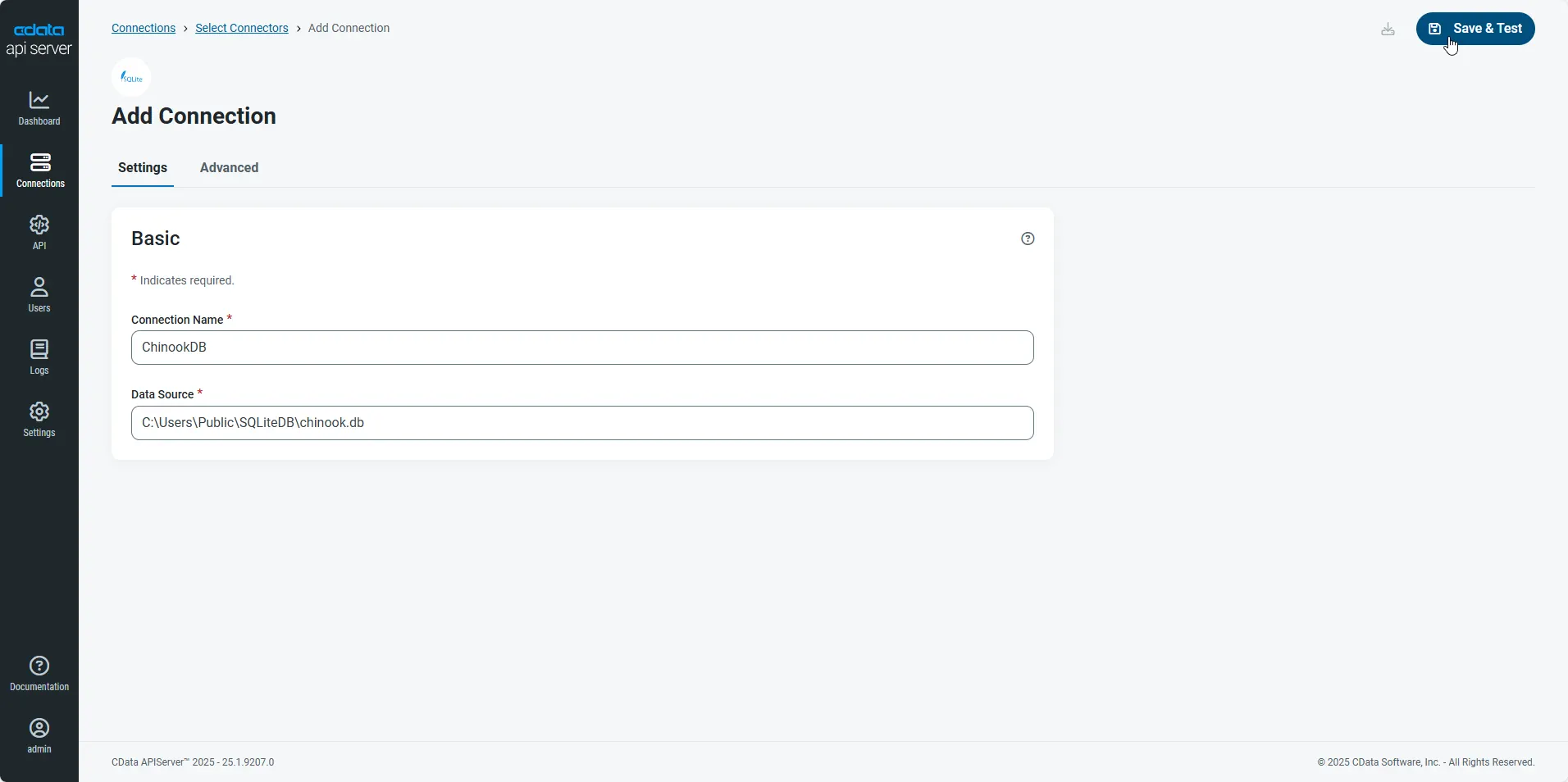

Enter the necessary authentication properties to connect to Databricks.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find the needed values in your Databricks instance by navigating to Clusters, selecting the desired cluster, and selecting the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

- After configuring the connection, click Save & Test to confirm a successful connection.
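If you want to sanity-check these values before entering them, the short Python sketch below gathers Server, HTTPPath, and Token, rejects empty values or a Server value that still includes a URL scheme, and joins them into a semicolon-delimited `Name=Value;` string, the shape CData connection strings generally follow. The helper name `build_connection_string` and all placeholder values are hypothetical; the API Server itself only needs the values typed into its connection form.

```python
from typing import Dict

# The three properties this article says the Databricks connection needs.
REQUIRED = ("Server", "HTTPPath", "Token")

# Hypothetical placeholders: copy the real values from the cluster's
# JDBC/ODBC tab and from User Settings > Access Tokens.
EXAMPLE_PROPS = {
    "Server": "your-workspace.cloud.databricks.com",
    "HTTPPath": "your/http/path",
    "Token": "your-personal-access-token",
}


def build_connection_string(props: Dict[str, str]) -> str:
    """Validate the Databricks properties and join them as Name=Value; pairs."""
    missing = [name for name in REQUIRED if not props.get(name, "").strip()]
    if missing:
        raise ValueError(f"missing connection properties: {', '.join(missing)}")
    # The Server Hostname on the JDBC/ODBC tab is a bare host name,
    # so reject values that still carry a URL scheme such as https://.
    if "://" in props["Server"]:
        raise ValueError("Server must be a host name, not a URL")
    return "".join(f"{name}={props[name].strip()};" for name in REQUIRED)
```

Avoid logging the returned string, since it contains your personal access token.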

Configure API Server Users

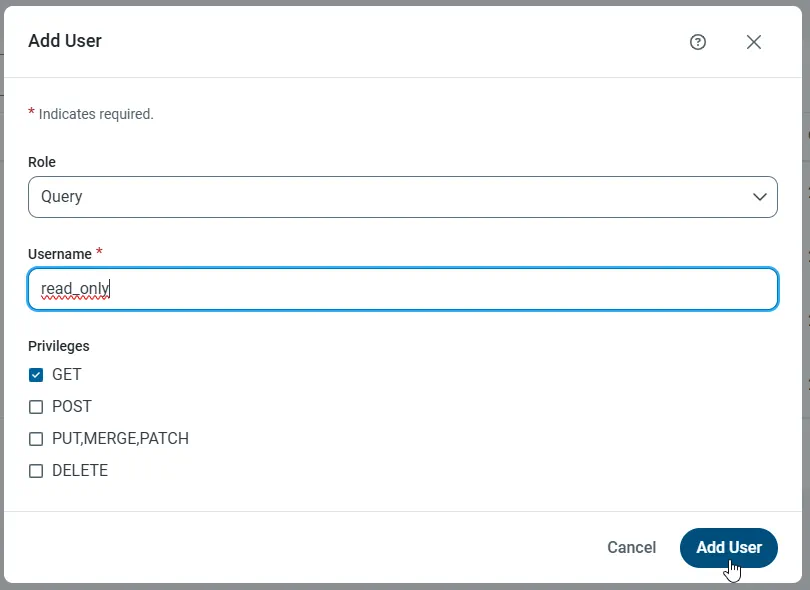

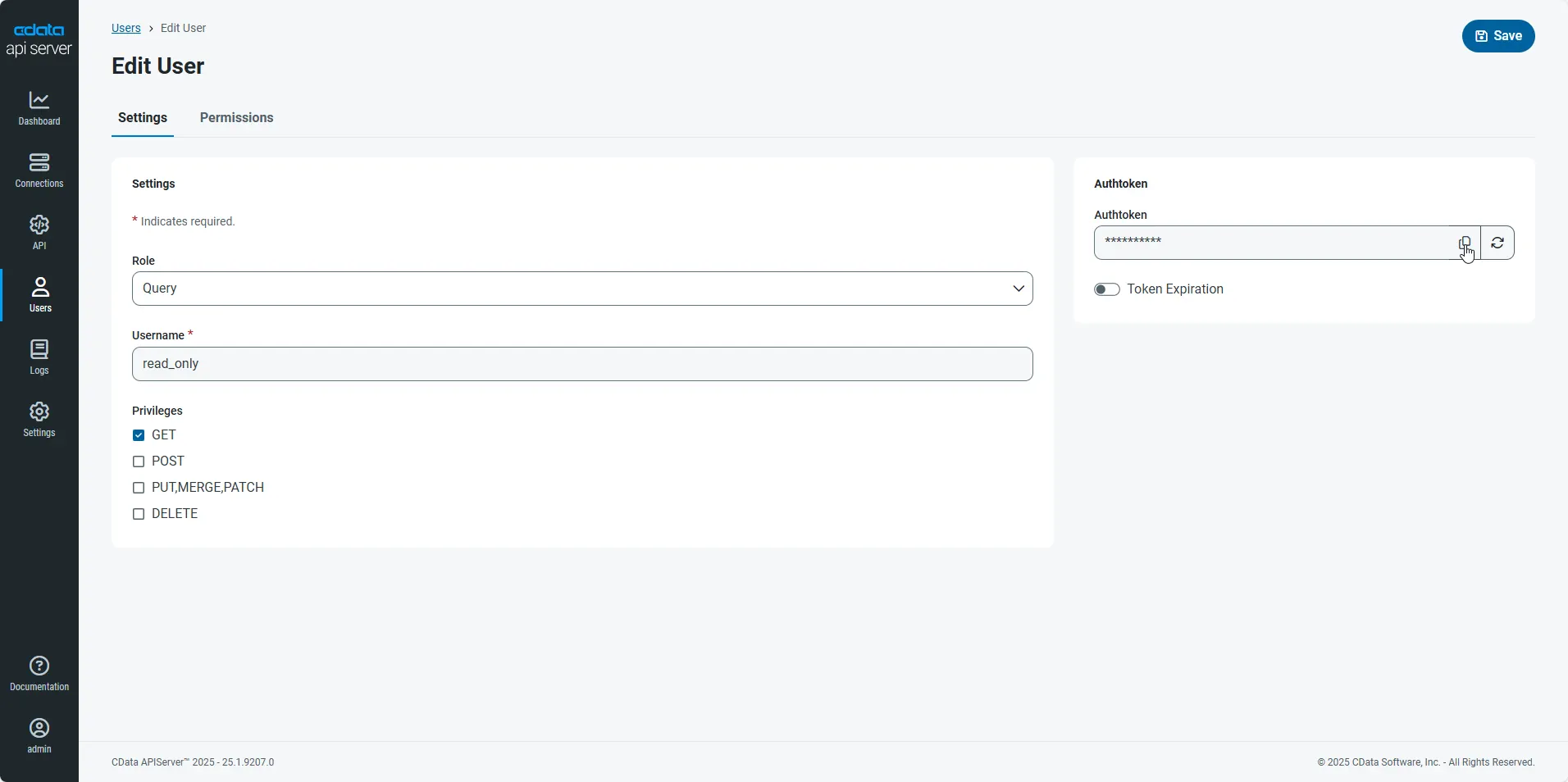

Next, create a user to access your Databricks data through the API Server. You can add and configure users on the Users page. Follow the steps below to configure and create a user:

- On the Users page, click Add User to open the Add User dialog.

- Next, set the Role, Username, and Privileges properties and then click Add User.

- An Authtoken is then generated for the user. You can find the Authtoken and other information for each user on the Users page.

Creating API Endpoints for Databricks

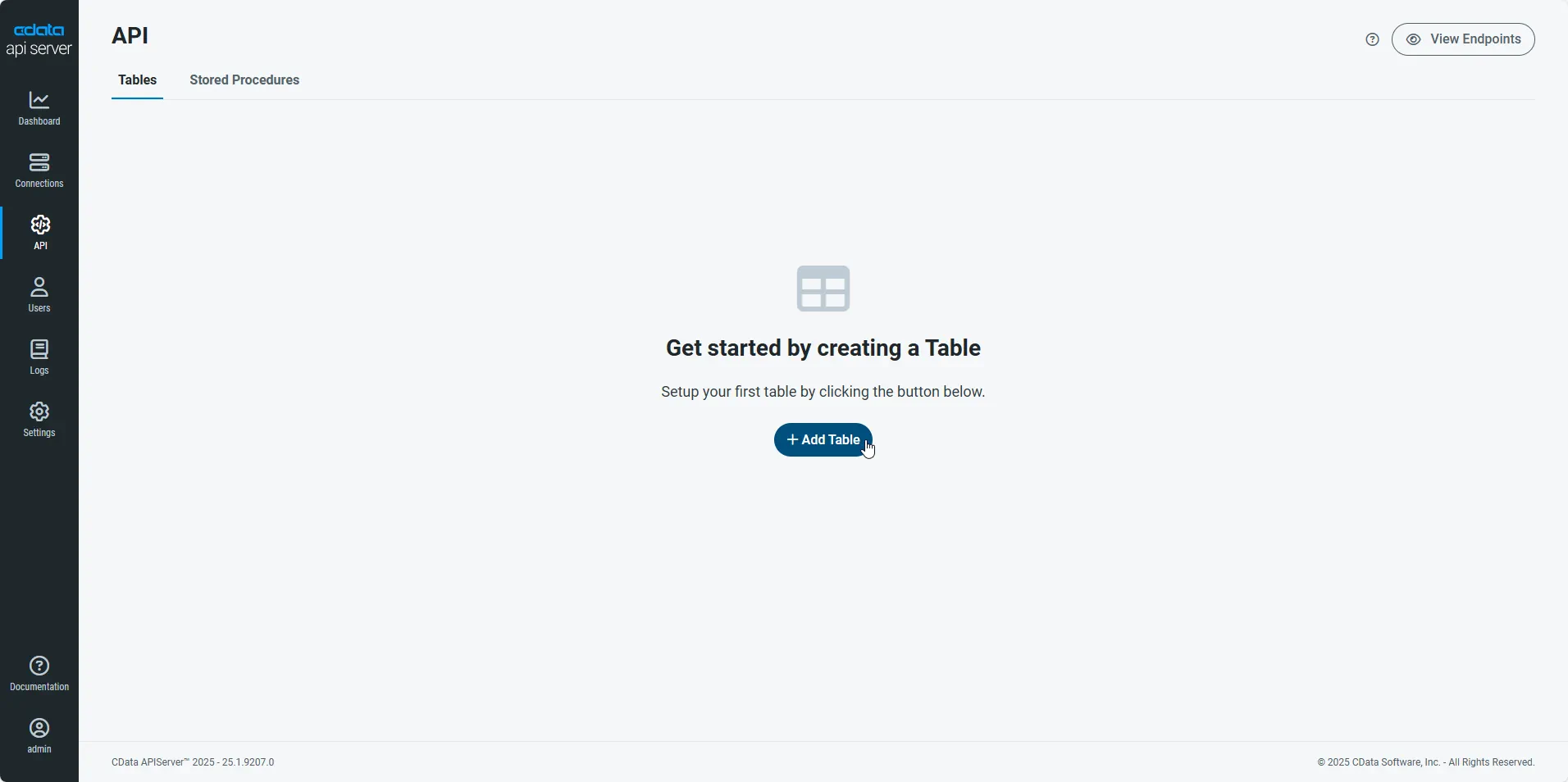

Having created a user, you are ready to create API endpoints for the Databricks tables:

-

First, navigate to the API page and then click

Add Table

.

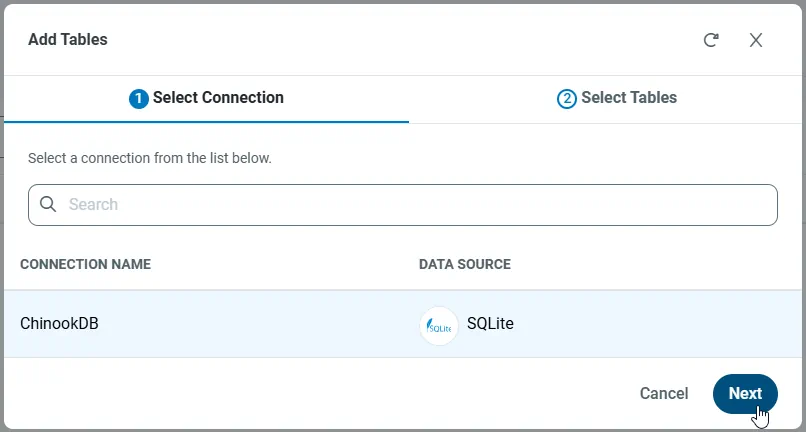

-

Select the connection you wish to access and click Next.

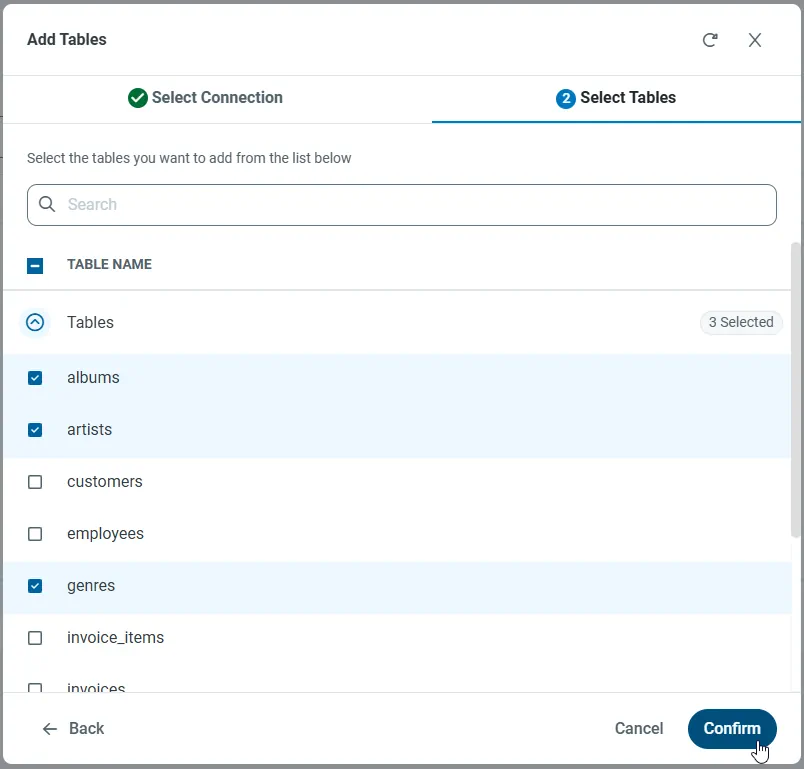

-

With the connection selected, create endpoints by selecting each table and then clicking Confirm.

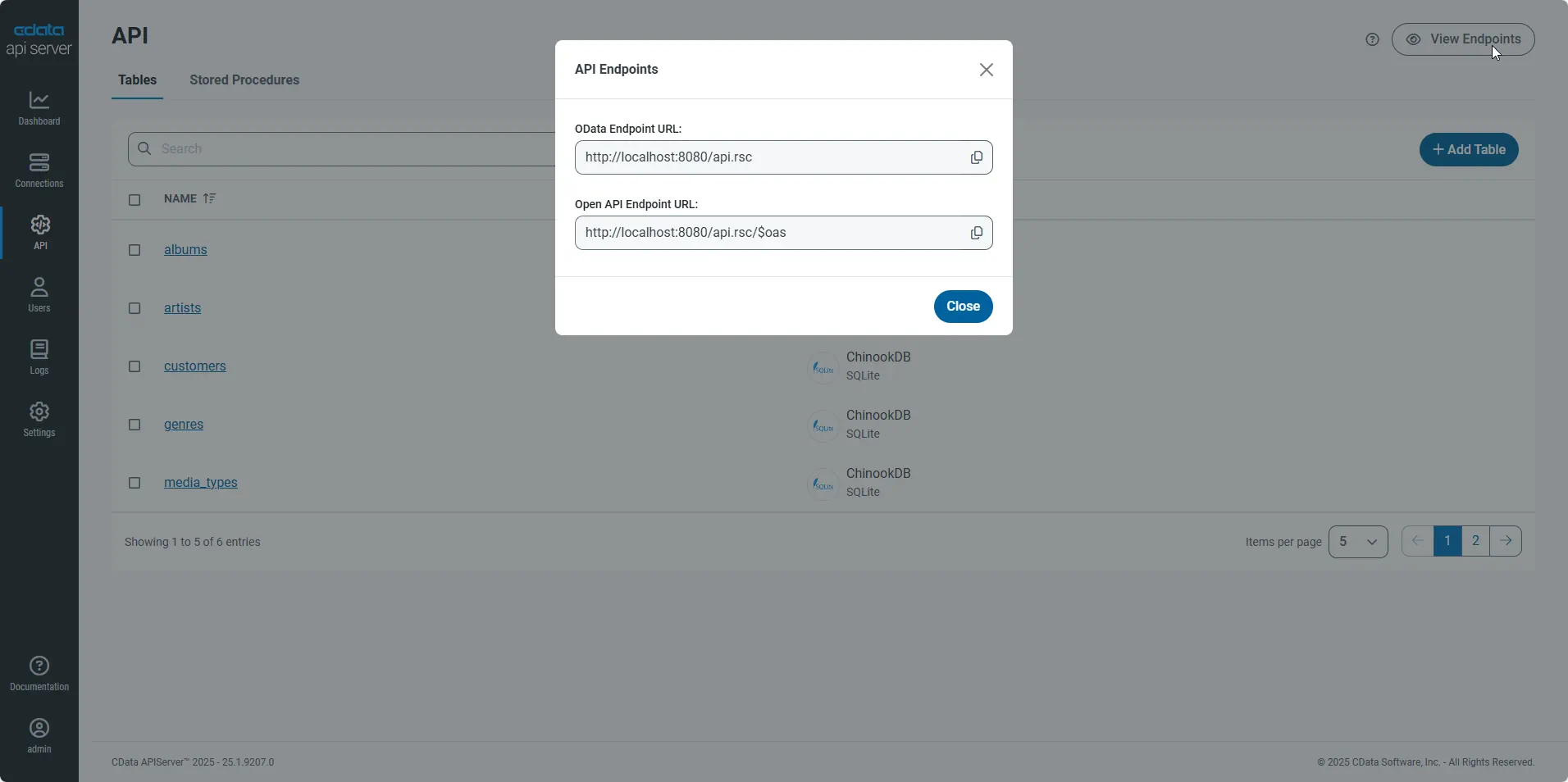

Gather the OData URL

Having configured a connection to Databricks data, created a user, and added resources to the API Server, you now have an easily accessible REST API based on the OData protocol for those resources. From the API page in the API Server, you can view and copy the API endpoints for the API.
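Each table you add on the API page typically appears as an OData entity set beneath the service root, and, following the OData convention, the service's metadata document is served at `$metadata` relative to that root. The Python sketch below builds these URLs, plus an entity set query using the standard OData system query options `$select`, `$filter`, and `$top`. It assumes the placeholder root `https://your-server:8080/api.rsc` used later in this article and the `Customers` table; the column names `Country` and `City` and the helper names are hypothetical, so check the API Server documentation for which query options your version supports.

```python
from typing import Dict, Optional, Sequence
from urllib.parse import quote, urlencode

# Placeholder service root taken from this article; substitute your own.
SERVICE_ROOT = "https://your-server:8080/api.rsc"


def metadata_url(root: str = SERVICE_ROOT) -> str:
    """URL of the OData metadata document, served at $metadata under the root."""
    return f"{root.rstrip('/')}/$metadata"


def entity_set_url(
    entity_set: str,
    select: Optional[Sequence[str]] = None,
    filter_expr: Optional[str] = None,
    top: Optional[int] = None,
    root: str = SERVICE_ROOT,
) -> str:
    """Build a query URL for one entity set (one table added on the API page)."""
    url = f"{root.rstrip('/')}/{quote(entity_set)}"
    options: Dict[str, str] = {}
    if select:
        options["$select"] = ",".join(select)
    if filter_expr:
        options["$filter"] = filter_expr
    if top is not None:
        options["$top"] = str(top)
    if options:
        # quote (not quote_plus) encodes spaces as %20; "$" and "," stay
        # literal so option names and column lists remain readable.
        url += "?" + urlencode(options, safe="$,", quote_via=quote)
    return url
```

For example, `entity_set_url("Customers", select=["Country", "City"], filter_expr="Country eq 'US'", top=10)` returns `https://your-server:8080/api.rsc/Customers?$select=Country,City&$filter=Country%20eq%20%27US%27&$top=10`.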

Connect to Databricks from SAP Lumira

Follow the steps below to retrieve Databricks data into SAP Lumira. You can execute an SQL query or use the UI.

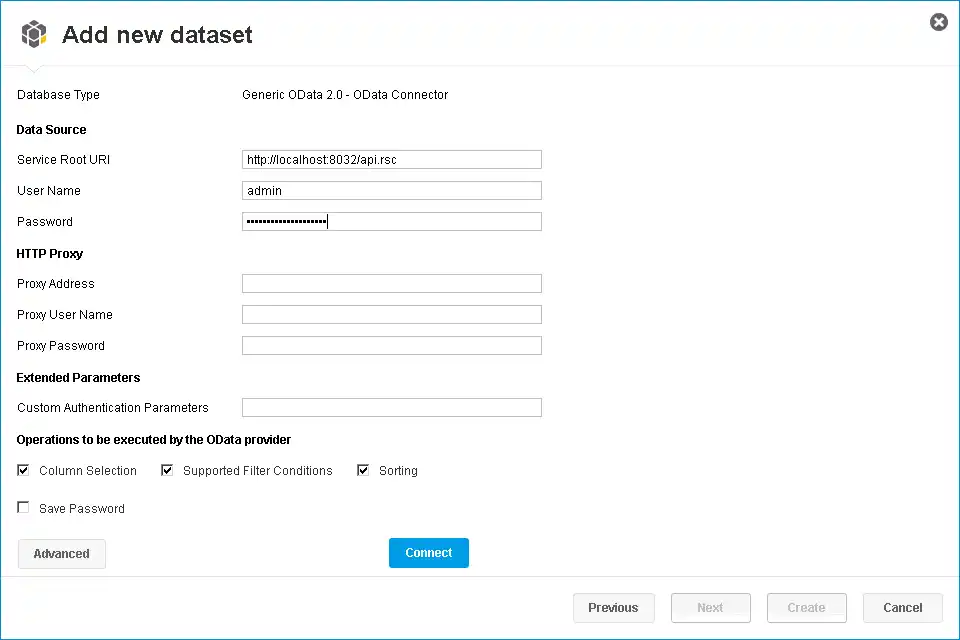

- In SAP Lumira, click File -> New -> Query with SQL. The Add New Dataset dialog is displayed.

- Expand the Generic section and click the Generic OData 2.0 Connector option.

-

In the Service Root URI box, enter the OData endpoint of the API Server. This URL will resemble the following:

https://your-server:8080/api.rsc

-

In the User Name and Password boxes, enter the username and authtoken of an API user. These credentials will be used in HTTP Basic authentication.

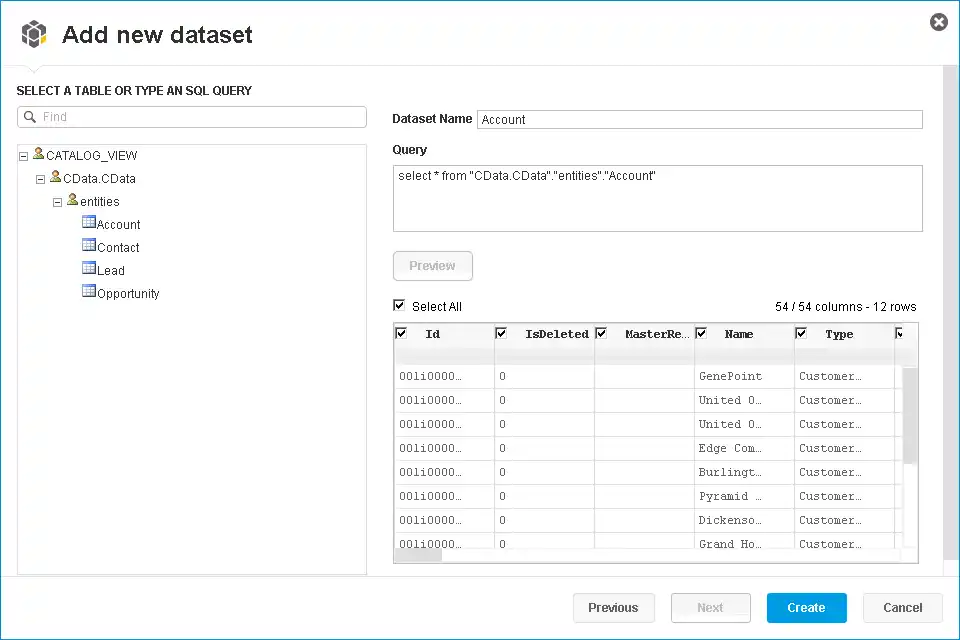

Select entities in the tree or enter an SQL query. This article imports Databricks Customers entities.

-

When you click Connect, SAP Lumira will generate the corresponding OData request and load the results into memory. You can then use any of the data processing tools available in SAP Lumira, such as filters, aggregates, and summary functions.

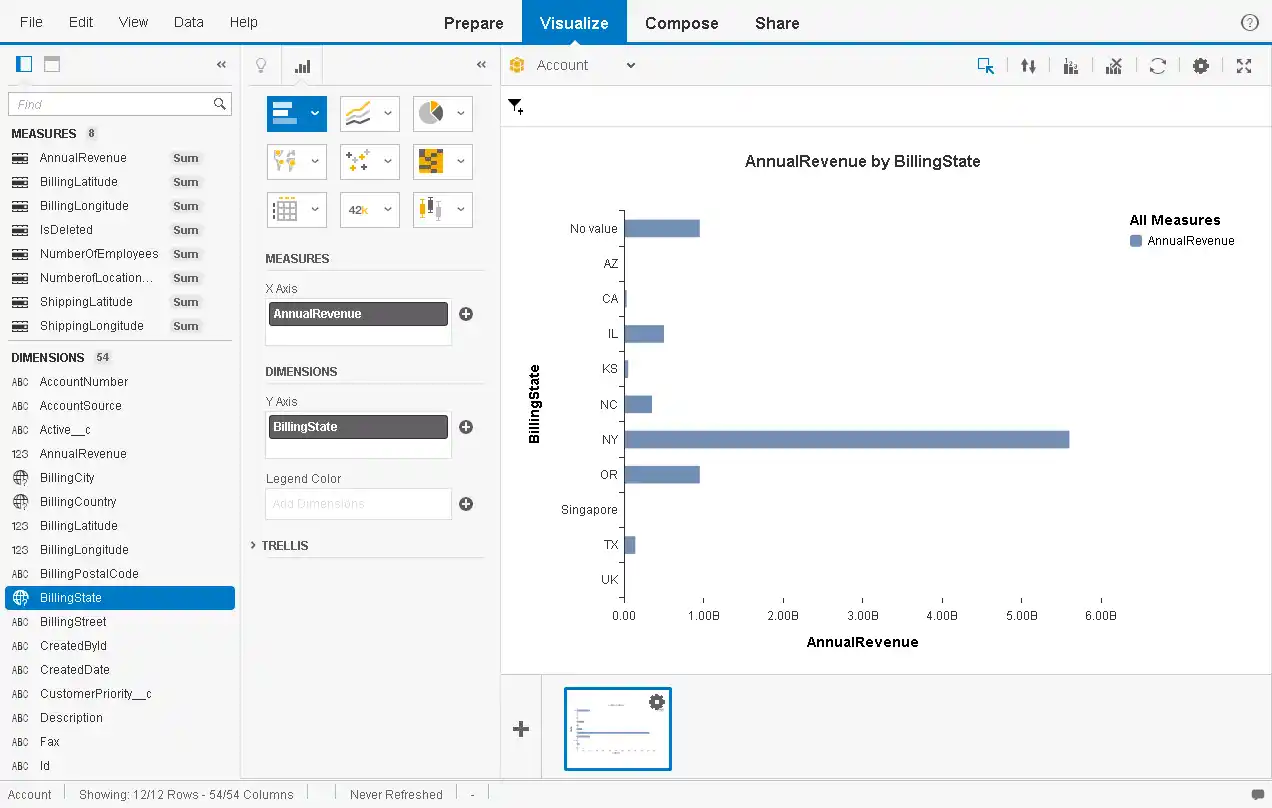
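SAP Lumira builds and sends the OData request for you, but it can help to confirm the endpoint and credentials independently. The Python sketch below, using only the standard library, encodes the API user's name and Authtoken as HTTP Basic credentials (base64 of `username:authtoken`), prepares a GET request for an entity set, and extracts rows from the JSON response. The helper names are hypothetical, and the sketch assumes the server honours `Accept: application/json`. Because the response shape depends on the OData version and format the server negotiates, the parser accepts both the v2 verbose layout (`{"d": {"results": [...]}}`) and the v4 layout (`{"value": [...]}`).

```python
import base64
import json
from typing import Any, Dict, List
from urllib.request import Request, urlopen


def basic_auth_header(username: str, authtoken: str) -> str:
    """HTTP Basic credentials: "Basic " + base64("username:authtoken")."""
    raw = f"{username}:{authtoken}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def build_request(url: str, username: str, authtoken: str) -> Request:
    """Prepare (but do not send) a GET request with the API user's credentials."""
    return Request(
        url,
        headers={
            "Authorization": basic_auth_header(username, authtoken),
            "Accept": "application/json",
        },
        method="GET",
    )


def parse_rows(payload: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Extract entity rows from an OData v2 (verbose) or v4 JSON collection."""
    if "value" in payload:
        rows = payload["value"]
    else:
        d = payload.get("d", {})
        rows = d.get("results", []) if isinstance(d, dict) else d
    # Drop the "__metadata" bookkeeping object that v2 attaches to each entity.
    return [{k: v for k, v in row.items() if k != "__metadata"} for row in rows]


def fetch_rows(url: str, username: str, authtoken: str) -> List[Dict[str, Any]]:
    """Send the request to a live API Server (requires network access)."""
    with urlopen(build_request(url, username, authtoken)) as resp:
        return parse_rows(json.load(resp))
```

Against a reachable server, `fetch_rows("https://your-server:8080/api.rsc/Customers", "your-user", "your-authtoken")` would return one dictionary per row; the user name and token shown are placeholders. Because Basic credentials are only base64-encoded, not encrypted, send them over HTTPS as in the example URL.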

Create Data Visualizations

After you have imported the data, you can create data visualizations in the Visualize room. Follow the steps below to create a basic chart.

In the Measures and Dimensions pane, drag measures and dimensions onto the x-axis and y-axis fields in the Visualization Tools pane. SAP Lumira automatically detects dimensions and measures from the metadata service of the API Server.
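In OData, a service's metadata is published as the `$metadata` document, which lists each entity type's properties and their EDM types. SAP Lumira's exact detection rules are not described in this article, so the Python sketch below uses an assumed heuristic purely to illustrate the idea: numeric EDM types (such as `Edm.Int32` or `Edm.Decimal`) become candidate measures and every other property becomes a dimension. The sample document, its `Revenue` and `Country` properties, and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Assumed heuristic: numeric EDM types are measures, everything else is a
# dimension. This is not SAP Lumira's documented rule.
NUMERIC_TYPES = {
    "Edm.Byte", "Edm.SByte", "Edm.Int16", "Edm.Int32", "Edm.Int64",
    "Edm.Decimal", "Edm.Double", "Edm.Single",
}

# Hypothetical, trimmed-down metadata document for a Customers entity set.
SAMPLE_EDMX = """<edmx:Edmx xmlns:edmx="http://schemas.microsoft.com/ado/2007/06/edmx" Version="1.0">
  <edmx:DataServices>
    <Schema xmlns="http://schemas.microsoft.com/ado/2008/09/edm" Namespace="Sample">
      <EntityType Name="Customers">
        <Key><PropertyRef Name="Id"/></Key>
        <Property Name="Id" Type="Edm.String"/>
        <Property Name="Country" Type="Edm.String"/>
        <Property Name="Revenue" Type="Edm.Decimal"/>
      </EntityType>
    </Schema>
  </edmx:DataServices>
</edmx:Edmx>"""


def _local(tag: str) -> str:
    """Strip the XML namespace, since EDMX namespaces vary by OData version."""
    return tag.rsplit("}", 1)[-1]


def classify_properties(edmx: str, entity_type: str) -> Dict[str, List[str]]:
    """Split one EntityType's properties into measures and dimensions."""
    root = ET.fromstring(edmx)
    result: Dict[str, List[str]] = {"measures": [], "dimensions": []}
    for elem in root.iter():
        if _local(elem.tag) == "EntityType" and elem.get("Name") == entity_type:
            for prop in elem:  # direct children: Key, Property, ...
                if _local(prop.tag) != "Property":
                    continue
                kind = "measures" if prop.get("Type") in NUMERIC_TYPES else "dimensions"
                result[kind].append(prop.get("Name", ""))
    return result
```

With the sample document, `Revenue` is classed as a measure and `Id` and `Country` as dimensions. An integer key column would be classed as a measure under this simple rule, which is one reason to review the suggested measures and dimensions before charting.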

By default, the SUM function is applied to all measures. Click the gear icon next to a measure to change the default summary.
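Conceptually, each measure on the chart is reduced per dimension value with the selected summary function, SUM by default. The Python sketch below shows that grouping for in-memory rows. The `Country` and `Revenue` field names, the sample rows, and the set of alternative summaries are hypothetical and are not a list of the options SAP Lumira offers.

```python
from collections import defaultdict
from statistics import mean
from typing import Any, Callable, Dict, Iterable, List, Mapping

# Illustrative summary functions; SUM is the default, as described above.
SUMMARIES: Dict[str, Callable[[List[float]], Any]] = {
    "SUM": sum,
    "AVG": mean,
    "MIN": min,
    "MAX": max,
    "COUNT": len,
}

# Hypothetical rows as they might arrive from the Customers entity set.
SAMPLE_ROWS = [
    {"Country": "US", "Revenue": 100},
    {"Country": "US", "Revenue": 50},
    {"Country": "DE", "Revenue": 70},
]


def summarize(rows: Iterable[Mapping[str, Any]], dimension: str,
              measure: str, summary: str = "SUM") -> Dict[Any, float]:
    """Group rows by one dimension and reduce one measure per group."""
    groups: Dict[Any, List[float]] = defaultdict(list)
    for row in rows:
        groups[row[dimension]].append(float(row[measure]))
    reduce_fn = SUMMARIES[summary]
    return {key: float(reduce_fn(values)) for key, values in groups.items()}
```

With the sample rows, `summarize(SAMPLE_ROWS, "Country", "Revenue")` returns `{'US': 150.0, 'DE': 70.0}`, and passing `"AVG"` as the summary returns `{'US': 75.0, 'DE': 70.0}`.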

- In the Visualization Tools pane, select the chart type.

- In the Chart Canvas pane, apply filters, sort by measures, add rankings, and update the chart with the current Databricks data.
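Filtering, sorting by a measure, and ranking can be sketched the same way on already-summarized values: drop unwanted members, sort descending by the measure, and keep the first N along with their rank. This Python sketch is illustrative only; `rank_top_n` and the sample totals are hypothetical, and tied values simply keep their input order.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical per-country totals, e.g. the result of a SUM per dimension.
SAMPLE_TOTALS = {"US": 150.0, "DE": 70.0, "FR": 90.0, "JP": 20.0}


def rank_top_n(
    totals: Dict[str, float],
    n: int,
    keep: Callable[[str, float], bool] = lambda member, value: True,
) -> List[Tuple[int, str, float]]:
    """Filter members, sort descending by the measure, and rank the top n."""
    filtered = [(m, v) for m, v in totals.items() if keep(m, v)]
    # sorted() is stable even with reverse=True, so ties keep input order.
    ordered = sorted(filtered, key=lambda mv: mv[1], reverse=True)
    return [(rank, m, v) for rank, (m, v) in enumerate(ordered[:n], start=1)]
```

For example, `rank_top_n(SAMPLE_TOTALS, 2)` returns `[(1, 'US', 150.0), (2, 'FR', 90.0)]`.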