Visualize Databricks Data in TIBCO Spotfire through OData

Integrate Databricks data into dashboards in TIBCO Spotfire.

OData is a major protocol enabling real-time communication among cloud-based, mobile, and other online applications. The CData API Server provides Databricks data to OData consumers like TIBCO Spotfire. This article shows how to use the API Server and Spotfire's built-in support for OData to access Databricks data in real time.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks, from Runtime versions 9.1 through 13.X to both the Pro and Classic versions of Databricks SQL.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Authenticate securely in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, others use our live connectivity solutions to federate access between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMSs.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Set Up the API Server

If you have not already done so, download the CData API Server. Once you have installed the API Server, follow the steps below to begin producing secure Databricks OData services:

Connect to Databricks

To work with Databricks data from TIBCO Spotfire, we start by creating and configuring a Databricks connection. Follow the steps below to configure the API Server to connect to Databricks data:

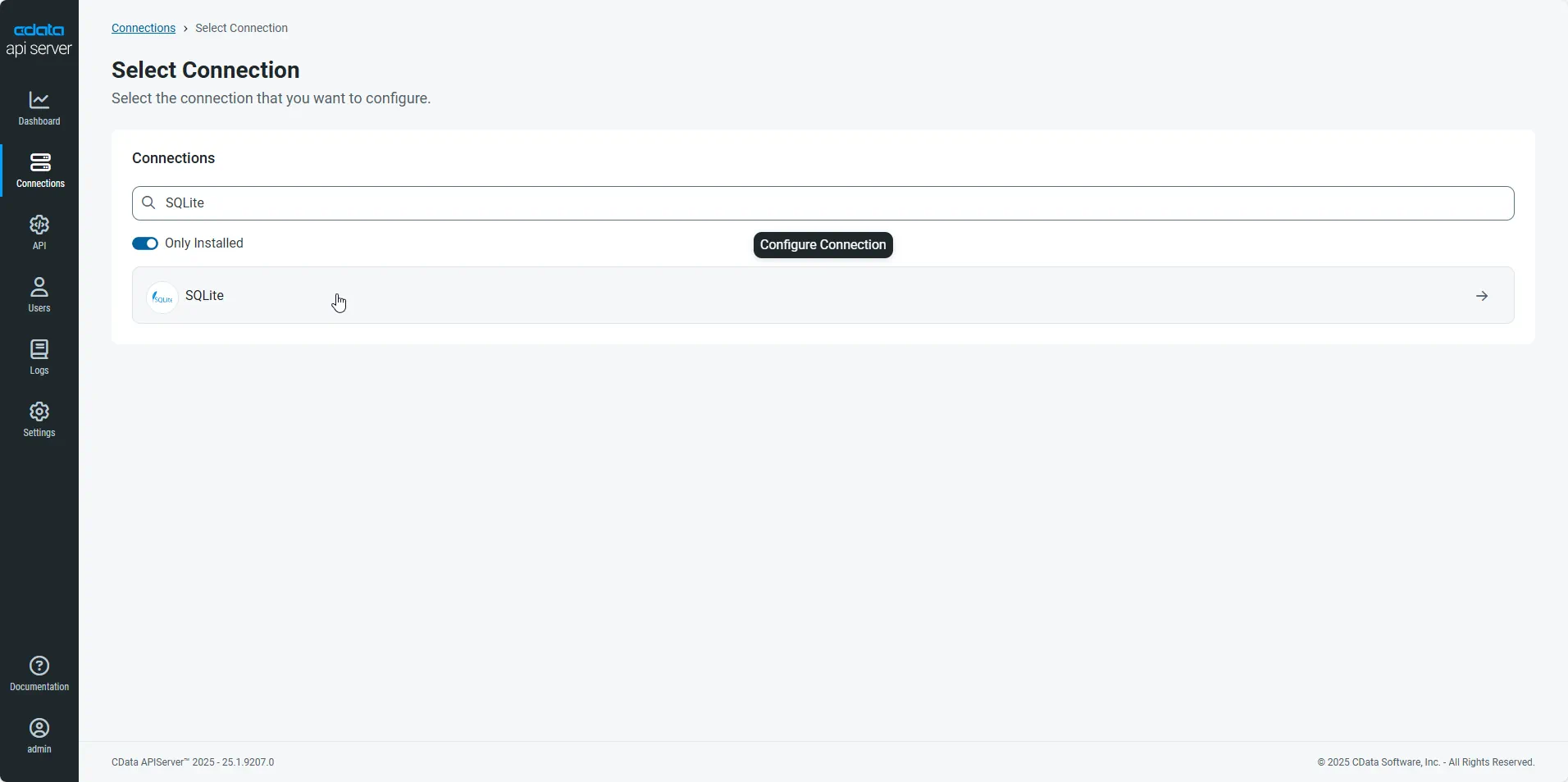

- First, navigate to the Connections page.

-

Click Add Connection and then search for and select the Databricks connection.

-

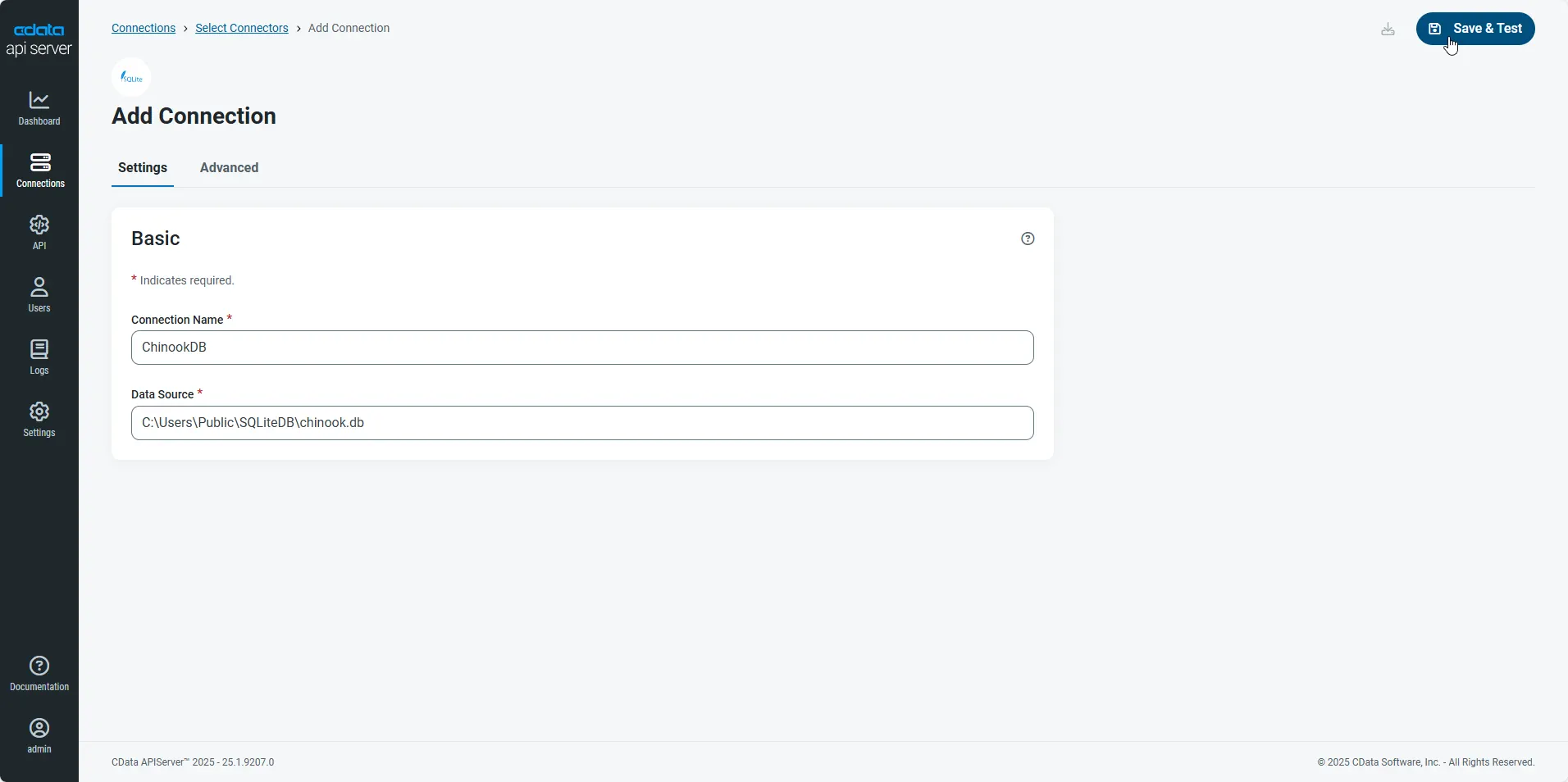

Enter the necessary authentication properties to connect to Databricks.

To connect to a Databricks cluster, set the properties as described below.

Note: The needed values can be found in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).

- After configuring the connection, click Save & Test to confirm a successful connection.
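The three properties above form a small configuration. As a minimal sketch, assuming the semicolon-separated `Name=Value;` connection-string format used by CData connectors, the following Python helper checks that Server, HTTPPath, and Token are all set and joins them into one string you could keep with your other settings. The helper `build_connection_string` is hypothetical (it is not part of the API Server), and every sample value is a placeholder rather than a real workspace value.

```python
from __future__ import annotations

# Minimal sketch: assemble the Databricks connection properties into a
# CData-style "Name=Value;" string. Helper name and sample values are hypothetical.
REQUIRED_PROPERTIES = ("Server", "HTTPPath", "Token")


def build_connection_string(props: dict[str, str]) -> str:
    """Return 'Name=Value;' pairs for the given properties, in insertion order.

    Raises ValueError if any required property is missing or empty.
    Values containing ';' or '=' would need quoting, which this sketch omits.
    """
    missing = [name for name in REQUIRED_PROPERTIES if not props.get(name)]
    if missing:
        raise ValueError("missing connection properties: " + ", ".join(missing))
    return "".join(f"{name}={value};" for name, value in props.items())


if __name__ == "__main__":
    example = {
        # Placeholders: copy the real values from the cluster's JDBC/ODBC tab
        # and from the Access Tokens tab of User Settings.
        "Server": "adb-0000000000000000.0.azuredatabricks.net",
        "HTTPPath": "sql/protocolv1/o/0000000000000000/0000-000000-example",
        "Token": "<personal-access-token>",
    }
    print(build_connection_string(example))
```

Failing fast on a missing property mirrors what Save & Test would report, but before anything is sent to the cluster.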

Configure API Server Users

Next, create a user to access your Databricks data through the API Server. You can add and configure users on the Users page. Follow the steps below to configure and create a user:

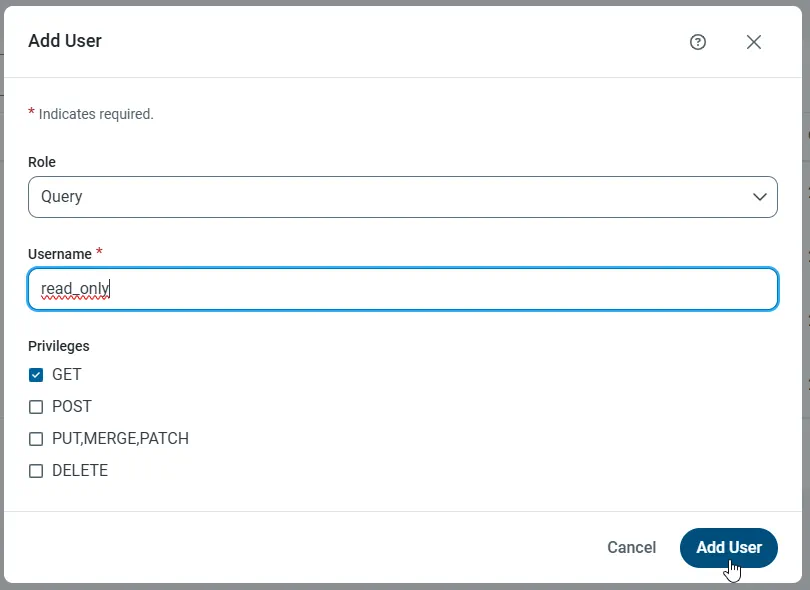

- On the Users page, click Add User to open the Add User dialog.

-

Next, set the Role, Username, and Privileges properties and then click Add User.

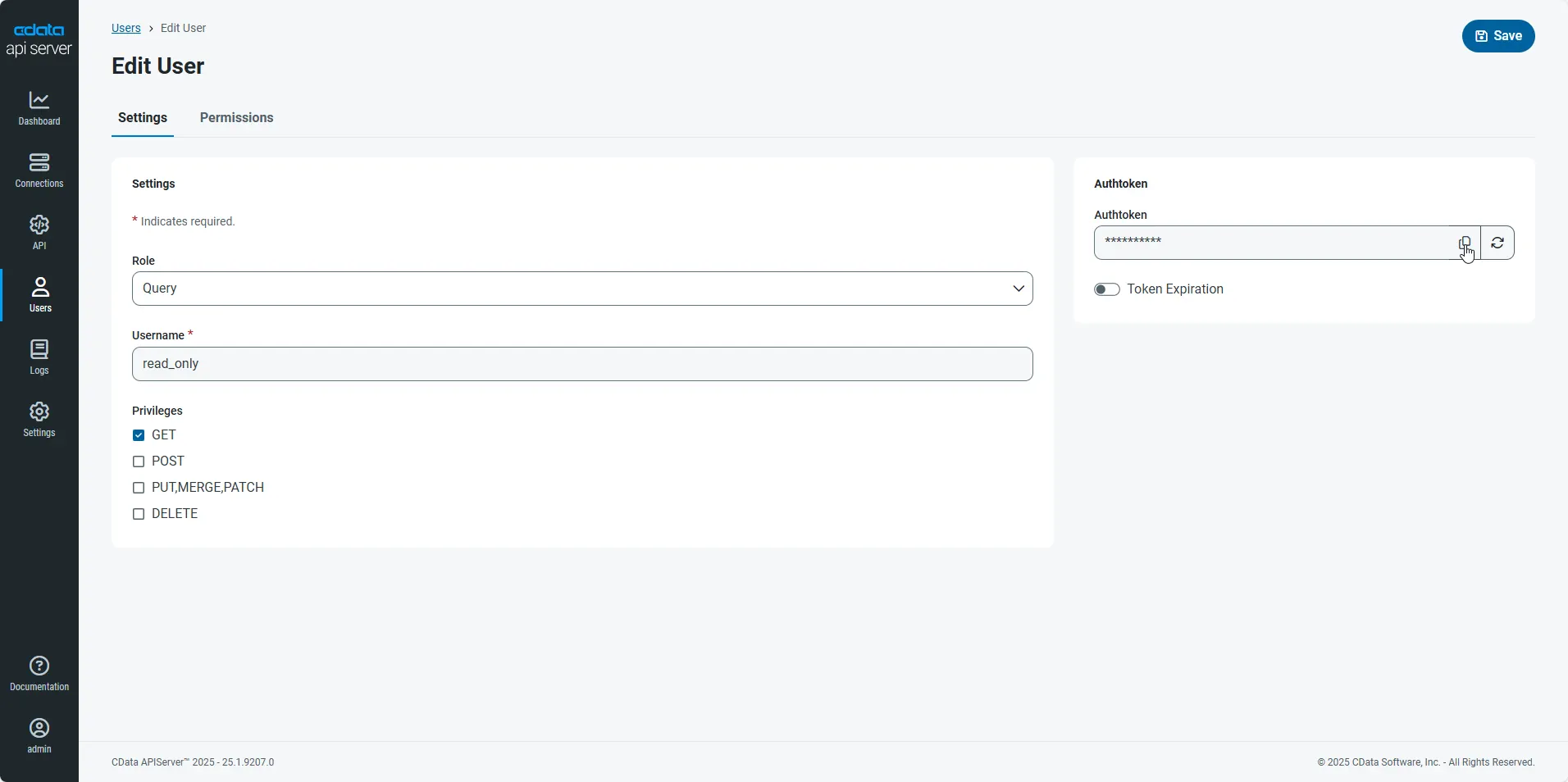

-

An Authtoken is then generated for the user. You can find the Authtoken and other information for each user on the Users page:

Creating API Endpoints for Databricks

Having created a user, you are ready to create API endpoints for the Databricks tables:

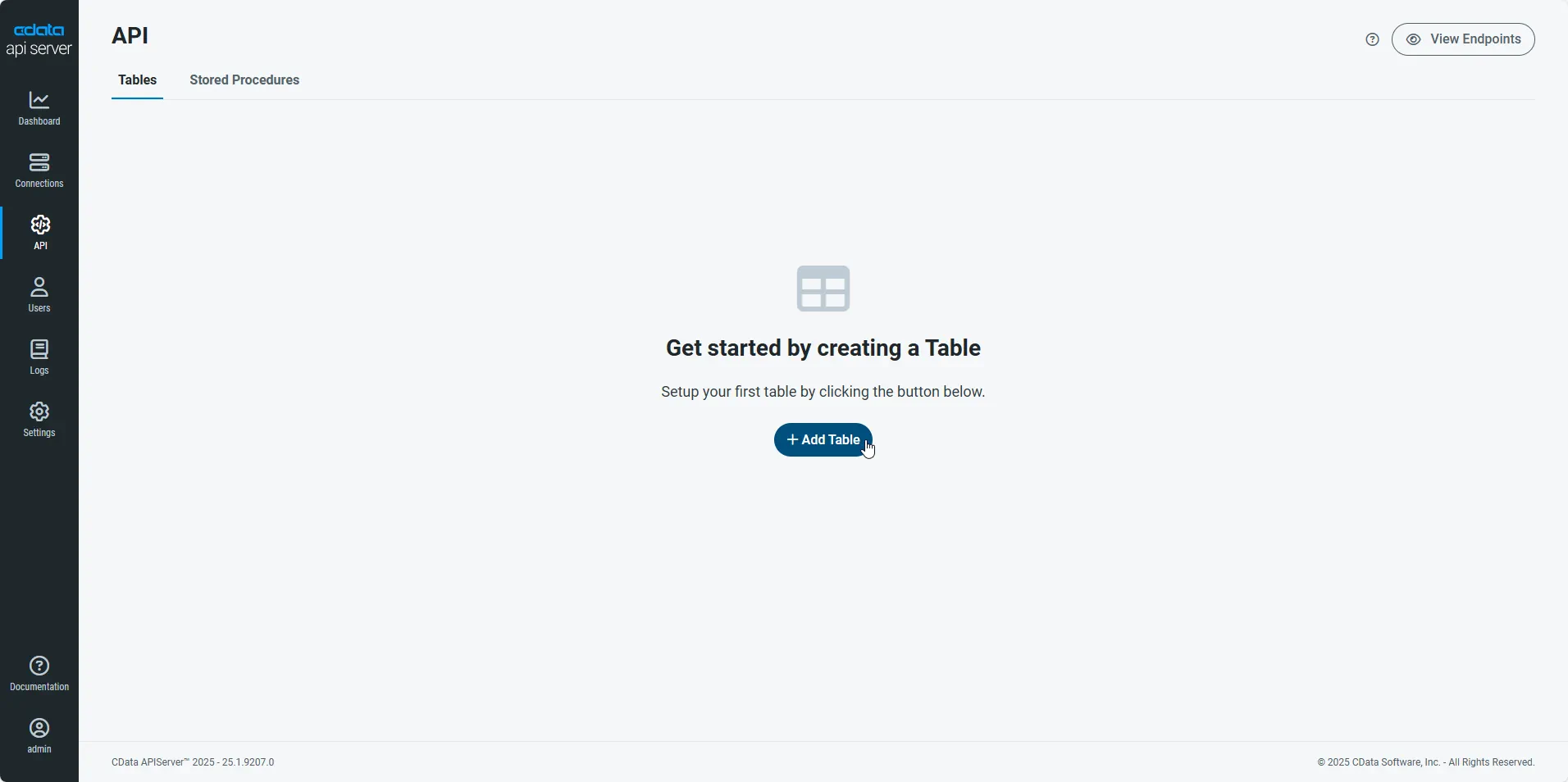

-

First, navigate to the API page and then click

Add Table

.

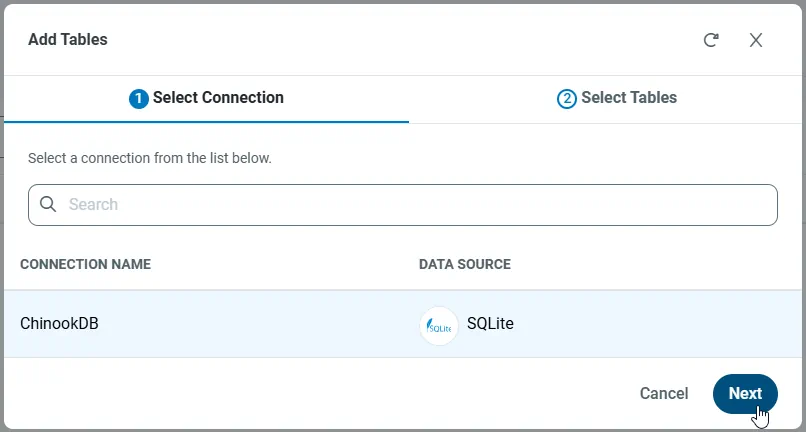

-

Select the connection you wish to access and click Next.

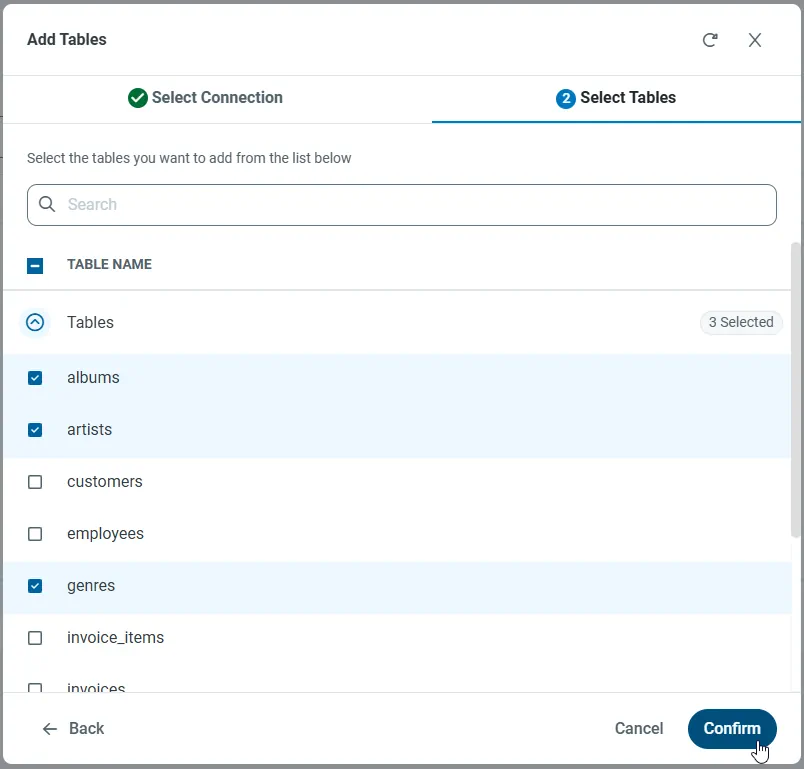

-

With the connection selected, create endpoints by selecting each table and then clicking Confirm.

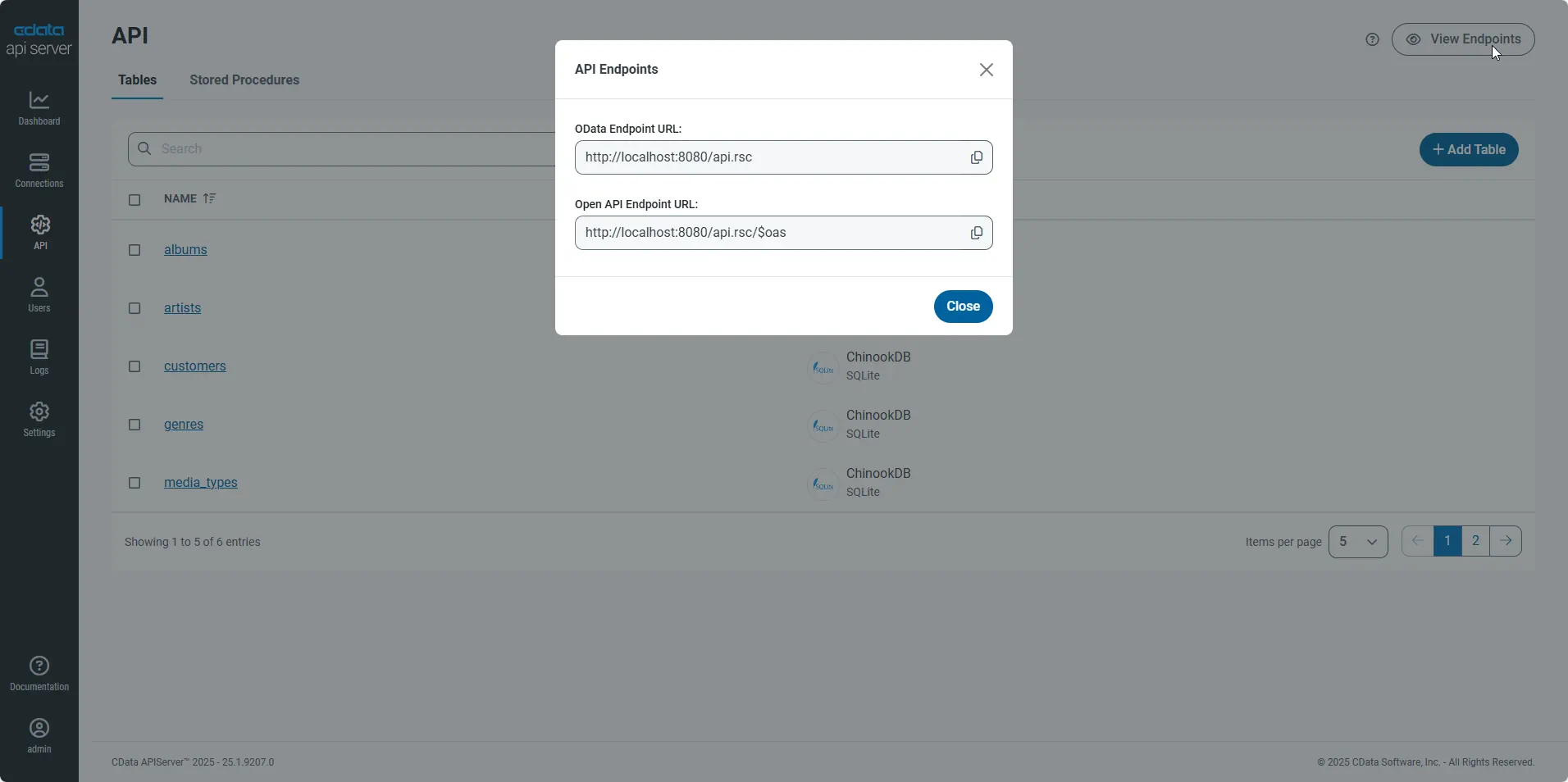

Gather the OData URL

Having configured a connection to Databricks data, created a user, and added resources to the API Server, you now have an easily accessible REST API based on the OData protocol for those resources. From the API page in API Server, you can view and copy the API endpoints for the API.
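Because the endpoints follow the OData protocol, any HTTP client can query them with the standard OData system query options such as `$select`, `$filter`, and `$top`. The Python sketch below only builds such a URL. It assumes that each table added on the API page is exposed as a resource directly under the service root (for example `http://localhost:8080/api.rsc/Customers`), so check the endpoints listed on the API page of your installation. The function name `odata_query_url` and the `City eq 'Berlin'` filter value are hypothetical.

```python
from urllib.parse import quote, urlencode

# Service root from this article's example; adjust host and port to your install.
SERVICE_ROOT = "http://localhost:8080/api.rsc"


def odata_query_url(service_root, resource, select=None, filter_expr=None, top=None):
    """Build an OData query URL with optional $select, $filter, and $top options.

    Assumes the resource (table) is addressed directly under the service root.
    """
    params = {}
    if select:
        params["$select"] = ",".join(select)
    if filter_expr:
        params["$filter"] = filter_expr
    if top is not None:
        params["$top"] = str(top)
    url = f"{service_root.rstrip('/')}/{quote(resource)}"
    if params:
        # quote (not quote_plus) encodes spaces as %20; '$', ',' and "'" stay readable.
        url += "?" + urlencode(params, safe="$,'", quote_via=quote)
    return url


if __name__ == "__main__":
    print(odata_query_url(SERVICE_ROOT, "Customers",
                          select=["CompanyName", "City"],
                          filter_expr="City eq 'Berlin'", top=10))
```

Run as a script, it prints `http://localhost:8080/api.rsc/Customers?$select=CompanyName,City&$filter=City%20eq%20'Berlin'&$top=10`. Keeping `$select` narrow limits the columns the API Server has to fetch from Databricks.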

Create Data Visualizations on External Databricks Data

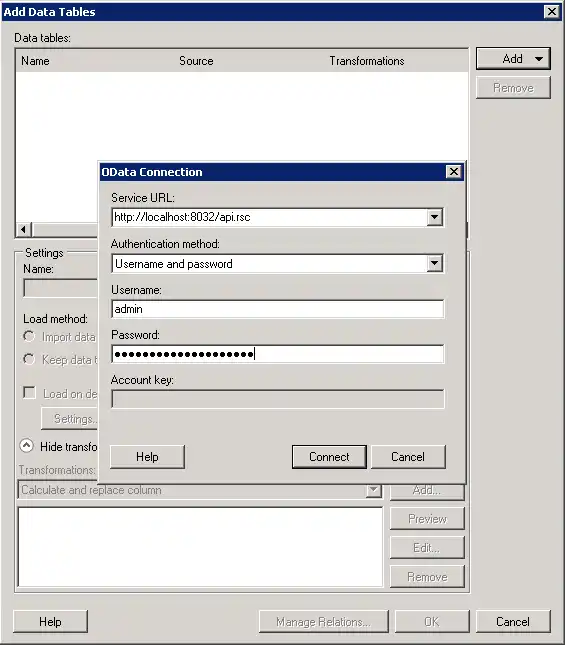

- Open Spotfire and click Add Data Tables -> OData.

- In the OData Connection dialog, enter the following information:

- Service URL: Enter the API Server's OData endpoint. For example:

http://localhost:8080/api.rsc

- Authentication Method: Select Username and Password.

- Username: Enter the username of an API Server user. You can create API users on the Users page.

- Password: Enter the authtoken of an API Server user.

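Before configuring Spotfire, it can help to confirm that the service URL and credentials work on their own. With the settings above, Spotfire sends a username and the user's authtoken as the password; this sketch assumes that maps to HTTP Basic authentication. The Python example below uses only the standard library to build such a request for the service root and report the HTTP status. The function names, the user name `apiuser`, and the token are hypothetical placeholders.

```python
import base64
import urllib.error
import urllib.request

SERVICE_ROOT = "http://localhost:8080/api.rsc"


def build_request(service_root: str, username: str, authtoken: str) -> urllib.request.Request:
    """Prepare a GET request that sends the authtoken as the Basic-auth password."""
    token = base64.b64encode(f"{username}:{authtoken}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(service_root, method="GET")
    request.add_header("Authorization", f"Basic {token}")
    return request


def check_service(service_root: str, username: str, authtoken: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code, including error statuses such as 401.

    Network failures (for example, connection refused) still raise urllib.error.URLError.
    """
    request = build_request(service_root, username, authtoken)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code
```

For example, `check_service(SERVICE_ROOT, "apiuser", "<authtoken>")` returns the status code the API Server sends back; a rejected login typically shows up as 401, which points to the username or authtoken rather than the service URL.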

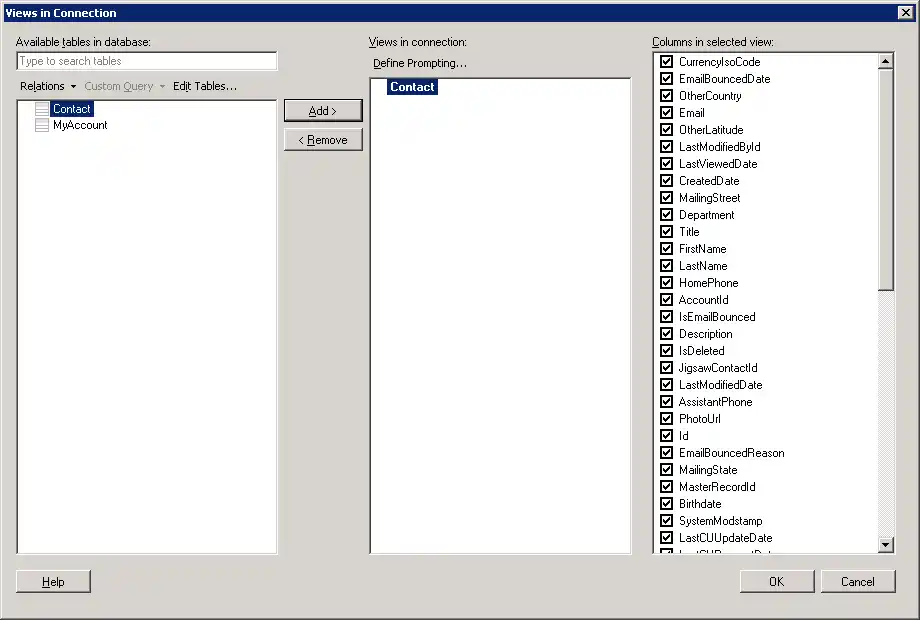

- Select the tables and columns you want to add to the dashboard. This example uses Customers.

- If you want to work with the live data, click the Keep Data Table External option. This option enables your dashboards to reflect changes to the data in real time.

If you want to load the data into memory and process the data locally, click the Import Data Table option. This option is better for offline use or if a slow network connection is making your dashboard less interactive.

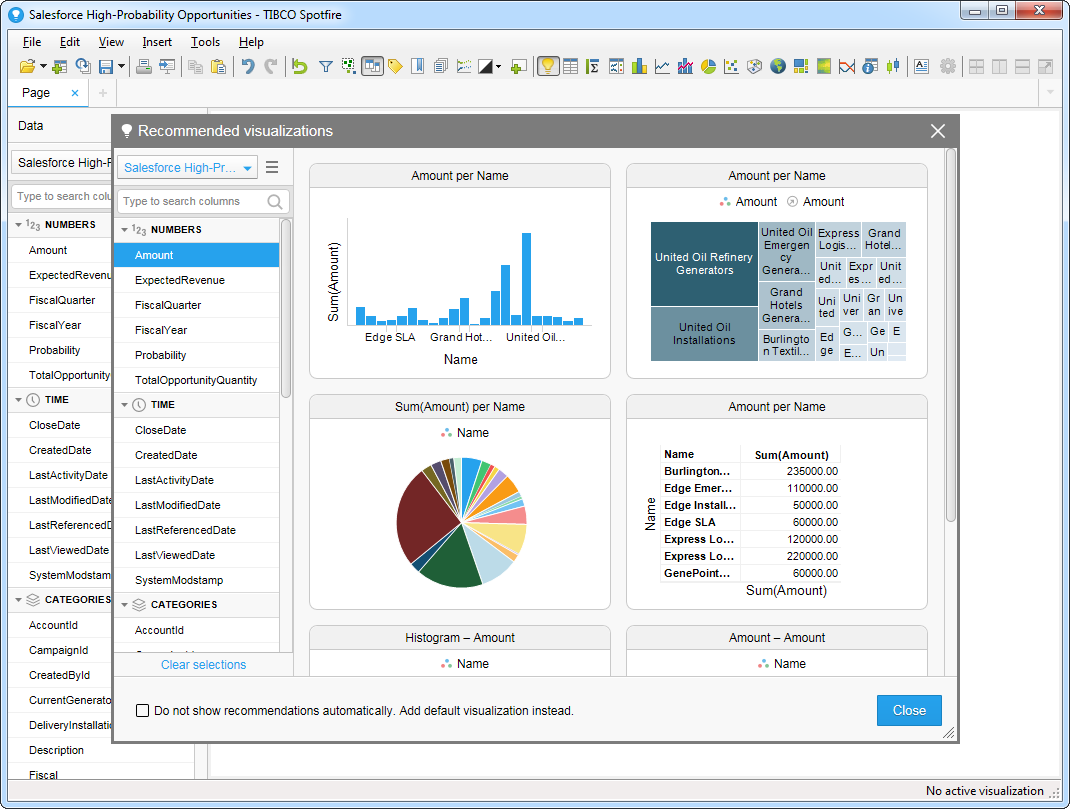

- After adding tables, the Recommended Visualizations wizard is displayed. When you select a table, Spotfire uses the column data types to detect number, time, and category columns. This example uses CompanyName in the Numbers section and City in the Categories section.

After adding several visualizations in the Recommended Visualizations wizard, you can make other modifications to the dashboard. For example, to apply a filter, click the Filter button; the available filters for each query are then displayed in the Filters pane.