Extract, Transform, and Load Databricks Data in Informatica PowerCenter

Create a simple Workflow for Databricks data in Informatica PowerCenter.

Informatica provides a powerful, elegant means of transporting and transforming your data. The CData ODBC Driver for Databricks is a standards-based driver that integrates with Informatica's data transport and transformation features. This tutorial shows how to create a simple Workflow in Informatica PowerCenter that extracts Databricks data and loads it into a flat file.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks, from Runtime Versions 9.1 - 13.X to both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Securely authenticate in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, several customers use our live connectivity solutions to federate connectivity between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMS.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Add Databricks as an ODBC Data Source

If you have not already, install the driver on the PowerCenter server and client machines. On both machines, specify the connection properties in an ODBC DSN (data source name). This is the last step of the driver installation. You can use the Microsoft ODBC Data Source Administrator to create and configure ODBC DSNs.

To connect to a Databricks cluster, set the properties as described below.

Note: To find the needed values in your Databricks instance, navigate to Clusters, select the desired cluster, and open the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
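The three properties above can also be exercised outside PowerCenter before building the workflow. The following is a minimal Python sketch, not part of the CData tooling: `build_connection_string` and `check_dsn` are hypothetical helper names, the driver name registered with ODBC is assumed to be "CData ODBC Driver for Databricks", and the DSN name is assumed to be the default 'CData Databricks Sys'. The second helper relies on the third-party `pyodbc` package, which must be installed separately.

```python
def build_connection_string(server, http_path, token,
                            driver="CData ODBC Driver for Databricks"):
    """Assemble a DSN-less ODBC connection string from the three properties.

    The driver name is an assumption; check the name registered on your machine
    in the ODBC Data Source Administrator. Values containing ';' or braces
    would need extra escaping that this sketch does not handle.
    """
    props = {
        "Driver": "{" + driver + "}",
        "Server": server,       # Server Hostname of the cluster
        "HTTPPath": http_path,  # HTTP Path of the cluster
        "Token": token,         # personal access token
    }
    return ";".join(f"{key}={value}" for key, value in props.items()) + ";"


def check_dsn(dsn="CData Databricks Sys"):
    """Connect through an existing DSN and return the visible table names."""
    import pyodbc  # third-party package, imported lazily so the builder works without it

    conn = pyodbc.connect(f"DSN={dsn}", autocommit=True)
    try:
        return [row.table_name for row in conn.cursor().tables()]
    finally:
        conn.close()
```

If `check_dsn()` returns a list of table names on both the server and client machines, the DSN is likely configured well enough for PowerCenter to import sources from it.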

Create an ETL Workflow in PowerCenter

Follow the steps below to create a workflow in PowerCenter to pull Databricks data and push it into a flat file.

Create a Source Using the ODBC Driver

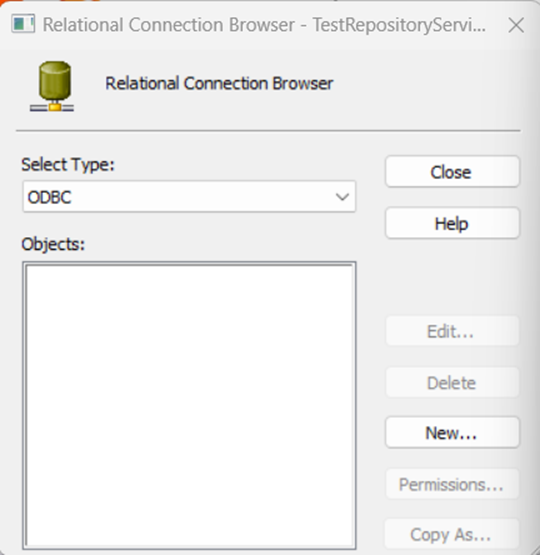

- In PowerCenter Workflow Manager, add a new ODBC relational connection by going to Connections -> Relational, and in the first window select the ODBC type and click New.

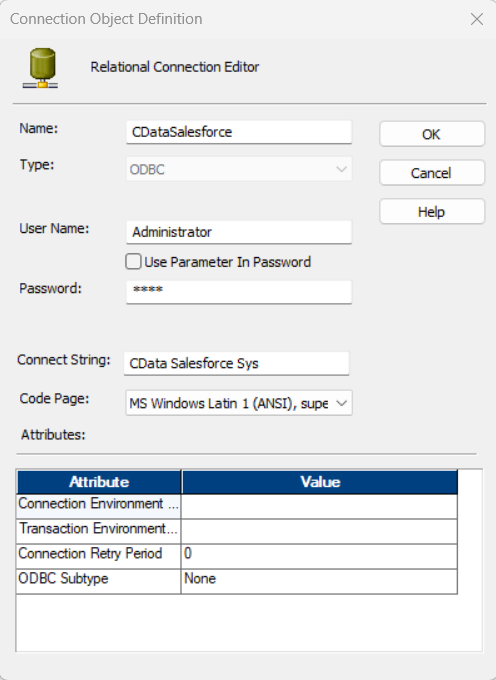

- In the Relational Connection Editor, name the new connection and set the User Name and Password to the credentials you use to connect to your Repository. For Connection String, enter the name of your System DSN, which by default is 'CData Databricks Sys'.

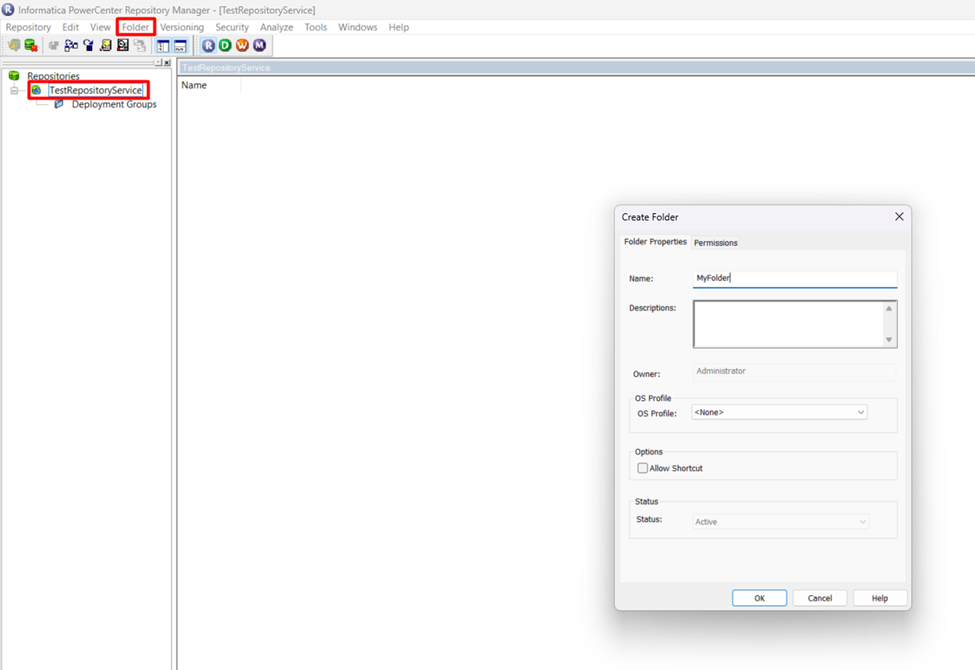

- In PowerCenter Designer, connect to your repository and open your folder. If you do not already have a folder created, you will need to manually create a folder for your repository. To create a folder, open Informatica PowerCenter Repository Manager, connect to your Repository Service and go to Folder -> Create to create a new folder.

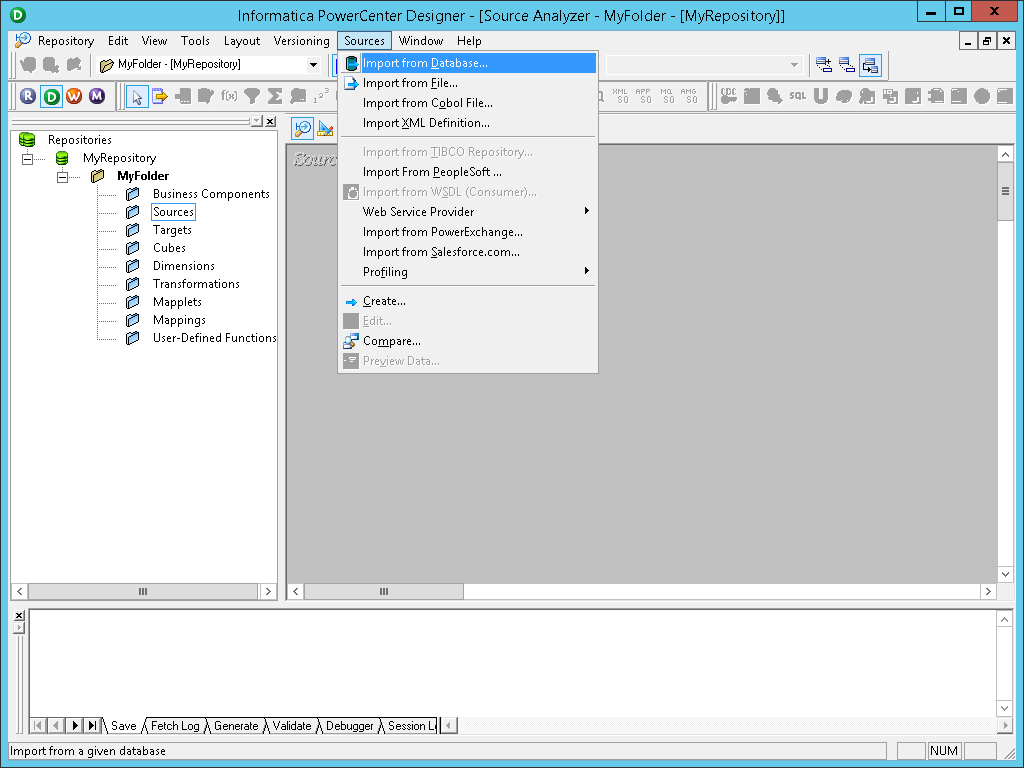

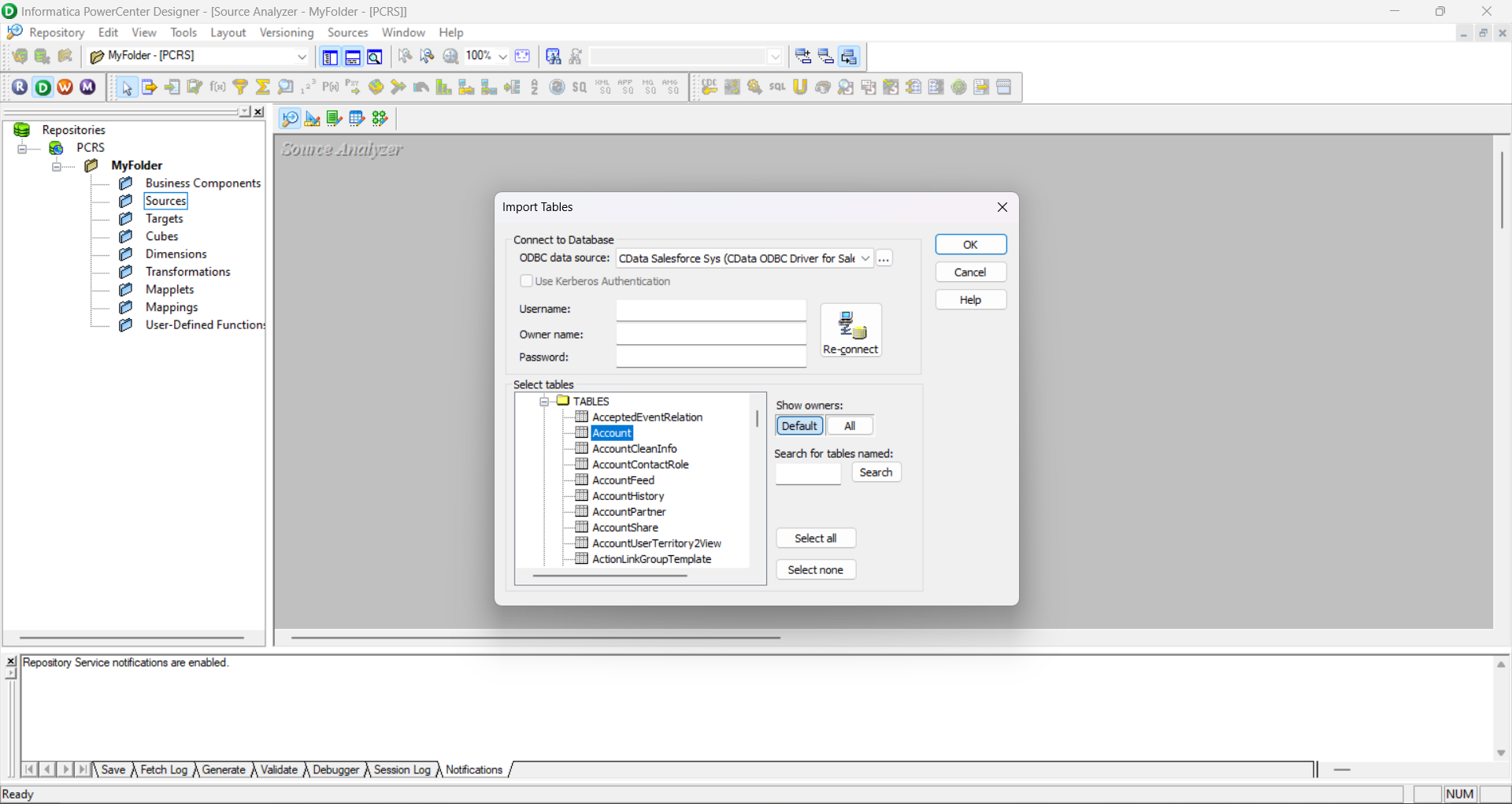

- Select the Source Analyzer, click the Sources menu, and select Import from Database...

- In the drop-down menu for ODBC data source, select the DSN you previously configured (CData Databricks Sys).

- Click Connect and select the tables and views to include.

- Click OK.

Create a Flat File Target Based on the Source

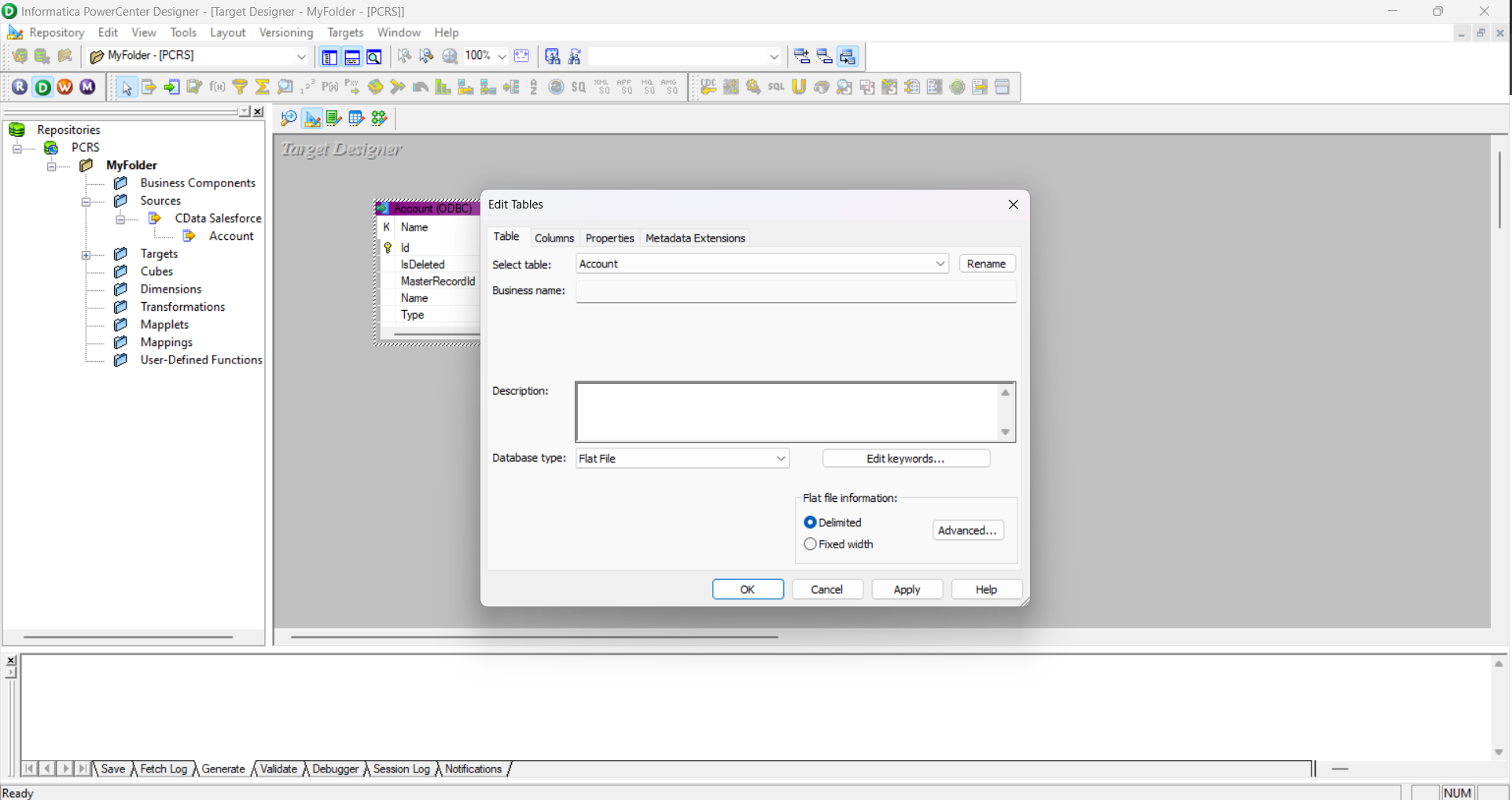

- Select the Target Designer and drag and drop the previously created source onto the workspace. Using the existing source copies the columns into the target.

- Right-click the new target, click Edit, and change the database type to Flat File.

Create a Mapping Between Databricks Data and a Flat File

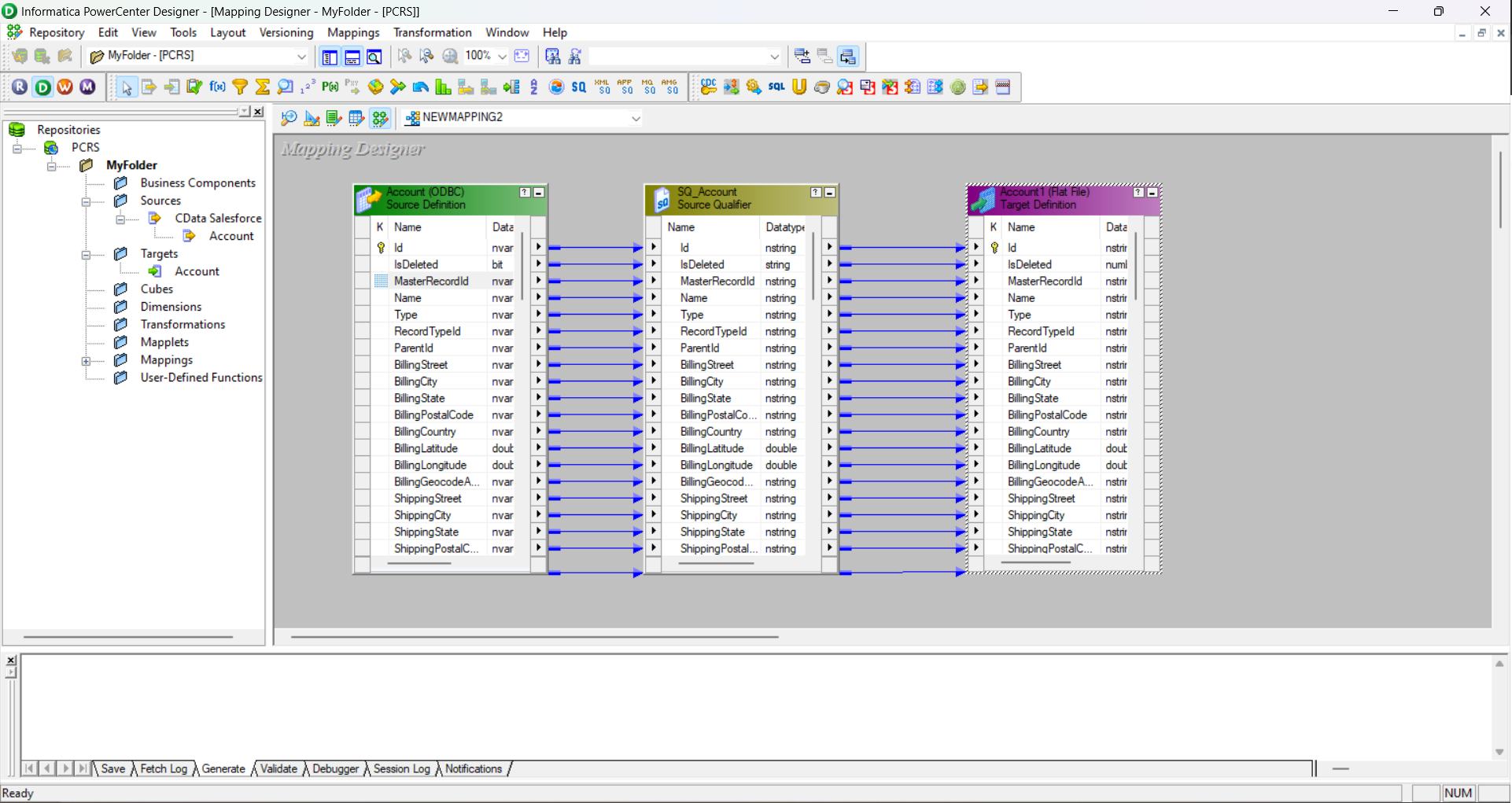

- Click on the Mapping Designer.

- Drag the source and target to the workspace (name the new mapping, if prompted).

- Right-click on the workspace and select Autolink by Name.

- Drag the columns from the source qualifier to the target definition.

- Save the folder (Ctrl + S).

Create Workflow Based on the Mapping

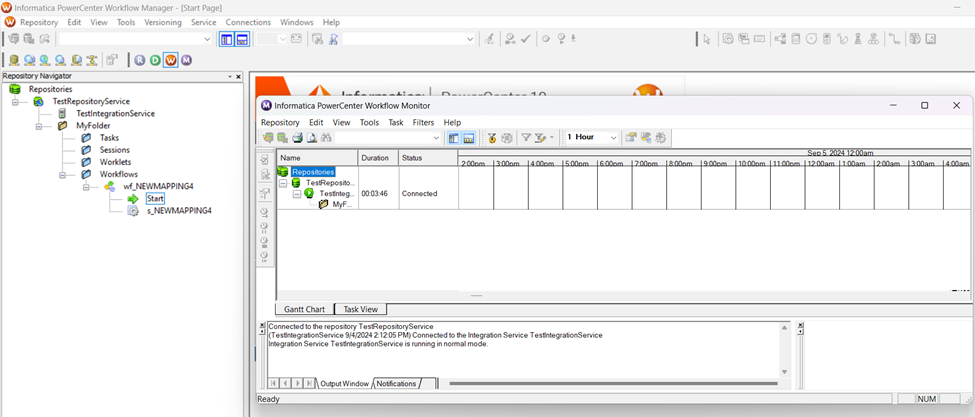

With the source, target, and mapping created and saved, you are now ready to create the workflow.

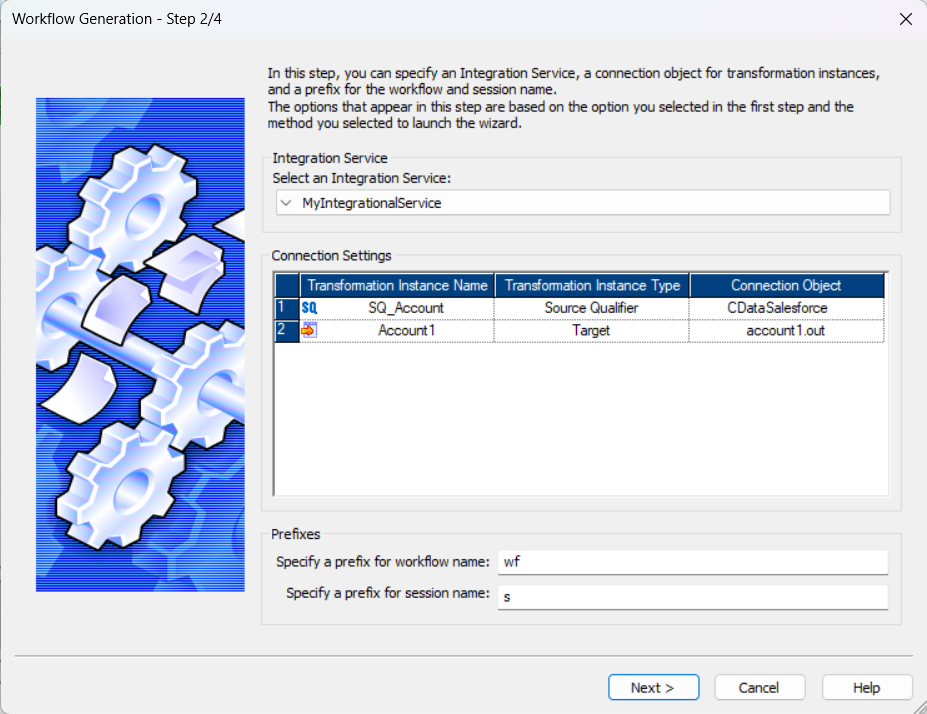

- Right-click the mapping and select Generate Workflow to open the Workflow Generation wizard.

- Create a workflow with a non-reusable session.

- Ensure that you have properly configured the connection object and set the prefixes. If you have not already done so, you will need to create an Integration Service. To create an Integration Service, open your Administrator site, and under Domain, create a new PowerCenter Integration Service. Under the PowerCenter Repository Service, select the Repository you are referring to, and as Username and Password, use your Administrator login information.

- Configure the Connection as needed.

- Review the Workflow and click Finish.

With a workflow created, you can open the PowerCenter Workflow Manager to access and start the workflow, quickly transferring Databricks data into a flat file. With the CData ODBC Driver for Databricks, you can configure sources and targets in PowerCenter to integrate Databricks data into any of the elegant and powerful features in Informatica PowerCenter.
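After the workflow runs, a quick sanity check on the output file can confirm that rows arrived and that every row has the same number of fields. The following is a minimal Python sketch under stated assumptions: `summarize_flat_file` is a hypothetical helper, the file is assumed to be comma-delimited and UTF-8 encoded, and the output path depends on how the session's flat file target is configured.

```python
import csv
from pathlib import Path


def summarize_flat_file(path, delimiter=","):
    """Return (row_count, widths) for a delimited flat file.

    widths is the set of distinct field counts seen across non-empty rows;
    a single value in the set means every row has the same number of columns.
    """
    row_count = 0
    widths = set()
    with Path(path).open(newline="", encoding="utf-8") as handle:
        for row in csv.reader(handle, delimiter=delimiter):
            if not row:  # skip blank lines
                continue
            row_count += 1
            widths.add(len(row))
    return row_count, widths
```

For example, `summarize_flat_file("customers.out")` (a hypothetical file name) returns the row count together with the set of column counts. More than one value in that set suggests a delimiter or quoting mismatch between the target definition and the data.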